Trying out mTCP

- 1. Trying out mTCP: Userspace TCP/IP stack for PacketShader I/O engine. Hajime Tazaki, High-speed PC Router #3, 2014/5/15

- 2. What is mTCP?
  - Member of the PacketShader family (KAIST)
  - Userspace stack (kernel bypass)
  - Works with DPDK, PacketShader I/O, netmap
  - Uses a range of speed-up techniques (fast packet I/O, affinity-accept, RSS, etc.)
  - Short flows: 25 times faster than Linux
  - [mTCP14] Jeong et al., mTCP: A Highly Scalable User-level TCP Stack for Multicore Systems, USENIX NSDI, April 2014

- 3. The name...

- 4. Why Userspace Stack? [mTCP14]
  - Problems
    - Lack of connection locality
    - Shared file descriptor space
    - Inefficient per-packet processing
    - System call overhead
    - Kernel stack performance saturation

- 5. Kernel stack performance [mTCP14]
  - The application can hardly use the CPU
  - Multiple cores are used inefficiently

- 6. mTCP Design [mTCP14]

- 7. mTCP Design
  - Packet batching (ps I/O engine)
  - Fewer system calls
  - Fewer context switches as well
  - Cross-CPU operations kept to a minimum
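
The link between batching and system call count can be illustrated with a small cost model. This is only a sketch: the per-call and per-packet costs are hypothetical numbers chosen for illustration, not measurements of mTCP or the ps I/O engine, and the function names are made up for this example.

```python
import math


def syscalls_needed(num_packets: int, batch_size: int) -> int:
    """Number of I/O calls needed when each call carries up to batch_size packets."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return math.ceil(num_packets / batch_size)


def cost_per_packet(batch_size: int, per_call_cost: float, per_packet_cost: float) -> float:
    """Average cost per packet when a fixed per-call cost is shared by a full batch."""
    return per_call_cost / batch_size + per_packet_cost


if __name__ == "__main__":
    # Hypothetical costs in arbitrary time units, for illustration only.
    PER_CALL, PER_PACKET = 1000.0, 50.0
    for batch in (1, 8, 64):
        print(batch, syscalls_needed(10_000, batch),
              cost_per_packet(batch, PER_CALL, PER_PACKET))
```

Under this model the fixed per-call cost shrinks in proportion to the batch size, while the per-packet work stays the same, which is why batching helps most when packets are small.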

- 8. mTCP Design (cont'd) [mTCP14]

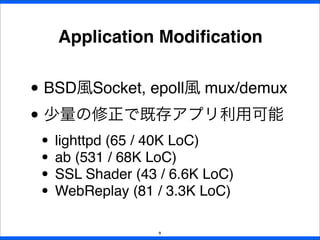

- 9. Application Modification
  - BSD-like socket API, epoll-like mux/demux
  - Existing applications can be used with small modifications (lines changed / total LoC)
    - lighttpd (65 / 40K LoC)
    - ab (531 / 68K LoC)
    - SSL Shader (43 / 6.6K LoC)
    - WebReplay (81 / 3.3K LoC)
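
To put the modification counts in perspective, the share of each code base that was touched can be worked out directly from the slide's figures. The totals are the rounded "K" values from the slide, so the percentages are approximate; the dictionary and function names are made up for this example.

```python
# (lines changed, total lines of code) as listed on the slide; totals are rounded.
PORTS = {
    "lighttpd": (65, 40_000),
    "ab": (531, 68_000),
    "SSL Shader": (43, 6_600),
    "WebReplay": (81, 3_300),
}


def changed_percent(changed: int, total: int) -> float:
    """Percentage of the code base that was modified."""
    return 100.0 * changed / total


if __name__ == "__main__":
    for name, (changed, total) in PORTS.items():
        print(f"{name}: {changed_percent(changed, total):.2f}% of lines changed")
```

Every application on the list stays below 3% of lines changed.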

- 10. mTCP performance (64B ping-pong transaction) [mTCP14]
  - x25 over Linux
  - x5 over SO_REUSEPORT
  - x3 over MegaPipe

- 11. Trying mTCP

- 12. Hands-on
  - https://github.com/eunyoung14/mtcp
  - Q1: Port iperf to mTCP (easy?)
  - Q2: Does iperf get faster?

- 13. Porting iperf for mTCP
  - socket() => mtcp_socket()
  - An LD_PRELOAD library might be enough.
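
As a rough aid for this kind of port, the sketch below scans C source text and reports calls that have an mTCP counterpart. Only socket() => mtcp_socket() and mtcp_write appear on the slides; the other name pairs are assumptions based on the mTCP API headers and should be checked against the version in use. The mTCP calls also take an extra per-thread context argument, so this is not a pure rename, and the script only reports candidates. It does not skip comments or string literals.

```python
import re

# BSD/epoll call -> mTCP counterpart. Pairs other than socket and write are
# assumptions to verify against mtcp_api.h and mtcp_epoll.h.
MTCP_COUNTERPART = {
    "socket": "mtcp_socket",
    "bind": "mtcp_bind",
    "listen": "mtcp_listen",
    "accept": "mtcp_accept",
    "connect": "mtcp_connect",
    "read": "mtcp_read",
    "write": "mtcp_write",
    "close": "mtcp_close",
    "epoll_create": "mtcp_epoll_create",
    "epoll_ctl": "mtcp_epoll_ctl",
    "epoll_wait": "mtcp_epoll_wait",
}

# Match a bare call such as "socket(" but not "mtcp_socket(", "x.read(" or "p->read(".
CALL_RE = re.compile(r"(?<![\w.>])(" + "|".join(MTCP_COUNTERPART) + r")\s*\(")


def find_calls(source):
    """Return (line number, call name, mTCP counterpart) for each candidate call."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in CALL_RE.finditer(line):
            name = match.group(1)
            hits.append((lineno, name, MTCP_COUNTERPART[name]))
    return hits


if __name__ == "__main__":
    # Hypothetical C fragment used only to exercise the scanner.
    sample = (
        "int s = socket(AF_INET, SOCK_STREAM, 0);\n"
        "connect(s, addr, len);\n"
        "n = write(s, buf, 64);\n"
        "mtcp_close(mctx, s);\n"
    )
    for lineno, name, counterpart in find_calls(sample):
        print(f"line {lineno}: {name}() -> {counterpart}()")
```

Calls that are already prefixed, such as mtcp_close in the sample, are left out of the report.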

- 15. Performance
  - PC0: mTCP-ed iperf
    - CPU: Xeon E5-2420 1.90GHz (6core)
    - NIC: Intel X520
    - Linux 2.6.39.4 (ps_ixgbe.ko)
  - PC1: vanilla Linux
    - CPU: Core i7-3770K 3.50GHz (8core)
    - NIC: Intel X520-2
    - Linux 3.8.0-19 (ixgbe.ko)

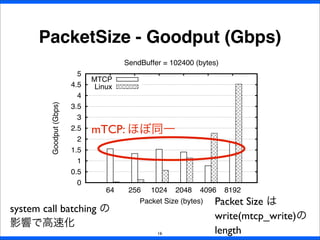

- 16. Packet Size vs. Goodput (Gbps)
  - Figure: goodput (Gbps, axis 0 to 5) against packet size (64, 256, 1024, 2048, 4096, 8192 bytes) for mTCP and Linux, with SendBuffer = 102400 bytes
  - mTCP: nearly the same goodput at every packet size
  - Packet size here is the length passed to write (mtcp_write)
  - The speed-up comes from system call batching

- 17. Buffer Size vs. Goodput (Gbps)
  - Figure: goodput (Gbps, axis 0 to 2.5) against buffer size (2048, 10240, 51200, 102400, 204800, 512000, 1024000 bytes) for mTCP and Linux, with packet size = 64 bytes
  - mTCP: under investigation...
  - Buffer size is set through the send buffer configuration for mTCP and through wmem_max for Linux

- 18. Summary
  - Goodput does not depend on packet length (1.6 Gbps)
  - But this is with a single connection...
  - Usable with about 50 lines of changes!
  - The notorious iperf can be sped up easily!
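
If goodput really stays at about 1.6 Gbps regardless of write length, the number of write calls per second has to scale inversely with that length. The arithmetic below is a sketch under stated assumptions: goodput counts payload bits only, 1 Gbps means 10^9 bits per second, and every write carries exactly the given length. The function name is made up for this example.

```python
def writes_per_second(goodput_gbps: float, write_len_bytes: int) -> float:
    """Write calls per second needed to sustain the given goodput."""
    bits_per_write = 8 * write_len_bytes
    return goodput_gbps * 1e9 / bits_per_write


if __name__ == "__main__":
    # Write lengths taken from the packet-size axis of the goodput plot.
    for length in (64, 256, 1024, 2048, 4096, 8192):
        print(f"{length:5d} bytes: {writes_per_second(1.6, length):12.1f} writes/s")
```

At 64 bytes this comes to roughly three million writes per second on one connection, a rate at which the fixed cost of each call matters a great deal.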

- 19. Discussion
  - Move the complex parts to userspace
  - Microkernel?
  - No need to replace the system
  - And performance improves as well
  - Diagram labels: Application / BSD Socket / network stack / NIC; Application / network stack / NIC / network channel; user / kernel

- 20. Discussion (cont'd)
  - Kernel bypass = shortcut
  - What happens to the functionality that gets skipped?
  - IPv4 only? UDP?
  - Routing table?
  - If used as a middlebox, what about Quagga and the like?

- 21. Library OS
  - Library Operating System (LibOS)
  - An OS that the application can choose
  - End-to-End principle (on OS?)
  - rump [1], MirageOS [2], Drawbridge [3]
  - Isn't it fine by now for at least the network stack to live in userspace?
  - References
    - [1] Kantee. Rump file systems: Kernel code reborn. USENIX ATC 2009.
    - [2] Madhavapeddy et al. Unikernels: library operating systems for the cloud. ASPLOS 2013.
    - [3] Porter et al. Rethinking the library OS from the top down. ASPLOS 2011.
  - Figure (DCE architecture) labels: Application (ip, iptables, quagga); POSIX layer; Kernel layer (ARP, Qdisc, TCP, UDP, DCCP, SCTP, ICMP, IPv4, IPv6, Netlink, Bridging, Netfilter, IPSec, Tunneling; bottom halves/rcu/timer/interrupt; struct net_device); Virtualization Core layer (heap, stack, memory); ns-3 (network simulation core); DCE; ns-3 application; ns-3 TCP/IP stack

- 22. An implementation of LibOS
  - Network Stack in Userspace (NUSE)
  - Direct Code Execution
  - liblinux.so (latest)
  - libfreebsd.so (10.0.0)
  - Everything implemented in the kernel is turned into a library
  - Richer functionality
  - Faster?
  - Poster: "NUSE: Network Stack in Userspace", Hajime Tazaki (NICT, Japan) and Mathieu Lacage (France)
  - Summary: Network Stack in Userspace (NUSE)
    - A framework for the userspace execution of network stacks
    - Based on Direct Code Execution (DCE), designed for the ns-3 network simulator
    - Supports multiple kinds and versions of network stacks implemented for kernel space (currently net-next Linux and freebsd.git are supported)
    - Introduces a distributed validation framework in a single controller (thanks to ns-3)
    - Transparent to existing code (no manual patching of network stacks)
  - Problem Statement on Network Stack Development
    - Diagram: "Infinite Loop on Network Stack Development" between kernel-space and userspace network stack implementations
    - A kernel-space implementation is hard to deploy, so a userspace implementation is desirable
    - Implementing a network stack from scratch is not realistic (620K LOC in ./net-next/net/) and interoperability has to be validated again, so an existing implementation is desirable
  - The Architecture
    - Diagram: (kernel-space) network stack code, compiled into a shared library, dynamically linked to a userspace program
    - Kernel network stacks are compiled into a shared library and linked to applications.
    - Unmodified application code (userspace) and network stack code (kernel-space) are usable.
    - Diagram: a user-space process containing apps (socket/syscall), NUSE top-half, (guest) kernel network stack, and NUSE bottom-half as a library, on top of the (host) kernel in kernel space
    - The top-half provides a transparent interface to applications with system-call redirection.
    - The bottom-half provides a bridge between the userspace network stack and the host operating system.
  - Call trace via NUSE with Linux network stack, from (gdb) bt:
    - #0 sendto () at ../sysdeps/unix/syscall-template.S:82
    - #1 ns3::EmuNetDevice::SendFrom () at ../src/emu/model/emu-net-device.cc:913
    - #2 ns3::EmuNetDevice::Send () at ../src/emu/model/emu-net-device.cc:835
    - #3 ns3::LinuxSocketFdFactory::DevXmit () at ../model/linux-socket-fd-factory.cc:297
    - #4 sim_dev_xmit () at sim/sim.c:290
    - #5 fake_ether_output () at sim/sim-device.c:165
    - #6 arprequest () at freebsd.git/sys/netinet/if_ether.c:271
    - #7 arpresolve () at freebsd.git/sys/netinet/if_ether.c:419
    - #8 fake_ether_output () at sim/sim-device.c:89
    - #9 ip_output () at freebsd.git/sys/netinet/ip_output.c:631
    - #10 udp_output () at freebsd.git/sys/netinet/udp_usrreq.c:1233
    - #11 udp_send () at freebsd.git/sys/netinet/udp_usrreq.c:1580
    - #12 sosend_dgram () at freebsd.git/sys/kern/uipc_socket.c:1115
    - #13 sim_sock_sendmsg () at sim/sim-socket.c:104
    - #14 sim_sock_sendmsg_forwarder () at sim/sim.c:88
    - #15 ns3::LinuxSocketFdFactory::Sendmsg () at ../model/linux-socket-fd-factory.cc:633
    - #16 ns3::LinuxSocketFd::Sendmsg () at ../model/linux-socket-fd.cc:76
    - #17 ns3::LinuxSocketFd::Write () at ../model/linux-socket-fd.cc:44
    - #18 dce_write () at ../model/dce-fd.cc:290
    - #19 write () at ../model/libc-ns3.h:187
    - #20 main () at ../example/udp-client.cc:34
    - #21 ns3::DceManager::DoStartProcess () at ../model/dce-manager.cc:283
    - #22 ns3::TaskManager::Trampoline () at ../model/task-manager.cc:250
    - #23 ns3::UcontextFiberManager::Trampoline () at ../model/ucontext-fiber-manager.cc:1
    - #24 ?? () from /lib64/libc.so.6
    - #25 ?? ()
  - Layer annotations in the poster margin, from frame #0 outward: Host OS raw socket; NUSE bottom half; freebsd.git network stack layer; NUSE top half; syscall emulation layer; application; ns-3 DCE scheduler
  - The kernel network stack code is transparently integrated into the NUSE framework without any modification to the original.
  - Experience
    - Scenario 1) Linux native stack: Apps, Linux (host), NIC
    - Scenario 2) NUSE with Linux stack: Apps, NUSE top-half, linux net-next, NUSE bottom-half, ns3-core, vNIC, Linux (host), NIC
    - Scenario 3) NUSE with FreeBSD stack: Apps, NUSE top-half, freebsd.git, NUSE bottom-half, ns3-core, vNIC, Linux (host), NIC
    - A simple socket-based UDP traffic generator is used in the three scenarios.
    - The development-tree versions of the Linux and FreeBSD kernels are encapsulated by NUSE without modifying the application or the host network stack.
    - Figure: UDP packet generating performance (pps, axis 0 to 20000) over time (1 to 5 sec) for 1) native, 2) Linux NUSE, 3) FreeBSD NUSE
    - Linux NUSE behaves differently from the Linux native stack: the current timer implementation in NUSE is simplified and not enough to emulate the native one accurately.
    - Native and FreeBSD NUSE show similar UDP packet generation.
  - Possible Use-Cases
    - Diagram: a user-space process with a NUSE (guest) network stack; the kernel-space network stack is bypassed (raw sock/tap/netmap) on the way to the NIC
    - Deployment of application-embedded network stacks (e.g., Firefox + NUSE Linux + mptcp).
    - No kernel stack replacement is required, because the host network stack is bypassed.
    - Diagram: multiple network stack instances (processes with NUSE network stacks X, Y, Z) connected through ns-3
    - Debugging of multiple network stacks via the ns-3 network simulator.
    - Validation platform across distributed network entities (like VM multiplexing) with a simple controllable scenario.
  - Related Work
    - Userspace network stack porting: Network Simulation Cradle [6], Daytona [9], Alpine [4], Entrapid [5], MiNet [3]. These need manual patching, which makes it difficult to track the latest version.
    - Virtual machines, operating systems (KVM [8] / Linux Container [1] / User-mode Linux [2]): high-level transparency brings broader compatibility of code (application, network stack), but adds unnecessary workload to application execution.
    - In-kernel userspace execution support: Runnable Userspace Meta Program (RUMP) [7]. No manual patching (already integrated into NetBSD), but lacks integrated operation.
  - Future Directions
    - Userspace binary emulation should
    - A more useful example with features unavailable in current network stacks (e.g., mptcp)
    - Seek performance improvement with the userspace network stack by utilizing multiple processors.
    - Promote merging into the Linux/FreeBSD main trees as a general framework.

- 23. Next Steps
  - net-next-sim (liblinux.so)
  - A userspace stack with functionality equivalent to Linux
  - Select only what is needed with make menuconfig
  - (work in progress)

- 24. Conclusion
  - mTCP: easy and fast!
  - (if faster packet I/O is all you need)
  - Is being fast enough?
  - Aim for fast, feature-rich, and flexible!

- 25. References
  - Jeong et al., mTCP: A Highly Scalable User-level TCP Stack for Multicore Systems, USENIX NSDI, April 2014
  - iperf mTCP version: https://github.com/thehajime/iperf-2.0.5-mtcp
  - PacketShader I/O engine mod (X520): https://github.com/thehajime/mtcp
  - Direct Code Execution (liblinux.so): https://github.com/direct-code-execution/net-next-sim

- 26. Interoperability notes
  - To Linux 3.8.0
    - Basically no problems
    - ARP and static routes are configured in files
  - To Mac OS X (10.9.2), TCPv4 (iperf)
    - A /32 static route cannot be configured...
    - But the connection still reaches Established!

![Slide 2: What is mTCP?](https://image.slidesharecdn.com/2014-05-15-zak-mtcp-140515184030-phpapp02/85/mTCP-2-320.jpg)

![Slide 4: Why Userspace Stack?](https://image.slidesharecdn.com/2014-05-15-zak-mtcp-140515184030-phpapp02/85/mTCP-4-320.jpg)

![Slide 5: Kernel stack performance](https://image.slidesharecdn.com/2014-05-15-zak-mtcp-140515184030-phpapp02/85/mTCP-5-320.jpg)

![Slide 6: mTCP Design](https://image.slidesharecdn.com/2014-05-15-zak-mtcp-140515184030-phpapp02/85/mTCP-6-320.jpg)

![Slide 8: mTCP Design (cont'd)](https://image.slidesharecdn.com/2014-05-15-zak-mtcp-140515184030-phpapp02/85/mTCP-8-320.jpg)

![Slide 10: mTCP performance (64B ping-pong transaction)](https://image.slidesharecdn.com/2014-05-15-zak-mtcp-140515184030-phpapp02/85/mTCP-10-320.jpg)

![Slide 21: Library OS](https://image.slidesharecdn.com/2014-05-15-zak-mtcp-140515184030-phpapp02/85/mTCP-21-320.jpg)

![Slide 22: An implementation of LibOS (NUSE poster)](https://image.slidesharecdn.com/2014-05-15-zak-mtcp-140515184030-phpapp02/85/mTCP-22-320.jpg)