Data Structure & Algorithm using Java

Data Structure & Algorithm using Java, First Edition, by Narayan Sau. Pune, India, 2018.

Data Structure & Algorithm Using Java, First Edition, by Narayan Sau, MCA, MBA

COPYRIGHT INFORMATION

No part of this document may be copied, reproduced, stored in any retrieval system, or transmitted in any form or by any means, whether electronic, mechanical, or otherwise, without prior written permission.

Neo Four Technologies LLP, April 2018. All rights reserved.

Neo Four Technologies LLP, www.neofour.com

Data Structure & Algorithm Using Java, by Narayan Sau

ॐ पूर्णमदः पूर्णमिदं पूर्णात्पूर्णमुदच्यते । पूर्णस्य पूर्णमादाय पूर्णमेवावशिष्यते ॥ ॐ शान्तिः शान्तिः शान्तिः ॥

Om Puurnnam-Adah Puurnnam-Idam Puurnnaat-Puurnnam-Udacyate | Puurnnasya Puurnnam-Aadaaya Puurnnam-Eva-Avashissyate || Om Shaantih Shaantih Shaantih ||

Om. That is complete, this is complete; from completeness comes completeness. If completeness is taken away from completeness, only completeness remains. Om. Peace, peace, peace.

To my wife Sonali and our children, Adithya and Aditi.

Preface

The great learning of my life is this: if you want to make a new contribution, you’ve got to make a new preparation. This book is written with that in mind. It will help students learn and understand data structures and algorithms, and support them as they develop their ideas for products and apps. It should also stand them in good stead when they seek placement in a software product company.

We all know that perfection leaves no room for improvement. So let us set perfection aside and instead concentrate, giving our best attention to everything we do. Let us remember that there is something each of us can do better than anyone else. Let us not compare or compete with others, but only with our inner self. Let us seek approval from within rather than looking for somebody’s nod. Let us find the one best thing in us and try to improve it day by day.

My intention in writing this book is to gather as much of the information freely available on the internet as possible and summarize it in one place, so that students can concentrate on understanding data structures instead of scratching their heads searching for material. This book is made freely available for educational and other non-commercial use only.

I am truly indebted to my reviewer, Manish Chowdhury, whose tireless efforts helped this book see the light of day by eliminating many technical errors, duplications, and spelling mistakes, and by improving its readability.

For any suggestions or comments, please write to the author.

(Narayan Sau)

About the Author

Narayan Sau. Over 23 years of experience in the telecom BSS domain. Expertise in BSS/OSS system integration, test automation, migration, and DevOps. Founder and Director of Neo Four Technologies. MBA from the University of Dallas, Texas, USA.

LinkedIn: https://www.linkedin.com/in/narayansau/

Email: [email protected]

About the Reviewer

Manish Chowdhury. Over 20 years of extensive international IT industry experience in the telecom domain, covering end-to-end IT services including complex IT program and project management, solution design, development, testing, delivery, operations management, leadership, and team building. MBA from the University of Kansas, Kansas, USA.

LinkedIn: https://www.linkedin.com/in/manishc/

Email: [email protected]

Table of Contents

- 1 Introduction to Algorithms and Data Structures
- 1.1 Prerequisites
- 1.2 Role of Algorithm
- 1.2.1 Layman’s terms
- 1.3 Role of a Data Structure
- 1.4 Identifying Techniques for Designing Algorithms
- 1.4.1 Brute Force
- 1.4.2 Greedy Algorithms: “take what you can get now” strategy
- 1.4.3 Divide-and-Conquer, Decrease-and-Conquer
- 1.4.4 Dynamic Programming
- 1.4.5 Transform-and-Conquer
- 1.4.6 Backtracking and branch-and-bound: Generate and test methods
- 1.5 What is a Data Structure
- 1.5.1 Types of Data Structures
- 1.5.2 Dynamic Memory Allocation
- 1.6 Algorithm Analysis
- 1.6.1 Big Oh Notation, O
- 1.6.2 Omega Notation, Ω
- 1.6.3 Theta Notation, Θ
- 1.6.4 How to analyze a program
- 1.6.4.1 Some Mathematical Facts
- 1.7 Random Access Machine model
- 1.8 Abstract Data Type
- 1.8.1 Advantages of Abstract Data Typing
- 1.8.1.1 Encapsulation
- 1.8.1.2 Localization of change
- 1.8.1.3 Flexibility
- 1.9 Arrays & Structure
- 1.9.1 Types of an Array
- 1.9.1.1 One-dimensional array
- 1.9.1.2 Multi-dimensional array
- 1.9.2 Array Declaration
- 1.9.3 Array Initialization
- 1.9.4 Memory allocation
- 1.9.5 Advantages & Disadvantages
- 1.9.5.1 Advantages
- 1.9.5.2 Disadvantages

- 1.9.6 Bound Checking
- 2 Introduction to Stack, Operations on Stack
- 2.1 Stack Representation
- 2.2 Basic Operations
- 2.2.1 peek()
- 2.2.2 isfull()
- 2.2.3 isempty()
- 2.2.4 Push Operation
- 2.2.4.1 Algorithm for Push Operation
- 2.2.5 Pop Operation
- 2.2.5.1 Algorithm for Pop Operation
- 2.2.6 Program on stack link implementation
- 2.2.7 My Stack Array Implementation
- 2.2.8 Evaluation of Expression
- 2.2.9 Infix, Prefix, Postfix
- 2.2.10 Converting Between These Notations
- 2.2.11 Infix to Postfix using Stack
- 2.2.12 Evaluation of Postfix
- 2.2.13 Infix to Prefix using Stack
- 2.2.14 Evaluation of Prefix
- 2.2.15 Prefix to Infix Conversion
- 2.2.16 Tower of Hanoi
- 3 Introduction to Queue, Operations on Queue
- 3.1 Applications of Queue
- 3.2 Array implementation of Queue
- 3.3 Queue Representation
- 3.4 Basic Operations
- 3.4.1 peek()
- 3.4.2 isfull()
- 3.4.3 isempty()
- 3.5 Enqueue Operation
- 3.6 Dequeue Operation
- 3.7 Queue using Linked List
- 3.8 Operations
- 3.8.1 enQueue(value)
- 3.8.2 deQueue()
- 3.8.3 display() - Displaying the elements of Queue
- 3.8.3.1 Abstract Data Type definition of Queue/Dequeue
- 3.8.3.2 Linked List implementation of Queue

- 3.9 Deque
- 3.10 Input Restricted Double Ended Queue
- 3.11 Output Restricted Double Ended Queue
- 3.12 Circular Queue
- 3.12.1 Implementation of Circular Queue
- 3.12.2 enQueue(value) - Inserting value into the Circular Queue
- 3.12.3 deQueue() - Deleting a value from the Circular Queue
- 3.12.4 display() - Displays the elements of a Circular Queue
- 3.13 Priority Queue
- 3.14 Applications of the Priority Queue
- 3.14.1 PriorityQueueSort
- 3.14.1.1 Algorithm
- 3.15 Adaptable Priority Queues
- 3.16 Multiple Queues
- 3.16.1 Method 1: Divide the array in slots of size n/k
- 3.16.2 Method 2: A space efficient implementation
- 3.17 Applications of Queue Data Structure
- 3.18 Applications of Stack
- 3.19 Applications of Queue
- 3.20 Compare the data structures: stack and queue solution
- 3.21 Comparison Chart
- 4 Linked List
- 4.1 Why Linked List?
- 4.2 Singly Linked List
- 4.2.1 Insertion
- 4.2.1.1 Inserting at Beginning of the list
- 4.2.1.2 Inserting at End of the list
- 4.2.1.3 Inserting at Specific location in the list (After a Node)
- 4.2.2 Deletion
- 4.2.2.1 Deleting from Beginning of the list
- 4.2.2.2 Deleting from End of the list
- 4.2.2.3 Deleting a Specific Node from the list
- 4.3 Displaying a Single Linked List
- 4.3.1 Algorithm
- 4.4 Circular Linked List
- 4.4.1 Insertion
- 4.4.1.1 Inserting at Beginning of the list
- 4.4.1.2 Inserting at End of the list

- 4.4.1.3 Inserting at Specific location in the list (After a Node)
- 4.4.2 Deletion
- 4.4.2.1 Deleting from Beginning of the list
- 4.4.2.2 Deleting from End of the list
- 4.4.2.3 Deleting a Specific Node from the list
- 4.4.3 Displaying a circular Linked List
- 4.5 Doubly Linked List
- 4.5.1 Insertion
- 4.5.1.1 Inserting at Beginning of the list
- 4.5.1.2 Inserting at End of the list
- 4.5.1.3 Inserting at Specific location in the list (After a Node)
- 4.5.2 Deletion
- 4.5.2.1 Deleting from Beginning of the list
- 4.5.2.2 Deleting from End of the list
- 4.5.2.3 Deleting a Specific Node from the list
- 4.5.3 Displaying a Double Linked List
- 4.6 ADT Linked List
- 4.7 ADT Doubly Linked List
- 4.8 Doubly Circular Linked List
- 4.9 Insertion in Circular Doubly Linked List
- 4.9.1 Insertion at the end of list or in an empty list
- 4.9.2 Insertion at the beginning of list
- 4.9.3 Insertion in between the nodes of the list
- 4.10 Advantages & Disadvantages of Linked List
- 4.10.1 Advantages of Linked List
- 4.10.1.1 Dynamic Data Structure
- 4.10.1.2 Insertion and Deletion
- 4.10.1.3 No Memory Wastage
- 4.10.1.4 Implementation
- 4.10.2 Disadvantages of Linked List
- 4.10.2.1 Memory Usage
- 4.10.2.2 Traversal
- 4.10.2.3 Reverse Traversing
- 4.11 Operations on Linked list
- 4.11.1 Algorithm for Concatenation
- 4.11.2 Searching
- 4.11.2.1 Iterative Solution
- 4.11.2.2 Recursive Solution
- 4.11.3 Polynomials Using Linked Lists

- 4.11.4 Representation of Polynomial
- 4.11.4.1 Representation of Polynomials using Arrays
- 4.12 Exercise on Linked List
- 5 Sorting & Searching Techniques
- 5.1 What is Sorting
- 5.2 Methods of Sorting (Internal Sort, External Sort)
- 5.3 Sorting Algorithms
- 5.3.1 Bubble Sort
- 5.3.2 Selection sort
- 5.3.2.1 How Selection Sort Works?
- 5.3.3 Insertion sort
- 5.3.4 Merge sort
- 5.3.4.1 How Merge Sort Works?
- 5.3.4.2 Algorithm
- 5.3.5 Quick Sort
- 5.3.5.1 Analysis of Quick Sort
- 5.3.6 Heap Sort
- 5.3.6.1 What is Binary Heap?
- 5.3.6.2 Why array based representation for Binary Heap?
- 5.3.6.3 Heap Sort algorithm for sorting in increasing order
- 5.3.6.4 How to build the heap?
- 5.3.7 Radix Sort
- 5.4 Searching Techniques
- 5.4.1 Linear Search
- 5.4.2 Binary Search
- 5.4.3 Jump Search
- 5.4.3.1 What is the optimal block size to be skipped?
- 5.4.4 Interpolation Search
- 5.4.4.1 Algorithm
- 5.4.5 Exponential Search
- 5.4.5.1 Applications of Exponential Search
- 5.4.6 Sublist Search (search a linked list in another list)
- 5.4.7 Fibonacci Search
- 5.4.7.1 Background
- 5.4.7.2 Observations
- 5.4.7.3 Algorithm
- 5.4.7.4 Time Complexity Analysis
- 6 Trees
- 6.1 Definition of Tree

- 6.2 Tree Terminology
- 6.3 Terminology
- 6.3.1 Root
- 6.3.2 Edge
- 6.3.3 Parent
- 6.3.4 Child
- 6.3.5 Siblings
- 6.3.6 Leaf
- 6.3.7 Internal Nodes
- 6.3.8 Degree
- 6.3.9 Level
- 6.3.10 Height
- 6.3.11 Depth
- 6.3.12 Path
- 6.3.13 Sub Tree
- 6.4 Types of Tree
- 6.4.1 Binary Trees
- 6.4.2 Binary Tree implementation in Java
- 6.5 Binary Search Trees
- 6.5.1 Adding a value
- 6.5.1.1 Search for a place
- 6.5.1.2 Insert a new element to this place
- 6.5.2 Deletion
- 6.6 Heaps and Priority Queues
- 6.6.1 Heaps
- 6.6.1.1 Heap Implementation
- 6.6.2 Priority Queue
- 6.6.2.1 Performance of Adaptable Priority Queue Implementations
- 6.7 AVL Trees
- 6.7.1 Definition of an AVL tree
- 6.7.2 Insertion
- 6.7.3 AVL Rotations
- 6.7.3.1 Left Rotation
- 6.7.3.2 Right Rotation
- 6.7.3.3 Left-Right Rotation
- 6.7.3.4 Right-Left Rotation
- 6.7.4 AVL Tree | Set 1 (Insertion)
- 6.7.5 Why AVL Trees?
- 6.7.6 Insertion

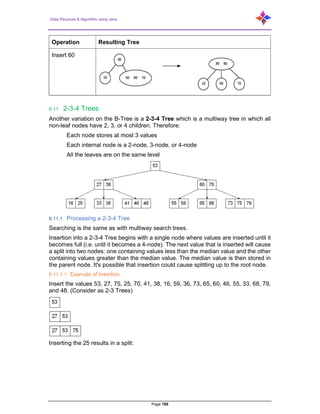

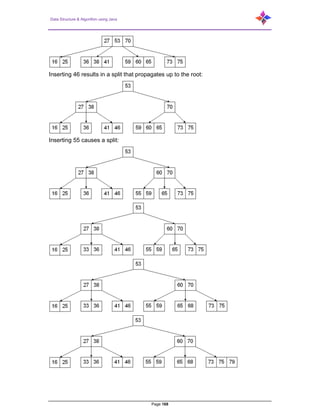

- 6.7.7 Steps to follow for insertion
- 6.7.7.1 Left Left Case
- 6.7.7.2 Left Right Case
- 6.7.7.3 Right Right Case
- 6.7.7.4 Right Left Case
- 6.7.8 Insertion Examples
- 6.8 B-Trees (or general m-way search trees)
- 6.8.1 Operations on a B-Tree
- 6.8.1.1 Search Operation in B-Tree
- 6.8.2 Insertion Operation in B-Tree
- 6.8.2.1 Example of Insertion
- 6.8.3 Deletion Operation in B-Tree
- 6.8.3.1 Deleting a Key – Case 1
- 6.8.3.2 Deleting a Key – Cases 2a, 2b
- 6.8.3.3 Deleting a Key – Case 2c
- 6.8.3.4 Deleting a Key – Case 3b-1
- 6.8.3.5 Deleting a Key – Case 3b-2
- 6.8.3.6 Deleting a Key – Case 3a
- 6.9 B+ Trees
- 6.9.1 Order of a B+ tree
- 6.9.2 Properties of a B+ Tree of Order m
- 6.10 2-3 Trees
- 6.10.1 Insertion
- 6.11 2-3-4 Trees
- 6.11.1 Processing a 2-3-4 Tree
- 6.11.1.1 Example of Insertion
- 6.11.1.2 Deletion from a 2-3-4 Tree
- 6.11.2 Storage
- 6.12 Red-Black Trees
- 6.12.1 Processing Red-Black Trees
- 6.12.1.1 Insertion
- 6.13 Applications of Binary Search Trees
- 6.13.1 Binary Search Tree
- 6.13.2 Binary Space Partition
- 6.13.3 Binary Tries
- 6.13.4 Hash Trees
- 6.13.5 Heaps
- 6.13.6 Huffman Coding Tree (Chip Uni)
- 6.13.7 GGM Trees

- 6.13.8 Syntax Tree
- 6.13.9 Treap
- 6.13.10 T-tree
- 6.14 Types of Binary Trees
- 6.14.1 Full Binary Tree
- 6.14.2 Complete Binary Tree
- 6.14.3 Perfect Binary Tree
- 6.14.4 Balanced Binary Tree
- 6.14.5 A degenerate (or pathological) tree
- 6.15 Representation of Binary Tree
- 6.16 Array Implementation of Binary Tree
- 6.17 Encoding Messages Using a Huffman Tree
- 6.17.1 Generating Huffman Trees
- 6.17.2 Representing Huffman Trees
- 6.17.2.1 The decoding procedure
- 6.17.2.2 Exercises
- 7 Map
- 7.1 Java Map Interface
- 7.1.1 Useful methods of Map interface
- 7.2 Java LinkedHashMap class
- 7.3 Hash Table
- 7.3.1 Hash function
- 7.3.2 Need for a good hash function
- 7.3.3 Collision resolution techniques
- 7.3.3.1 Separate chaining (open hashing)
- 7.3.3.2 Separate Chaining
- 7.4 The Set ADT
- 7.5 Linear Probing and Its Variants
- 8 Graphs
- 8.1 What is a Graph?
- 8.2 Graph Terminology
- 8.3 Representation
- 8.3.1 Adjacency Matrix
- 8.4 Adjacency List
- 8.5 Graph and its representations
- 8.6 Graph Representation
- 8.6.1 Adjacency Matrix
- 8.6.2 Adjacency List

- 8.7 Data Structures for Graphs
- 8.8 Traversing a Graph
- 8.8.1 Connectivity
- 8.8.1.1 Example 1
- 8.8.1.2 Example 2
- 8.8.2 Cut Vertex
- 8.8.2.1 Example
- 8.8.3 Cut Edge (Bridge)
- 8.8.3.1 Example
- 8.8.4 Cut Set of a Graph
- 8.8.4.1 Example
- 8.8.5 Edge Connectivity
- 8.8.5.1 Example
- 8.8.6 Vertex Connectivity
- 8.8.6.1 Example 1
- 8.8.6.2 Example 2
- 8.8.7 Line Covering
- 8.8.7.1 Example
- 8.8.8 Minimal Line Covering
- 8.8.8.1 Example
- 8.8.9 Minimum Line Covering
- 8.8.9.1 Example
- 8.8.10 Vertex Covering
- 8.8.10.1 Example
- 8.8.11 Minimal Vertex Covering
- 8.8.11.1 Example
- 8.8.12 Minimum Vertex Covering
- 8.8.12.1 Example
- 8.8.13 Matching
- 8.8.13.1 Example
- 8.8.14 Maximal Matching
- 8.8.14.1 Example
- 8.8.15 Maximum Matching
- 8.8.15.1 Example
- 8.8.16 Perfect Matching
- 8.8.16.1 Example
- 8.8.16.2 Example
- 8.8.16.3 Example
- 8.9 Independent Line Set
- 8.9.1 Example

- 8.10 Maximal Independent Line Set
- 8.10.1 Example
- 8.11 Maximum Independent Line Set
- 8.11.1 Example
- 8.12 Independent Vertex Set
- 8.12.1 Example
- 8.13 Maximal Independent Vertex Set
- 8.13.1 Example
- 8.14 Maximum Independent Vertex Set
- 8.14.1 Example
- 8.14.2 Example
- 8.15 Vertex Colouring
- 8.16 Chromatic Number
- 8.16.1 Example
- 8.17 Region Colouring
- 8.17.1 Example
- 8.18 Applications of Graph Colouring
- 8.19 Isomorphic Graphs
- 8.19.1 Example
- 8.20 Planar Graphs
- 8.20.1 Example
- 8.21 Regions
- 8.21.1 Example
- 8.22 Homomorphism
- 8.23 Polyhedral graph
- 8.24 Euler’s Path
- 8.25 Euler’s Circuit
- 8.26 Euler’s Circuit Theorem
- 8.27 Hamiltonian Graph
- 8.28 Hamiltonian Path
- 8.29 Graph traversal
- 8.29.1 Depth-first search
- 8.29.1.1 Pseudocode
- 8.29.2 Breadth-first search
- 8.29.2.1 Pseudocode
- 8.29.3 DFS other way (Depth First Search)
- 8.29.4 BFS other way (Breadth First Search)
- 8.30 Application of Graph

- 8.31 Spanning Trees
- 8.32 Circuit Rank
- 8.33 Kirchhoff’s Theorem
- 9 Examples of Graph
- 9.1 Example 1
- 9.1.1 Solution
- 9.2 Example 2
- 9.2.1 Solution
- 9.3 Example 3
- 9.3.1 Solution
- 9.4 Example 4
- 9.4.1 Solution
- 9.5 Example 5
- 9.5.1 Solution
- 9.6 Example 6
- 9.6.1 Solution
- 9.7 Prim’s Algorithm
- 9.7.1 Proof of Correctness of Prim's Algorithm
- 9.7.2 Implementation of Prim's Algorithm
- 9.8 Kruskal’s Algorithm
- 9.8.1 Greedy algorithm
- 9.8.2 Proof of Correctness of Kruskal's Algorithm
- 9.8.3 An illustration of Kruskal's algorithm
- 9.8.4 Program
- 9.8.5 Implementation
- 9.8.6 Running Time of Kruskal's Algorithm
- 9.8.7 Program
- 9.8.8 Implementation
- 9.8.9 Running Time of Kruskal's Algorithm
- 10 Complexity Theory
- 10.1 Undecidable Problems
- 10.2 P Class Problems
- 10.3 NP Class Problems
- 10.4 NP-Complete Problems
- 10.5 NP-Hard

1 Introduction to Algorithms and Data Structures

1.1 Prerequisites

In presenting this book on data structures and algorithms, it is assumed that the reader has basic familiarity with at least one high-level language such as Java, Python, or C/C++. At the very least, the reader is expected to know:

- Variables and expressions
- Methods
- Conditionals and loops
- Arrays and ADTs (abstract data types)

1.2 Role of Algorithm

A program is a collection of instructions that performs a specific task when executed. A part of a computer program that performs a well-defined task is known as an algorithm. Let us concentrate on algorithms, which underpin much of today's computer programming.

There are many steps involved in writing a computer program to solve a given problem. The steps go from problem formulation and specification, to design of the solution, to implementation, testing and documentation, and finally to evaluation of the solution.

The word algorithm comes from the name of the 9th-century Persian and Muslim mathematician Abu Abdullah Muhammad ibn Musa Al-Khwarizmi. He was a mathematician, astronomer, and geographer during the Abbasid Caliphate, and a scholar in the House of Wisdom in Baghdad.

1.2.1 Layman's terms

An algorithm is a procedure to get things done. More precisely, an algorithm is a procedure to solve a problem in mathematical terms; it is the mathematical counterpart of a program. Studying algorithms:

- is essential for building systems that are efficient in time and space, and for developing one's problem-solving skills;
- provides the right set of techniques for data handling;
- helps you compare the efficiency of different approaches to a problem;
- is an important part of every technical interview round.

Once an algorithm is known, it can be implemented in any programming language. Conversely, every program has an underlying algorithm that dictates how it works.

An algorithm, as defined by Knuth, must have the following properties:

- Finiteness.
An algorithm must always terminate after a finite number of steps.

- Definiteness. Each step of an algorithm must be precisely defined down to the last detail; the action to be carried out must be rigorously and unambiguously specified for each case.
- Input. An algorithm has zero or more inputs.
- Output. An algorithm has one or more outputs.
- Effectiveness. An algorithm is also generally expected to be effective, in the sense that its operations must all be sufficiently basic that they can, in principle, be done exactly and in a finite length of time by someone using pencil and paper.

Let us explain with an example. Consider the simple problem of finding the G.C.D. (H.C.F.) of two positive integers m and n, where n < m (Euclid's algorithm):

- E1. [Find remainder.] Divide m by n and let r be the remainder (0 ≤ r < n).
- E2. [Is it zero?] If r = 0, the algorithm terminates; n is the answer.
- E3. [Reduce.] Set m = n and n = r, and go back to step E1.

The algorithm terminates after a finite number of steps, because the remainder r is always smaller than n, so n strictly decreases on every pass through E3 and can never drop below zero [Finiteness]. All three steps are precisely defined, and the actions they carry out are rigorous and unambiguous [Definiteness]. It has two inputs, m and n [Input], and one output, the final value of n [Output]. Also, the algorithm is effective in the sense that its operations can be done in a finite length of time [Effectiveness].

1.3 Role of a Data Structure

A problem can often be solved by several algorithms, so we need to choose the one that provides maximum efficiency, i.e., uses the minimum time and the minimum memory. This is where data structures come into the picture, because how efficiently an algorithm runs depends on how its data is organized.

Data structure is the art of structuring data in computer memory in such a way that the required operations can be performed efficiently. Data can be organized in many ways; therefore, you can create as many data structures as you want. However, some standard data structures have proved useful over the years. These include arrays, linked lists, stacks, queues, trees, and graphs. We will learn more about these data structures in the subsequent sections.
All these data structures are designed to hold a collection of data items. The difference lies in how the data items are arranged with respect to each other and in the operations they allow. Because of these differences in arrangement, some data structures prove more efficient than others in solving a given problem.
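Returning to the G.C.D. example in Section 1.2, steps E1 to E3 translate almost line for line into Java. The following is a minimal sketch; the class name `EuclidGcd` and the check that both inputs are positive are our own additions, not part of the algorithm as stated.

```java
/** A minimal sketch of Euclid's G.C.D. algorithm, following steps E1 to E3. */
public class EuclidGcd {

    // Assumes m and n are positive integers. The text also assumes n < m,
    // but the loop works for either order: if n > m, the first pass simply swaps them.
    static int gcd(int m, int n) {
        if (m <= 0 || n <= 0) {
            throw new IllegalArgumentException("m and n must be positive");
        }
        while (true) {
            int r = m % n;      // E1: find the remainder, 0 <= r < n
            if (r == 0) {       // E2: is it zero?
                return n;       //     yes: n is the answer
            }
            m = n;              // E3: reduce, then go back to E1
            n = r;
        }
    }

    public static void main(String[] args) {
        System.out.println(gcd(544, 119)); // prints 17
        System.out.println(gcd(48, 18));   // prints 6
    }
}
```

Tracing `gcd(544, 119)` gives the remainders 68, 51, 17 and finally 0, so the answer is 17; each remainder is smaller than the previous divisor, which is exactly the finiteness argument above.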

1.4 Identifying Techniques for Designing Algorithms

Real-world problems call for real-world solutions, so there is no single systematic method that designs an algorithm for every problem. Still, some well-known techniques have proved quite useful in designing algorithms. We follow these methods because:

- They provide templates suited to solving a broad range of diverse problems.
- They can be translated into the common control and data structures provided by most high-level languages.
- The temporal and spatial requirements of the resulting algorithms can be precisely analyzed.

Although more than one technique may be applicable to a specific problem, it is often the case that an algorithm constructed by one approach is clearly superior to equivalent solutions built using alternative techniques.

1.4.1 Brute Force

Brute force is a straightforward approach to solving a problem, based directly on the problem statement and the definitions of the concepts involved. It is considered one of the easiest approaches to apply and is useful for solving small instances of a problem. Some examples of brute force algorithms are:

- Computing $a^n$ ($a > 0$, $n$ a nonnegative integer) by multiplying $a \cdot a \cdots a$
- Computing $n!$
- Selection sort
- Bubble sort
- Sequential search
- Exhaustive search: Travelling Salesman Problem, Knapsack problem

1.4.2 Greedy Algorithms

The greedy strategy can be summed up as "take what you can get now." The greedy approach is an algorithm design technique that selects the best available option at each step. Algorithms based on the greedy approach are used for solving optimization problems, where you need to maximize profits or minimize costs under a given set of conditions. Some examples of optimization problems are:

- Finding the shortest distance from an originating city to a set of destination cities, given the distances between the pairs of cities.
- Finding the minimum number of currency notes required for an amount, where an arbitrary number of notes of each denomination is available.
- Selecting items with maximum value from a given set of items, where the total weight of the selected items cannot exceed a given value.

Consider an example where you must fill a bag of 10 kg capacity by selecting items from a set of items whose weights and values are given in the following table:

| Item | Weight (kg) | Value ($/kg) | Total value ($) |
|---|---|---|---|
| A | 2 | 200 | 400 |
| B | 3 | 150 | 450 |
| C | 4 | 200 | 800 |
| D | 1 | 50 | 50 |
| E | 5 | 100 | 500 |

A greedy algorithm acts greedily: here, it selects the item with the maximum total value at each stage. Therefore, item C, with a total value of $800 and a weight of 4 kg, is selected first. Next, item E, with a total value of $500 and a weight of 5 kg, is selected. The next item with the highest value is item B, with a total value of $450 and a weight of 3 kg. However, if this item is selected, the total weight of the selected items will be 12 kg (4 + 5 + 3), which is more than the capacity of the bag. Therefore, we discard item B and search for the item with the next highest value. That is item A, with a total value of $400 and a weight of 2 kg. However, this item also cannot be selected, because the total weight would then be 11 kg (4 + 5 + 2). Now only one item is left: item D, with a total value of $50 and a weight of 1 kg. This item can be selected, as it makes the total weight exactly 10 kg. The selected items and their total weights are listed in the following table:

| Item | Weight (kg) | Total value ($) |
|---|---|---|
| C | 4 | 800 |
| E | 5 | 500 |
| D | 1 | 50 |
| Total | 10 | 1350 |

Items selected using the greedy approach

For many problems, greedy algorithms fail to find the globally optimal solution, because they do not examine all the data exhaustively. They can commit to certain choices too early, which prevents them from finding the best overall solution later. This can be seen in the preceding example, where the greedy algorithm selects items with a total value of only $1350. If, however, the items were selected as shown in the following table, the total value would be much greater, with the weight still only 10 kg.

| Item | Weight (kg) | Total value ($) |
|---|---|---|
| C | 4 | 800 |
| B | 3 | 450 |
| A | 2 | 400 |
| D | 1 | 50 |
| Total | 10 | 1700 |

Optimal selection of items

In the preceding example, you can observe that the greedy approach commits to item E very early, which prevents it from finding the best overall solution later. Nevertheless, the greedy approach is useful because it is quick and easy to implement, and it often gives a good approximation of the optimal value.

1.4.3 Divide-and-Conquer, Decrease-and-Conquer

Given an instance of the problem to be solved, split it into several smaller sub-instances (of the same problem), solve each sub-instance independently, and then combine the sub-instance solutions to yield a solution for the original instance. With the divide-and-conquer method, the size of the problem instance is reduced by a factor (e.g., half the input size), while with the decrease-and-conquer method, the size is reduced by a constant. When we use recursion, the solution of the minimal instance is called the "terminating condition" (base case).

Examples of divide-and-conquer:

- Computing $a^n$ ($a > 0$, $n$ a nonnegative integer) by recursion
- Binary search in a sorted array (recursion)
- Merge sort algorithm, Quick sort algorithm (recursion)
- The algorithm for solving the fake coin problem (recursion)

Examples of decrease-and-conquer:

- Insertion sort
- Topological sorting
- Binary tree traversals: inorder, preorder and postorder (recursion)
- Computing the length of the longest path in a binary tree (recursion)
- Computing Fibonacci numbers (recursion)
- Reversing a queue (recursion)
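To make the divide-and-conquer pattern concrete, here is a minimal Java sketch of one of the listed examples, binary search in a sorted array. Each recursive call keeps only the half of the range that can still contain the key, and an empty range is the terminating condition. The class and method names are illustrative; for production code, the standard library already offers `java.util.Arrays.binarySearch`.

```java
/** A minimal sketch of divide-and-conquer: recursive binary search on a sorted int array. */
public class BinarySearchDemo {

    // Returns an index of key in the sorted (ascending) array a, or -1 if key is absent.
    static int binarySearch(int[] a, int key) {
        return search(a, key, 0, a.length - 1);
    }

    // Searches a[lo..hi] inclusive. Each call halves the range, so about log2(n) calls suffice.
    private static int search(int[] a, int key, int lo, int hi) {
        if (lo > hi) {
            return -1;                              // terminating condition: empty range
        }
        int mid = lo + (hi - lo) / 2;               // middle index, written to avoid int overflow
        if (a[mid] == key) {
            return mid;
        } else if (key < a[mid]) {
            return search(a, key, lo, mid - 1);     // key can only be in the left half
        } else {
            return search(a, key, mid + 1, hi);     // key can only be in the right half
        }
    }

    public static void main(String[] args) {
        int[] sorted = {2, 5, 8, 12, 16, 23, 38, 56, 72, 91};
        System.out.println(binarySearch(sorted, 23)); // prints 5
        System.out.println(binarySearch(sorted, 7));  // prints -1
    }
}
```

Computing `mid` as `lo + (hi - lo) / 2` instead of `(lo + hi) / 2` keeps the sum from overflowing `int` on very large arrays; the result is the same otherwise.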

1.4.4 Dynamic Programming

One disadvantage of divide-and-conquer is that recursively solving separate sub-instances can result in the same computations being performed repeatedly, since identical sub-instances may arise. The idea behind dynamic programming is to avoid this pathology by never calculating the same quantity twice. The method usually accomplishes this by maintaining a table of sub-instance results.

Dynamic programming is usually a bottom-up technique: the smallest sub-instances are explicitly solved first, and their results are used to construct solutions to progressively larger sub-instances. In contrast, divide-and-conquer is a top-down technique, which logically progresses from the initial instance down to the smallest sub-instances via intermediate sub-instances.

1.4.5 Transform-and-Conquer

These methods work as two-stage procedures. First, the problem is modified to be more amenable to solution; in the second stage, the modified problem is solved. Many problems involving lists are easier when the list is sorted, for example:

- Searching
- Computing the median (selection problem)
- Checking whether all elements are distinct (element uniqueness)

Pre-sorting is also used in many geometric algorithms. The efficiency of algorithms that involve sorting depends on the efficiency of the sorting step.

1.4.6 Backtracking and branch-and-bound: generate-and-test methods

These methods are used for state-space search problems, that is, problems whose representation consists of:

- An initial state
- One or more goal states
- A set of intermediate states
- A set of operators that transform one state into another; each operator has pre-conditions and post-conditions
- A cost function, which evaluates the cost of the operations (optional)
- A utility function, which evaluates how close a given state is to the goal state (optional)

Example: You are given two jugs, a 4-gallon one and a 3-gallon one.
Neither have any measuring markers on it. There is a tap that can be used to fill the jugs with water. How can you get exactly 2 gallons of water into the 4-gallon jug? Description Pre-conditions on (X, Y) Action (Post-conditions)
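The repeated computation that motivates dynamic programming is easy to observe with Fibonacci numbers, which Section 1.4.3 lists as a recursive example. The sketch below, with hypothetical names, compares plain top-down recursion against a bottom-up table; the static counter `calls` exists only to expose how many recursive calls the top-down version makes.

```java
// Fibonacci numbers: top-down recursion versus bottom-up dynamic programming.
class FibonacciDemo {

    static long calls = 0;   // counts recursive calls to expose repeated work

    // Plain recursion: fib(n-1) and fib(n-2) share sub-instances, which are recomputed.
    static long fibRecursive(int n) {
        calls++;
        if (n < 2) return n;                     // terminating condition: F(0)=0, F(1)=1
        return fibRecursive(n - 1) + fibRecursive(n - 2);
    }

    // Dynamic programming: fill a table from the smallest sub-instances upward,
    // so every F(i) is computed exactly once.
    static long fibBottomUp(int n) {
        if (n < 2) return n;
        long[] table = new long[n + 1];
        table[0] = 0;
        table[1] = 1;
        for (int i = 2; i <= n; i++) {
            table[i] = table[i - 1] + table[i - 2];
        }
        return table[n];
    }

    public static void main(String[] args) {
        int n = 30;
        System.out.println(fibBottomUp(n));      // 832040
        calls = 0;
        System.out.println(fibRecursive(n));     // 832040
        System.out.println(calls);               // 2692537
    }
}
```

The table version performs n - 1 additions, while the recursive version makes 2F(n+1) - 1 calls, a count that grows exponentially with n.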

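A minimal sketch of transform-and-conquer by pre-sorting, assuming an `int[]` input. Stage one sorts a copy with `java.util.Arrays.sort`; stage two solves the easier problem on the sorted data. Element uniqueness becomes a single scan for equal neighbours, and the median becomes an index lookup. The class and method names are illustrative, and returning the lower median for even-length input is an assumption of this sketch.

```java
import java.util.Arrays;

// Transform-and-conquer by pre-sorting.
class PresortDemo {

    // Stage 1 (transform): sort a copy so the caller's array is left unchanged.
    private static int[] sortedCopy(int[] values) {
        int[] sorted = Arrays.copyOf(values, values.length);
        Arrays.sort(sorted);
        return sorted;
    }

    // Element uniqueness: after sorting, equal elements are adjacent,
    // so one linear scan of neighbours is enough.
    static boolean allDistinct(int[] values) {
        int[] sorted = sortedCopy(values);
        for (int i = 1; i < sorted.length; i++) {
            if (sorted[i] == sorted[i - 1]) return false;
        }
        return true;
    }

    // Selection: after sorting, the (lower) median is simply the middle element.
    static int median(int[] values) {
        if (values.length == 0) throw new IllegalArgumentException("empty input");
        int[] sorted = sortedCopy(values);
        return sorted[(sorted.length - 1) / 2];
    }

    public static void main(String[] args) {
        System.out.println(allDistinct(new int[]{7, 3, 9, 1}));   // true
        System.out.println(allDistinct(new int[]{7, 3, 9, 3}));   // false
        System.out.println(median(new int[]{7, 3, 9, 1, 5}));     // 5
    }
}
```

The overall cost is dominated by the sort (O(n log n) for the usual comparison-based sorts), compared with O(n^2) for checking every pair of elements directly.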
- 23.

| Operator | Description | Pre-conditions on (X, Y) | Action (Post-conditions) |
|---|---|---|---|
| O1 | Fill A | X < 4 | (4, Y) |
| O2 | Fill B | Y < 3 | (X, 3) |
| O3 | Empty A | X > 0 | (0, Y) |
| O4 | Empty B | Y > 0 | (X, 0) |
| O5 | Pour A into B | X > 3 - Y | (X + Y - 3, 3) |
| | | X ≤ 3 - Y | (0, X + Y) |
| O6 | Pour B into A | Y > 4 - X | (4, X + Y - 4) |
| | | Y ≤ 4 - X | (X + Y, 0) |

1.5 What is a Data Structure?

A data structure is a specialized format for organizing and storing data. General data structure types include the array, the file, the record, the table, the tree, and so on. Any data structure is designed to organize data to suit a specific purpose so that it can be accessed and worked with in appropriate ways. In computer programming, a data structure may be selected or designed to store data so that various algorithms can work on it.

1.5.1 Types of Data Structures

The following are the various types of data structures.

Primitive types:
- Boolean: true or false
- Character
- Floating-point: single-precision real number values
- Double: a wider floating-point size
- Integer: integral or fixed-precision values
- String: a sequence of characters
- Reference (also called a pointer or handle): a small value referring to another object's address in memory, possibly a much larger one
- Enumerated type: a small set of uniquely named values

Composite (non-primitive) types:
- Array
- Record (also called tuple or structure)
- Union
- Tagged union (also called variant, variant record, discriminated union, or disjoint union)
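The two-jug problem from Section 1.4.6 can be solved by a generate-and-test search over the states (X, Y), applying operators O1 to O6 from the operator table. This sketch uses breadth-first search, which is one possible search strategy rather than backtracking or branch-and-bound specifically; because it explores states level by level, the first goal state it reaches has the fewest moves. The class name, the goal test X == 2, and the extra guards on the pour operators are assumptions of this sketch.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Breadth-first state-space search for the two-jug problem.
// State (X, Y): gallons in jug A (capacity 4) and jug B (capacity 3).
class WaterJugSearch {
    static final int CAP_A = 4, CAP_B = 3;

    // Applies operators O1..O6; an operator is used only if its pre-condition holds.
    // The extra guards on O5/O6 (source not empty, target not full) are an added
    // assumption that skips moves which would leave the state unchanged.
    static List<int[]> successors(int x, int y) {
        List<int[]> next = new ArrayList<>();
        if (x < CAP_A) next.add(new int[]{CAP_A, y});                   // O1: fill A
        if (y < CAP_B) next.add(new int[]{x, CAP_B});                   // O2: fill B
        if (x > 0) next.add(new int[]{0, y});                           // O3: empty A
        if (y > 0) next.add(new int[]{x, 0});                           // O4: empty B
        if (x > 0 && y < CAP_B) {                                       // O5: pour A into B
            next.add(x > CAP_B - y ? new int[]{x + y - CAP_B, CAP_B} : new int[]{0, x + y});
        }
        if (y > 0 && x < CAP_A) {                                       // O6: pour B into A
            next.add(y > CAP_A - x ? new int[]{CAP_A, x + y - CAP_A} : new int[]{x + y, 0});
        }
        return next;
    }

    // Returns the shortest list of states from (0, 0) to a state with X == goal,
    // or an empty list if no such state is reachable.
    static List<int[]> solve(int goal) {
        int[][] parent = new int[CAP_A + 1][CAP_B + 1];   // encoded parent state, -1 = unvisited
        for (int[] row : parent) Arrays.fill(row, -1);
        ArrayDeque<int[]> queue = new ArrayDeque<>();
        parent[0][0] = 0;                                  // the initial state is its own parent
        queue.add(new int[]{0, 0});
        while (!queue.isEmpty()) {
            int[] s = queue.poll();
            if (s[0] == goal) {
                List<int[]> path = new ArrayList<>();
                int x = s[0], y = s[1];
                while (true) {                             // walk parent links back to (0, 0)
                    path.add(new int[]{x, y});
                    if (x == 0 && y == 0) break;
                    int p = parent[x][y];
                    x = p / (CAP_B + 1);
                    y = p % (CAP_B + 1);
                }
                Collections.reverse(path);
                return path;
            }
            for (int[] t : successors(s[0], s[1])) {
                if (parent[t[0]][t[1]] == -1) {            // visit each state at most once
                    parent[t[0]][t[1]] = s[0] * (CAP_B + 1) + s[1];
                    queue.add(t);
                }
            }
        }
        return Collections.emptyList();
    }

    public static void main(String[] args) {
        StringBuilder sb = new StringBuilder();
        for (int[] s : solve(2)) {
            if (sb.length() > 0) sb.append(" -> ");
            sb.append("(").append(s[0]).append(", ").append(s[1]).append(")");
        }
        System.out.println(sb);
        // (0, 0) -> (4, 0) -> (1, 3) -> (1, 0) -> (0, 1) -> (4, 1) -> (2, 3)
    }
}
```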

- 24.

Abstract data types:
- Container
- List
- Associative array
- Multimap
- Set
- Multiset (Bag)
- Stack
- Queue
- Double-ended queue
- Priority queue
- Tree
- Graph

Linear data structures:
- Arrays
- Types of lists

Trees:
- Binary trees
- B-trees
- Heaps
- Trees
- Multi-way trees
- Space-partitioning trees
- Application-specific trees

Hashes

Graphs

1.5.2 Dynamic Memory Allocation

Dynamic memory allocation is when an executing program requests that the operating system give it a block of main memory. The program then uses this memory for some purpose, usually to add a node to a data structure. In object-oriented languages, dynamic memory allocation is used to obtain the memory for a new object. This memory comes from the heap, the region above the static part of the data segment.

Programs may request memory and may also return previously allocated memory whenever it is no longer needed. Memory can be returned in any order, without any relation to the order in which it was allocated. As a result, the heap may develop "holes": blocks of returned memory lying between blocks that are still in use.
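In Java, the dynamic allocation described above happens whenever `new` creates an object: the memory comes from the heap, and it stays allocated as long as something still refers to it. Java has no explicit call for returning memory; the garbage collector reclaims objects once they become unreachable. The sketch below uses a hypothetical `Node` class to add nodes to a linked list, and a hypothetical `makeSquares` method to allocate an array whose size is known only at run time.

```java
// Objects created with `new` live on the heap, so they outlive the method that made them.
class DynamicAllocationDemo {

    // A singly linked list node; each `new Node(...)` requests heap memory.
    static final class Node {
        final int value;
        Node next;
        Node(int value, Node next) { this.value = value; this.next = next; }
    }

    // Adds a node at the front of the list: the typical "add a node" use of allocation.
    static Node pushFront(Node head, int value) {
        return new Node(value, head);
    }

    // The array length k is known only at run time; the array survives after this method returns.
    static int[] makeSquares(int k) {
        int[] items = new int[k];
        for (int i = 0; i < k; i++) items[i] = i * i;
        return items;
    }

    // Counts the nodes reachable from head.
    static int length(Node head) {
        int n = 0;
        for (Node p = head; p != null; p = p.next) n++;
        return n;
    }

    public static void main(String[] args) {
        Node head = null;
        for (int v = 1; v <= 3; v++) head = pushFront(head, v);
        System.out.println(head.value + " " + length(head));   // 3 3
        int[] squares = makeSquares(5);
        System.out.println(squares[4]);                        // 16
        head = null;   // no references remain, so the nodes become eligible for garbage collection
    }
}
```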

- 25.

A new dynamic request for memory might be given a range of addresses out of one of the holes. If it does not use up the entire hole, further dynamic requests might be satisfied from the rest of the same hole. If too many small holes develop, memory is wasted: the total memory in the holes may be large, but the individual holes are too small to satisfy dynamic requests. This situation is called memory fragmentation. Keeping track of allocated and deallocated memory is complicated; a modern operating system does all this.

Memory for an object can also be allocated dynamically during a method's execution by using Java's built-in new operator. For example, the following Java statement creates an array of integers whose size is given by the value of the variable k:

int[] items = new int[k];

The size of this array is known only at run time. Moreover, the array may continue to exist even after the method that created it terminates. Thus, the memory for this array cannot be allocated on the Java stack.

1.6 Algorithm Analysis

The analysis of algorithms is the determination of their computational complexity, i.e. the amount of time, storage and/or other resources necessary to execute them. Usually, this involves determining a function that relates the length of an algorithm's input to the number of steps it takes (its time complexity) or the number of storage locations it uses (its space complexity). An algorithm is said to be efficient when this function's values are small. Since different inputs of the same length may cause the algorithm to behave differently, the function describing its performance is usually an upper bound on the actual performance, determined from the worst-case inputs to the algorithm.

A Priori Analysis: a theoretical analysis of an algorithm. Efficiency is measured by assuming that all other factors, for example processor speed, are constant and have no effect on the implementation.

A Posteriori Analysis: an empirical analysis of an algorithm. The selected algorithm is implemented in a programming language and executed on the target computer, and actual statistics such as running time and space required are collected.

Time Factor: time is measured by counting the number of key operations, such as comparisons in a sorting algorithm.

Space Factor: space is measured by counting the maximum memory space required by the algorithm.

In general, the running time of an algorithm or data structure method increases with the input size, although it may also vary for different inputs of the same size. The running time is also affected by the hardware environment (processor, clock rate, memory, disk, etc.) and the software environment (operating system, programming language, compiler, interpreter, etc.) in which the algorithm is implemented, compiled, and executed. All other factors being equal, the running time of the same algorithm on the same input data will be smaller if the computer has, say, a much faster processor or if the implementation is done in a program compiled into

- 26.

native machine code instead of an interpreted implementation run on a virtual machine. Despite the possible variations that come from different environmental factors, we would like to focus on the relationship between the running time of an algorithm and the size of its input.

We are interested in characterizing an algorithm's running time as a function of the input size. But what is the proper way of measuring it? Usually, the time required by an algorithm falls under three cases:
- Best Case: minimum time required for program execution.
- Average Case: average time required for program execution.
- Worst Case: maximum time required for program execution.

The following asymptotic notations are commonly used to express the running-time complexity of an algorithm:
- O notation
- Ω notation
- Θ notation

1.6.1 Big-Oh Notation, O

The notation O(n) is the formal way to express an upper bound on an algorithm's running time. It is commonly used to state the worst-case time complexity, i.e. the longest amount of time an algorithm can possibly take to complete.

Let f(n) and g(n) be functions mapping non-negative integers to real numbers. We say that f(n) is O(g(n)) if there is a real constant $c > 0$ and an integer constant $n_0 \ge 1$ such that $f(n) \le c \cdot g(n)$ for every $n \ge n_0$. f(n) is then said to be big-Oh of g(n).

1.6.2 Omega Notation, Ω

The notation Ω(n) is the formal way to express a lower bound on an algorithm's running time. It is commonly used to state the best-case time complexity, i.e. the least amount of time an algorithm can possibly take to complete.

- 27.

We say that f(n) is Ω(g(n)) if there is a real constant $c > 0$ and an integer constant $n_0 \ge 1$ such that $f(n) \ge c \cdot g(n)$ for every $n \ge n_0$.

1.6.3 Theta Notation, Θ

Theta, written Θ, is an asymptotic notation that denotes an asymptotically tight bound on the growth rate of an algorithm's running time. f(n) is Θ(g(n)) if there are real constants $c_1 > 0$, $c_2 > 0$ and an integer constant $n_0 \ge 1$ such that $c_1 \cdot g(n) \le f(n) \le c_2 \cdot g(n)$ for every input size $n \ge n_0$. Therefore, f(n) being Θ(g(n)) implies that f(n) is O(g(n)) as well as Ω(g(n)). The notation Θ(n) is thus the formal way to express both the lower bound and the upper bound of an algorithm's running time.

Common growth-rate functions:

| Notation | Name |
|---|---|
| O(1) | constant |
| O(log n) | logarithmic |
| O((log n)^c) | polylogarithmic |
| O(n) | linear |
| O(n^2) | quadratic |
| O(n^c) | polynomial |
| O(c^n) | exponential (c > 1) |

1.6.4 How to Analyze a Program

In general, how can you determine the running time of a piece of code? The answer depends on what kinds of statements are used.

Sequence of statements:

    statement 1;
    statement 2;
    …
    statement k;

The total time is found by adding the times for all statements:

total time = time(statement 1) + time(statement 2) + … + time(statement k)

If each statement is "simple" (involves only basic operations), then the time for each statement is constant and the total time is also constant: O(1).
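A worked check of the definitions in Sections 1.6.1 to 1.6.3, using the hypothetical running-time function $f(n) = 3n + 2$ with $g(n) = n$:

$$
\begin{aligned}
f(n) = 3n + 2 &\le 3n + n = 4n \quad \text{for } n \ge 2 &&\Rightarrow\ f(n) \text{ is } O(n) \text{ with } c = 4,\ n_0 = 2\\
f(n) = 3n + 2 &\ge 3n \quad \text{for } n \ge 1 &&\Rightarrow\ f(n) \text{ is } \Omega(n) \text{ with } c = 3,\ n_0 = 1\\
3n \le f(n) &\le 4n \quad \text{for } n \ge 2 &&\Rightarrow\ f(n) \text{ is } \Theta(n) \text{ with } c_1 = 3,\ c_2 = 4,\ n_0 = 2
\end{aligned}
$$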

- 28.

If-Then-Else:

    if (condition) then
        block 1 (sequence of statements)
    else
        block 2 (sequence of statements)
    end if;

Here, either block 1 or block 2 will execute. Therefore, the worst-case time is the slower of the two possibilities: max(time(block 1), time(block 2)). If block 1 takes O(1) and block 2 takes O(N), the if-then-else statement is O(N).

Loops:

    for I in 1 .. N loop
        sequence of statements
    end loop;

The loop executes N times, so the sequence of statements also executes N times. If we assume the statements are O(1), the total time for the for loop is N * O(1), which is O(N).

Example (nested loops):

    for I in 1 .. N loop
        for J in 1 .. M loop
            sequence of statements
        end loop;
    end loop;

The outer loop executes N times. Every time the outer loop executes, the inner loop executes M times. As a result, the statements in the inner loop execute a total of N * M times, and the complexity is O(N * M). In the common special case where the inner loop also runs up to N (that is, M = N), the total complexity for the two loops is O(N^2).

Statements with function/procedure calls:

When a statement involves a function/procedure call, the complexity of the statement includes the complexity of the function/procedure. Assume that function/procedure f takes constant time, and that function/procedure g takes time proportional to (linear in) the value of its parameter k. Then the statements below have the time complexities indicated:
- f(k) is O(1)
- g(k) is O(k)

When a loop is involved, the same rule applies. For example:

    for J in 1 .. N loop
        g(J);
    end loop;

has complexity O(N^2): the loop executes N times and each function/procedure call is of complexity O(N).
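The loop rules above can be checked empirically by counting how many times the innermost statement runs. In this sketch the class and method names are hypothetical, and `g` is a stand-in for a procedure whose cost is linear in its parameter.

```java
// Counts executions of the innermost statement to check the loop rules.
class LoopCountDemo {

    // Nested loops: the outer loop runs N times, the inner loop M times per pass -> N*M.
    static long nestedLoops(int n, int m) {
        long count = 0;
        for (int i = 1; i <= n; i++) {
            for (int j = 1; j <= m; j++) {
                count++;                        // the O(1) "sequence of statements"
            }
        }
        return count;
    }

    // Hypothetical g(k): does work proportional to k and returns the steps performed.
    static long g(int k) {
        long steps = 0;
        for (int t = 1; t <= k; t++) steps++;
        return steps;
    }

    // for J in 1 .. N loop g(J); end loop;  ->  1 + 2 + ... + N steps in total.
    static long loopCallingG(int n) {
        long total = 0;
        for (int j = 1; j <= n; j++) total += g(j);
        return total;
    }

    public static void main(String[] args) {
        System.out.println(nestedLoops(100, 50));   // 5000
        System.out.println(loopCallingG(100));      // 5050
    }
}
```

`loopCallingG(n)` returns n(n + 1)/2, which grows as n^2 and so agrees with the O(N^2) bound stated above.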

- 29.

[Figure: Algorithm growth rates (cont.): time requirements as a function of the problem size n]
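The growth functions listed in Section 1.6.3 can be tabulated for a few input sizes to show how quickly they diverge. This is a minimal sketch; the class name and the method `row` are hypothetical, n is restricted to powers of two so that log2 n is an integer, and 2^n is computed as `1L << n`, which only fits in a `long` for n up to 62.

```java
// Tabulates common growth functions for small input sizes n (powers of two).
class GrowthRateTable {

    // floor(log2 n) for n >= 1: the index of the highest set bit.
    static int log2(long n) {
        return 63 - Long.numberOfLeadingZeros(n);
    }

    // Returns {log2 n, n log2 n, n^2, n^3, 2^n}; valid for 1 <= n <= 62.
    static long[] row(int n) {
        long lg = log2(n);
        return new long[]{lg, n * lg, (long) n * n, (long) n * n * n, 1L << n};
    }

    public static void main(String[] args) {
        System.out.printf("%4s %7s %9s %6s %7s %12s%n", "n", "log2 n", "n log2 n", "n^2", "n^3", "2^n");
        for (int n = 2; n <= 32; n *= 2) {
            long[] r = row(n);
            System.out.printf("%4d %7d %9d %6d %7d %12d%n", n, r[0], r[1], r[2], r[3], r[4]);
        }
    }
}
```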


- 31.

1.6.4.1 Some Mathematical Facts
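The following standard summation identities are the ones needed to obtain closed forms for nested-loop counts such as the triple loop in the next example:

$$
\begin{aligned}
\sum_{i=1}^{n} 1 &= n\\
\sum_{i=1}^{n} i &= \frac{n(n+1)}{2}\\
\sum_{i=1}^{n} i^2 &= \frac{n(n+1)(2n+1)}{6}\\
\sum_{i=1}^{n} \frac{i(i+1)}{2} &= \frac{1}{2}\left(\sum_{i=1}^{n} i^2 + \sum_{i=1}^{n} i\right) = \frac{n(n+1)(n+2)}{6}\\
\sum_{i=0}^{n} a^i &= \frac{a^{n+1}-1}{a-1} \quad (a \ne 1)
\end{aligned}
$$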

- 32.

Growth-rate functions, example 3:

| Statement | Cost | Times |
|---|---|---|
| for (i=1; i<=n; i++) | c1 | $n+1$ |
| for (j=1; j<=i; j++) | c2 | $\sum_{i=1}^{n} (i+1)$ |
| for (k=1; k<=j; k++) | c3 | $\sum_{i=1}^{n}\sum_{j=1}^{i} (j+1)$ |
| x=x+1; | c4 | $\sum_{i=1}^{n}\sum_{j=1}^{i} j$ |

$$T(n) = c_1 (n+1) + c_2 \sum_{i=1}^{n} (i+1) + c_3 \sum_{i=1}^{n}\sum_{j=1}^{i} (j+1) + c_4 \sum_{i=1}^{n}\sum_{j=1}^{i} j = a n^3 + b n^2 + c n + d$$

The cubic term comes from the innermost statement: $\sum_{i=1}^{n}\sum_{j=1}^{i} j = \sum_{i=1}^{n} \frac{i(i+1)}{2} = \frac{n(n+1)(n+2)}{6}$. So, the growth-rate function for this algorithm is O(n^3).

How much better is O(log2 n)?

| n | O(log2 n) |
|---|---|
| 16 | 4 |
| 64 | 6 |
| 256 | 8 |
| 1024 (1KB) | 10 |
| 16,384 | 14 |
| 131,072 | 17 |
| 262,144 | 18 |
| 524,288 | 19 |
| 1,048,576 (1MB) | 20 |
| 1,073,741,824 (1GB) | 30 |

1.7 Random Access Machine Model

Algorithms can be measured in a machine-independent way using the Random Access Machine (RAM) model. This model assumes a single processor: instructions are executed one after the other, with no concurrent operations. This model of computation is an abstraction that allows us to compare algorithms based on performance. The assumptions made in the RAM model to accomplish this are: each simple operation takes one time step.