We demonstrate Deep Redundancy (DRED) for the Opus codec. DRED makes it possible to include up to 1 second of redundancy in every 20-ms packet we send. That will make it possible to keep a conversation even in extremely bad network conditions.
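
As a rough illustration of what those numbers imply: if every 20-ms packet carries redundancy for up to the previous 1 second of audio, then one arriving packet can fill in up to 50 lost frames before it. The sketch below is only a toy model, not how DRED is implemented. The function name `playable_frames` and the loss patterns are made up for the example, and it ignores how long a receiver is willing to wait for the next packet.

```python
def playable_frames(received, redundancy_ms=1000, frame_ms=20):
    """Toy model: a frame can be played if its own packet arrived, or if any
    of the next `depth` packets arrived (each one is assumed to carry
    redundancy for the `depth` frames that precede it)."""
    depth = redundancy_ms // frame_ms  # 1000 ms / 20 ms = 50 frames
    playable = []
    for i, ok in enumerate(received):
        later = received[i + 1 : i + 1 + depth]
        playable.append(ok or any(later))
    return playable


# One packet, then a full second of loss (50 packets), then one packet.
burst_1s = [True] + [False] * 50 + [True]
# Same thing with one more lost packet: the oldest lost frame is out of reach.
burst_too_long = [True] + [False] * 51 + [True]

print(all(playable_frames(burst_1s)))
print(playable_frames(burst_too_long).count(False))
```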

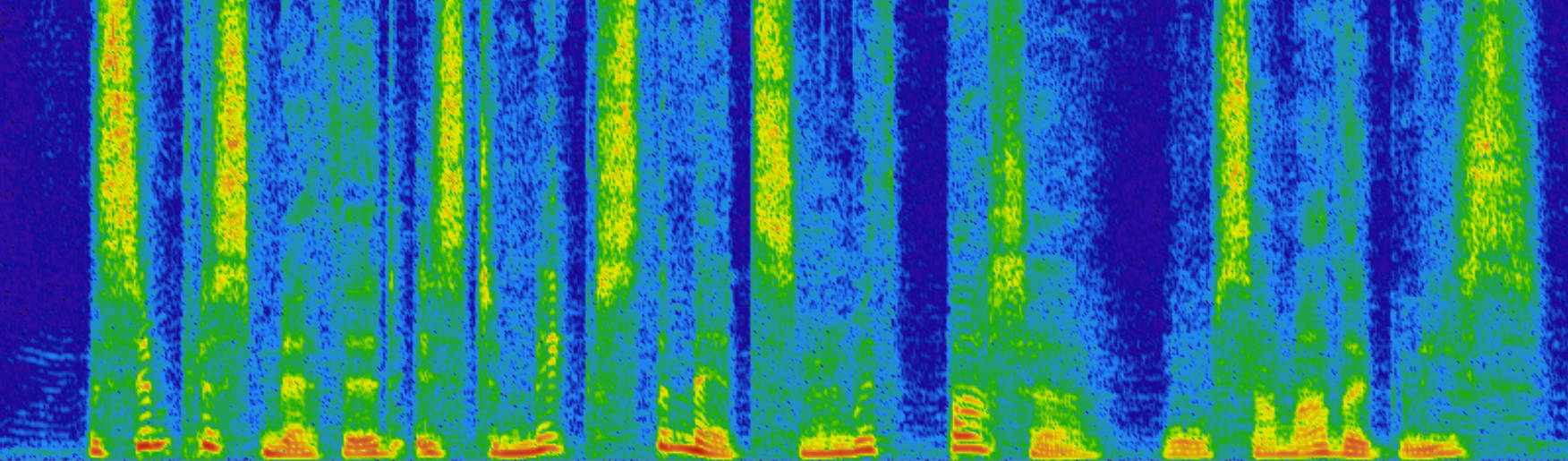

This demo introduces the PercepNet algorithm, which combines signal processing, knowledge of human perception, and deep learning to enhance speech in real time. PercepNet ranked second in the real-time track of the Interspeech 2020 Deep Noise Suppression challenge, despite using only 5% of a CPU core. We've previously talked about PercepNet here, but this time we go into the technical details of how and why it works.

This is some of what I've been up to since joining AWS... My team and I participated in the Interspeech 2020 Deep Noise Suppression Challenge and got both the top spot in the non-real-time track, and the second spot in the real-time track. See the official Amazon Science blog post for more details on how it happened and how it's already shipping in Amazon Chime.

You can also listen to some samples, or read the papers:

Note: This is a first-person account of my involvement in Opus. Since I was not part of the early SILK efforts mentioned below, I cannot speak about its early development. That part is omitted from this account but by no means is that intended to diminish its importance to Opus.

Opus is an open-source, royalty-free, highly versatile audio codec standard. It is now deployed in billions of devices. This is how it came to be. Even before Opus, I had a strong interest in open standards, which led me to start the Speex project in 2002, with help from David Rowe. Speex was one of the first modern royalty-free speech codecs. It was shipped in many applications, especially games, but because it was slightly inferior to the standard codecs of the time, it never achieved a critical mass of deployment.

In 2007, when working on a high-quality videoconferencing project as part of my post-doc, I realized the need for a high-fidelity audio codec that also had very low delay suitable for interactive, real-time applications. At the time, audio codecs were mostly divided into two categories: there were high-delay, high-fidelity transform codecs (like MP3, AAC, and Vorbis) that were unsuitable for real-time operation, and there were low-delay speech codecs (like AMR, Speex, and G.729) with limited audio quality.

That is why I started the Opus ancestor called CELT, an effort to create a high-fidelity transform codec with an ultra-low delay of around 4-8 ms, even lower than the 20 ms typical delay for VoIP and videoconferencing. My first step was to talk with Christopher "Monty" Montgomery, who had previously designed Ogg Vorbis, a high-delay, high-fidelity codec, and was then looking at designing a successor. Even though our sets of goals proved too different for us to merge the two efforts, the discussion was very helpful in that I was able to gain some of the experience Monty got while designing Vorbis. The most important advice I got was "always make sure the shape of the energy spectrum is preserved". In Vorbis (and other codecs), that energy constraint was only partially achieved, through very careful tuning of the encoder, and sometimes at great cost in bitrate. For CELT, I attacked the problem from a different perspective: What if CELT could be designed so that the constraint was built into the format, and thus mathematically impossible to violate?

This is where the CELT name originated: Constrained Energy Lapped Transform. The format itself would constrain the energy so that no effort or bits would be wasted. Although simple in principle, that idea required completely new compression and math techniques that had never previously been used in transform codecs. One of them was algebraic vector quantization, which had been used for a long time in speech codecs, but never in transform codecs, which still used scalar quantization. Overall, it took about 2 years to figure out the core of the CELT technology, with the help of Tim Terriberry, Greg Maxwell, and other Xiph contributors.
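
One way to picture a built-in energy constraint is gain-shape coding: the band energy is sent as one number and the spectral shape as a unit-norm vector, so whatever error the shape quantizer makes, the decoded energy still equals the coded energy. The sketch below uses a greedy pyramid-style algebraic quantizer (integer pulses whose absolute values sum to K). It is a simplified illustration, not the actual CELT algorithm or bitstream. The function names and the example band are hypothetical, and a real codec would also quantize the gain.

```python
import math


def energy(vec):
    """Euclidean norm of a band."""
    return math.sqrt(sum(v * v for v in vec))


def pvq_quantize(band, k):
    """Greedily place k unit pulses so the integer vector follows |band|.
    The result always satisfies sum(|y_i|) == k."""
    l1 = sum(abs(v) for v in band)
    target = [abs(v) * k / l1 if l1 > 0 else 0.0 for v in band]
    y = [0] * len(band)
    for _ in range(k):
        # Put the next pulse where the target is most under-represented.
        best = max(range(len(band)), key=lambda i: target[i] - y[i])
        y[best] += 1
    return [int(math.copysign(yi, v)) if yi else 0 for yi, v in zip(y, band)]


def encode_band(band, k):
    """Return (gain, pulses): the energy and the shape are coded separately."""
    return energy(band), pvq_quantize(band, k)


def decode_band(gain, pulses):
    """Normalize the pulse vector to unit norm, then scale by the coded gain."""
    norm = energy(pulses)
    return [gain * p / norm for p in pulses]


band = [0.3, -1.2, 0.8, 0.1, -0.4, 2.0, -0.7, 0.05]
for k in (1, 4, 16):
    gain, pulses = encode_band(band, k)
    decoded = decode_band(gain, pulses)
    # The shape gets more accurate as k grows, but the energy never drifts.
    print(k, pulses, round(energy(decoded) - gain, 12))
```

With few pulses the shape is very coarse, but the decoded energy is the same in every case, which is the point of putting the constraint in the format rather than in encoder tuning.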

Because of the ultra low delay constraint, CELT was not trying to match or exceed the bitrate efficiency of MP3 and AAC, since these codecs benefited from a long delay (100-200 ms). It was thus a complete surprise when — only 6 months after the first commits — a listening test showed CELT already out-performing MP3 despite the difference in delay. That was attributed to the ancient technology behind MP3. CELT was still behind the more recent AAC, with no plan to compete on efficiency alone.

Despite still being in early development, some people started using CELT for their projects, mostly because it was the only codec that would suit their needs. These early users greatly helped CELT to improve by providing real-life use cases and raising issues that could not have been foreseen with just "lab" testing. For example, a developer who was using CELT for network music performances (musicians playing live together in different cities) once complained that "CELT works very well for everyone, except for me with my bass guitar". By getting an actual sample, it was easy to find the problem and address it. There were many similar stories and over a few years, many parts of CELT were changed or completely rewritten.

There has been no mention of the name Opus so far because there was still a missing piece. Around the same time CELT was getting started, another codec effort was quietly started by Koen Vos, Søren Skak Jensen, and Karsten Vandborg Sørensen at Skype under the name SILK. SILK was a more traditional speech codec, but with state-of-the-art efficiency, competing with or exceeding other speech codecs. We became aware of SILK in 2009 when Skype proposed it as a royalty-free codec to the Internet Engineering Task Force (IETF), the main standards body governing the Internet. We immediately joined the effort, proposing CELT to the emerging working group. It was a highly political effort, given the presence of organizations heavily invested in royalty-bearing codecs. There was thus strong pressure to restrict the working group's effort to standardizing a single codec. That drove us to investigate ways to combine SILK and CELT. The two codecs were surprisingly complementary, SILK being more efficient at coding speech up to 8 kHz, and CELT being more efficient at coding music and achieving delays below 10 ms. The only thing that neither codec did very efficiently was coding high-quality speech covering the full audio bandwidth (up to 20 kHz). This is where SILK and CELT could be used simultaneously to achieve high-quality, fullband speech coding at just 32 kb/s, something no other codec could achieve. Opus was born and, thanks to the IETF collaboration, the result would be better than the sum of its SILK and CELT parts.

Integrating SILK and CELT required changes to both technologies. On the CELT side, it meant supporting and optimizing for frame sizes up to 20 ms, which is no longer ultra-low delay but still low enough for videoconferencing. Through collaboration in the working group, CELT also gained a perceptual pitch post-filter contributed by Raymond Chen at Broadcom. The post-filter and the 20-ms frames also increased the efficiency to the point where some audio enthusiasts started comparing Opus to HE-AAC on music compression. Unsurprisingly, they found the higher-delay HE-AAC to have higher quality at the same bitrate, but they also started providing specific feedback that helped improve Opus. This went on for several months, until a listening test eventually showed Opus having higher quality than HE-AAC, despite HE-AAC being designed for much higher delays. At that point, Opus really became a universal audio codec. It was on par with or better than all other audio codecs, regardless of the application, be it speech, music, real-time, storage, or streaming.

Opus officially became an IETF standard in 2012. At the time, the IETF was also defining the WebRTC standard for videoconferencing on the web. Thanks to its efficiency and its royalty-free nature, Opus became the mandatory-to-implement standard for WebRTC. In part thanks to WebRTC, Opus is now included in all major browsers and in both the Android and iOS mobile operating systems. It is also used alongside AV1 in YouTube. Most large technology companies now ship products using Opus. This ensures interoperability across different applications that can communicate with a common codec. Because there are no royalties, it also enables some products that would not otherwise be viable (e.g. because you can't afford to pay a $0.50 royalty for each freely downloaded copy of a client application).

As with many other codecs, only the Opus decoder is standardized, which means that the encoder can keep improving without breaking compatibility. This is how Opus keeps improving to this day, with the latest version, Opus 1.3, released in October 2018.

This is a follow-up on the first LPCNet demo. In this new demo, we turn LPCNet into a very low-bitrate neural speech codec (see submitted paper) that's actually usable on current hardware and even on phones. It's the first time a neural vocoder is able to run in real-time using just one CPU core on a phone (as opposed to a high-end GPU). The resulting bitrate — just 1.6 kb/s — is about 10 times less than what wideband codecs typically use. The quality is much better than existing very low bitrate vocoders and comparable to that of more traditional codecs using a higher bitrate.

This new demo presents LPCNet, an architecture that combines signal processing and deep learning to improve the efficiency of neural speech synthesis. Neural speech synthesis models like WaveNet have recently demonstrated impressive speech synthesis quality. Unfortunately, their computational complexity has made them hard to use in real-time, especially on phones. As was the case in the RNNoise project, one solution is to use a combination of deep learning and digital signal processing (DSP) techniques. This demo explains the motivations for LPCNet, shows what it can achieve, and explores its possible applications.
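
To give a feel for the DSP half of that combination, here is a minimal sketch of linear prediction, the "LPC" in LPCNet: each sample is predicted from a weighted sum of the previous ones, which leaves only a much smaller residual for a neural network to model. This is a textbook Levinson-Durbin recursion run on a synthetic signal, not the LPCNet code. The function names, the predictor order, and the test signal are all assumptions made for the example.

```python
import math
import random


def autocorrelation(x, order):
    """Autocorrelation of x for lags 0..order."""
    return [sum(x[n] * x[n - lag] for n in range(lag, len(x)))
            for lag in range(order + 1)]


def levinson_durbin(r, order):
    """Solve for predictor coefficients a[1..order] such that
    x[n] is approximated by sum(a[k] * x[n - k])."""
    a = [0.0] * (order + 1)
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] - sum(a[j] * r[i - j] for j in range(1, i))
        k = acc / err  # reflection coefficient
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= 1.0 - k * k
    return a[1:]


def residual(x, coeffs):
    """What is left after subtracting the linear prediction."""
    order = len(coeffs)
    return [x[n] - sum(c * x[n - 1 - k] for k, c in enumerate(coeffs))
            for n in range(order, len(x))]


# Synthetic "voiced-like" signal: two sinusoids plus a little noise.
rng = random.Random(0)
signal = [math.sin(0.3 * n) + 0.5 * math.sin(0.7 * n) + 0.05 * rng.gauss(0, 1)
          for n in range(400)]

order = 4
coeffs = levinson_durbin(autocorrelation(signal, order), order)
res = residual(signal, coeffs)

signal_energy = sum(v * v for v in signal)
residual_energy = sum(v * v for v in res)
print("residual / signal energy:", residual_energy / signal_energy)
```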

Opus gets another major update with the release of version 1.3. This release brings quality improvements to both speech and music, while remaining fully compatible with RFC 6716. This is also the first release with Ambisonics support. This Opus 1.3 demo describes a few of the upgrades that users and implementers will care about the most. You can download the new version from the Opus website.

After more than 10 years not having any desktop at home, I recently started doing some deep learning work that requires more processing power than a quad-core laptop can provide. So I went looking for a powerful desktop machine, with the constraint that it had to be quiet. I do a lot of work with audio (e.g. Opus), so I can't have a lot of noise in my office. I could have gone with just a remote machine, but sometimes it's convenient to have some compute power locally. Overall, I'm quite pleased with the result, so I'm providing details here in case anyone else finds the need for a similar machine. It took quite a bit of effort to find a good combination of components. I don't pretend any component is the optimal choice, but they're all pretty good.

First, here's what the machine looks like right now.

Now let's look at each component separately.

Dual-socket Xeon E5-2640 v4 (10 cores/20 threads each) running at 2.4 GHz. One pleasant surprise I have found is that even under full load, the turbo is able to keep all cores running at 2.6 GHz. With just one core in use, the clock can go up to 3.4 GHz. The listed TDP is 90W, which isn't so bad. In practice, even when fully loaded with AVX2 computations, I haven't been able to reach 90W, so I guess it's a pretty conservative value. The main reason I went with Intel was dual-socket motherboard availability. Had I chosen to go single-socket, I would probably have picked up an AMD Threadripper 1950X instead. As to why I need so many CPU cores when deep learning is all about GPUs these days, what I can say is that some of the recurrent neural networks I'm training need several thousand time steps and for now CPUs seem to be more efficient than GPUs on those.

Choosing the right CPU coolers has been one of the main headaches I've had. It's very hard to rely on manufacturer specs and even reviews, because they tend to use different testing methodologies (you can only compare tests coming from the same lab). Also, comparing water cooling to air cooling is hard because there's a case issue. Air coolers sit entirely inside the case, so the noise level depends on the case damping. For water cooling, the fans are (obviously) set up in holes in the case, so they benefit less from noise damping. Add to that the fact that most tests report A-weighted dB values, which underestimate the kind of low-frequency noise that pumps tend to make. In the end, I went with a pair of Noctua NH-U12DX i4 air coolers. I preferred those over water coolers based on the reviews I saw, but also on the reasoning that the fan was about the same size as those on water radiators, but at least it was inside the case.
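
To put a number on how much A-weighting discounts low frequencies, here is a small sketch of the standard A-weighting curve (the analog formula from IEC 61672, normalized to 0 dB at 1 kHz). The function name and the list of frequencies are just for illustration, and the input is assumed to be a positive frequency in Hz.

```python
import math


def a_weighting_db(freq_hz):
    """A-weighting gain in dB at freq_hz (freq_hz > 0), 0 dB at 1 kHz."""
    f2 = freq_hz ** 2
    ra = (12194.0 ** 2 * f2 ** 2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20.0 * math.log10(ra) + 2.00


for f in (50, 100, 200, 1000):
    print(f"{f:5d} Hz: {a_weighting_db(f):6.1f} dB")
```

A tone at 100 Hz is discounted by about 19 dB compared to the same level at 1 kHz, so a low-frequency pump hum can look much better in a dBA figure than it sounds in a quiet room.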

Got an ASUS Z10PE-D16 WS motherboard. All I can say is that it works fine and I haven't had any issue with it. Not sure how it would compare to other boards.

I have 128 GB ECC memory, split as 8x 16 GB so as to fill all 4 channels of each CPU. Nothing more to say here.

For now, deep learning essentially requires CUDA, which means I had to get an NVIDIA card. The fastest GPU at a "reasonable" price point for now is the 1080 Ti, so that part wasn't hard to figure out. The hard part was finding a quiet 1080 Ti-based card. I originally went with the water-cooled EVGA GeForce GTX 1080 Ti SC2 HYBRID for which I saw good reviews and which was recommended by a colleague. I went with water cooling because I was worried about the smaller fans usually found on GPUs. Unfortunately, the card turned out to be too noisy for me. The pump makes an audible low-frequency noise even when idle and the 120 mm fan that comes with the radiator is a bit noisy (and runs even when idle). So that card may end up being a good choice for some people, but not for a really quiet desktop. I replaced it with an air-cooled MSI GeForce GTX 1080 Ti GAMING X TRIO. I chose it based on both good reviews and availability. I've been pleasantly surprised by how quiet it is. When idle or lightly loaded, the fans do not spin at all, which is good since I'm not using it all the time. So far the highest load I've been able to generate on it is around 150W out of the theoretical 280W max power. Even at that relatively high load, the fans are spinning at 28% of their max speed and, although audible, they're reasonably quiet. In fact, the air-cooled MSI is much quieter under load than the EVGA when idle.

To avoid wasting resources on the main GPU, I went with a separate, much smaller GPU to handle the actual display. I wanted an AMD card because of the good open-source Linux driver support. I didn't put too much thought into that one, and I went with an MSI Radeon RX 560 AERO. It's probably not the quietest card out there, but considering that I don't do any fancy graphics, it's very lightly loaded, so the fan spins pretty slowly.

According to some reviews I looked at, the Corsair HX1000i appeared to be the quietest PSU out there. All I can say is that so far, even under load, I haven't been able to get the PSU fan to spin at all, so it looks like a good choice.

The case was another big headache. It's really hard to get useful data since the amount of noise depends on what's in the case more than on the case itself. After all, cases don't cause noise, they attenuate it. In the end, I went with the Fractal Design Define XL R2, mostly due to the overall good reviews and the sound absorbing material in the panels. Again, I can't compare it to other cases, but it seems to be dampening the noise from the CPU coolers pretty effectively.

The Define XL R2 case originally came with three Silent Series R2 3-pin fans. When running at 12V, those fans are actually pretty noisy. The case comes with a 5V/7V/12V switch, so I had the fans run on 5V instead, making them much quieter. The downside is of course lower air flow, but it looked (kinda) sufficient. Still, I wanted to see if I could get both better air flow and lower noise by trying a Noctua NF-A14 fan. The good point is that it indeed has a better air flow/noise ratio. The not so good point is that it requires more than 7V to start, so I couldn't operate it at low voltage. The best I could do was to use it on 12V with the low-noise adapter, which is equivalent to running it around 9V. In that configuration, it has a similar noise level to the Silent Series R2 running at 5V, but better air flow. That's still good, but I wish I could make it even quieter. So for now I have one Noctua fan and two Silent Series R2, providing plenty of air flow without too much noise.

Got a 1 TB SSD, which of course is completely silent. Not much more to say on that one.

For the stuff that doesn't fit on an SSD, I decided to get an actual spinning hard disk. The main downside is that it is currently the noisiest component of the system by far. It's not so much the direct noise from the hard disk as the vibrations it propagates through the entire case. Despite the disk being mounted on rubber rings, the vibrations cause very audible low-frequency noise. For now I'm mitigating the issue by having the disk spin down when I'm not using it (and I'm not using it often), but it would be nice to not have to do that. I've been considering home-made suspensions, but I haven't tried them yet. I would prefer some off-the-shelf solution, but haven't found anything sufficiently interesting yet. The actual drive I have is an 8 TB, helium-filled WD Red, but I doubt any other 5400 rpm drive would have been significantly better or worse. The only extra annoyance with the WD Red is that it automatically moves the heads every 5 seconds, which makes additional noise. Apparently they call it pre-emptive wear leveling.

I've seen many contradictory theories about how to configure the case fans. Some say you need positive pressure (more intake than exhaust), some say negative pressure (more exhaust), some say you need to balance them. I don't pretend to solve the debate, but I can talk about what works in this machine. I decided against negative pressure because of dust issues (you don't want dust to enter through all openings in the case) and the initial configuration I got was one intake at the bottom of the case, one intake at the front, and one exhaust at the back. It worked fine, but then I noticed something strange. If I just removed the exhaust fan, my CPU would run 5 degrees cooler! That's right, two intakes and no exhaust ran cooler. I don't fully understand why, but one thing I noticed was that the exhaust fan was causing the air flow at the back to be cooler, while causing hot air to be expelled from the holes in the 5.25" bay. I have no idea why, but clearly, it's disrupting the air flow. One thing worth pointing out is that even without the exhaust fan, the CPU fans are pushing air right towards the rear exhaust and the positive pressure in the case is probably helping them. When the CPUs are under load, there's definitely a lot of hot air coming out from the rear. One theory I have is that not having an exhaust fan means a higher positive pressure, causing many openings in the case to act as exhaust (no air would be expelled if the pressure were balanced). So in the end, I added a third fan as intake at the front, further increasing the positive pressure and reducing temperature under load by another ~1 degree. As of now, when fully loading the CPUs, I get a max temperature of 55 degrees for CPU 0 and 64 degrees for CPU 1. The difference may look strange, but it's likely due to the CPU 0 fan blowing its air on the CPU 1 fan.

This demo presents the RNNoise project, showing how deep learning can be applied to noise suppression. The main idea is to combine classic signal processing with deep learning to create a real-time noise suppression algorithm that's small and fast. No expensive GPUs required — it runs easily on a Raspberry Pi. The result is much simpler (easier to tune) and sounds better than traditional noise suppression systems (been there!).

Over the last three years, we have published a number of Daala technology demos. With pieces of Daala being contributed to the Alliance for Open Media's AV1 video codec, now seems like a good time to go back over the demos and see what worked, what didn't, and what changed compared to the description we made in the demos.

Here's the latest addition to the Daala demo series. This demo describes the new Daala deringing filter, which replaces a previous attempt with a less complex algorithm that performs much better. Those who like to know all the math details can also check out the full paper.

Here's my new contribution to the Daala demo effort. Perceptual Vector Quantization has been one of the core ideas in Daala, so it was time for me to explain how it works. The details involve lots of maths, but hopefully this demo will make the general idea clear enough. I promise that the equations in the top banner are the only ones you will see!

As a contribution to Monty's Daala demo effort, I decided to demonstrate a technique I've recently been developing for Daala: image painting. The idea is to represent images as directions and 1-D patterns.

Three years ago Opus got rated higher than HE-AAC and Vorbis in a 64 kb/s listening test. Now, the results of the recent 96 kb/s listening test are in and Opus got the best ratings, ahead of AAC-LC and Vorbis. Also interesting, Opus at 96 kb/s sounded better than MP3 at 128 kb/s.

I just got back from the 135th AES convention, where Koen Vos and I presented two papers on voice and music coding in Opus.

Also of interest at the convention was the Fraunhofer codec booth. It appears that Opus is now causing them some concerns, which is a good sign. And while we're on that topic, the answer is yes :-)