-

Notifications

You must be signed in to change notification settings - Fork 442

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add compaction pacing mechanism #179

Add compaction pacing mechanism #179

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Reviewable status: 0 of 10 files reviewed, 6 unresolved discussions (waiting on @ajkr, @petermattis, and @Ryanfsdf)

compaction.go, line 1026 at r1 (raw file):

for key, val := iter.First(); key != nil; key, val = iter.Next() { // Slow down memtable flushing to match fill rate. if c.flushing != nil {

This pacing code has become significant enough that I think it should be separated out from this main loop. I think if that separation is done nicely we'll be able to test the pacing code in isolation. This would be a significant change, and I'd suggest doing it in a follow-on PR and not this PR.

compaction.go, line 1044 at r1 (raw file):

prevBytesIterated = c.bytesIterated d.pendingFlushCompactionDebt += uint64(d.estimatedWAmp * float64(flushAmount))

I think it be more accurate for pendingFlushCompactionDebt to track the number of "in flight" flushed bytes and to compute the actual debt when this variable is used as pendingFlushCompactionBytes * d.estimatedWAmp.

compaction.go, line 1073 at r1 (raw file):

} } else { var curCompactionDebt uint64

There is a lot of shared code between this and the flush pacing. See my comment above that this should be restructured in a follow-on PR.

compaction.go, line 1074 at r1 (raw file):

} else { var curCompactionDebt uint64 if d.compactionDebt + d.pendingFlushCompactionDebt > c.bytesIterated {

runCompaction can run concurrently, once for a flush and once for a compaction. It looks like you're updating pendingFlushCompactionDebt when flushing and then reading it here...without any synchronization.

compaction_picker.go, line 149 at r1 (raw file):

estimatedWAmp += levelRatio + 1 } else { // Return because next levels no longer contribute to compaction debt.

I'm not understanding this comment. If levelSize <= p.levelMaxBytes[level] indicates that no compaction is needed at level, but don't subsequent levels affect write amp? It might be misunderstanding the calculation you're trying to perform. More comments would be helpful.

db.go, line 176 at r1 (raw file):

compactionLimiter *rate.Limiter compactionDebt uint64

Is there any locking which protects the update to this variable? If it is updated atomically, please add an updated atomically comment.

608a182

to

a17e85c

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Reviewable status: 0 of 10 files reviewed, 6 unresolved discussions (waiting on @ajkr and @petermattis)

compaction.go, line 1026 at r1 (raw file):

Previously, petermattis (Peter Mattis) wrote…

This pacing code has become significant enough that I think it should be separated out from this main loop. I think if that separation is done nicely we'll be able to test the pacing code in isolation. This would be a significant change, and I'd suggest doing it in a follow-on PR and not this PR.

Ack. It might be worthwhile to split up runCompaction into runFlush and runCompaction as well since there seems to be a lot of c.flushing != nil checks.

compaction.go, line 1044 at r1 (raw file):

Previously, petermattis (Peter Mattis) wrote…

I think it be more accurate for

pendingFlushCompactionDebtto track the number of "in flight" flushed bytes and to compute the actual debt when this variable is used aspendingFlushCompactionBytes * d.estimatedWAmp.

I agree, I've updated it.

compaction.go, line 1073 at r1 (raw file):

Previously, petermattis (Peter Mattis) wrote…

There is a lot of shared code between this and the flush pacing. See my comment above that this should be restructured in a follow-on PR.

Ack.

compaction.go, line 1074 at r1 (raw file):

Previously, petermattis (Peter Mattis) wrote…

runCompactioncan run concurrently, once for a flush and once for a compaction. It looks like you're updatingpendingFlushCompactionDebtwhen flushing and then reading it here...without any synchronization.

I was under the wrong assumption that assigning here was atomic. I made this assumption in some other places too, which I've fixed.

compaction_picker.go, line 149 at r1 (raw file):

Previously, petermattis (Peter Mattis) wrote…

I'm not understanding this comment. If

levelSize <= p.levelMaxBytes[level]indicates that no compaction is needed atlevel, but don't subsequent levels affect write amp? It might be misunderstanding the calculation you're trying to perform. More comments would be helpful.

I think the way I've used w-amp here is a bit unconventional and incorrect. The way I define w-amp is really how much compaction debt we would incur per byte added to L0. This is slightly different from w-amp since a byte that is added to some arbitrary level contributes to w-amp but may not necessarily contribute to compaction debt (In the case where the level isn't full).

I've adjusted the naming to better fit the implementation and added some more comments.

db.go, line 176 at r1 (raw file):

Previously, petermattis (Peter Mattis) wrote…

Is there any locking which protects the update to this variable? If it is updated atomically, please add an

updated atomicallycomment.

Done. I also had to do this with the float64 but there is no nice atomic.LoadFloat64 to use. I've changed the value to uint64 which loses a bit of resolution (though not very significant). There are some unsafe tricks to atomically read and write float64 but I'm not sure if that's worth doing.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Reviewable status: 0 of 10 files reviewed, 8 unresolved discussions (waiting on @ajkr and @Ryanfsdf)

compaction.go, line 1026 at r1 (raw file):

Previously, Ryanfsdf (Ryan Kim) wrote…

Ack. It might be worthwhile to split up

runCompactionintorunFlushandrunCompactionas well since there seems to be a lot ofc.flushing != nilchecks.

There were previously separate flush and compaction routines, but I merged them due to the significant overlap. Rather than splitting them apart, I think there should be some sort of "pacer" interface that is called on every loop iteration that encapsulates all of this pacing logic. That "pacer" would be specialized for flushing and compaction.

compaction.go, line 1074 at r2 (raw file):

pendingFlushCompactionDebt := atomic.LoadUint64(&d.pendingFlushCompactionDebt) compactionDebtMultiplier := atomic.LoadUint64(&d.compactionDebtMultiplier) flushCompactionDebt := pendingFlushCompactionDebt * compactionDebtMultiplier

Rather than these calculations, I wonder if it would be better to call d.mu.versions.picker.estimatedCompactionDebt every 1000 iterations or so, and pass in pendingFlushCompactionDebt as an l0ExtraSize parameter. This would remove the need to compute compactionDebtMultiplier. Thoughts?

compaction_picker.go, line 149 at r1 (raw file):

Previously, Ryanfsdf (Ryan Kim) wrote…

I think the way I've used

w-amphere is a bit unconventional and incorrect. The way I definew-ampis really how much compaction debt we would incur per byte added to L0. This is slightly different from w-amp since a byte that is added to some arbitrary level contributes to w-amp but may not necessarily contribute to compaction debt (In the case where the level isn't full).I've adjusted the naming to better fit the implementation and added some more comments.

Ok, this makes more sense now. Thanks for the comments and renaming.

compaction_picker.go, line 113 at r2 (raw file):

for _, file := range p.vers.files[0] { levelSize += file.size }

This can be levelSize = totalSize(p.vers.files[0]).

compaction_picker.go, line 122 at r2 (raw file):

var nextLevelSize uint64 for level := p.baseLevel; level < numLevels - 1; level++ { if nextLevelSize > 0 {

It isn't clear to me why you're tracking nextLevelSize. In the first iteration of this loop, nextLevelSize will be 0 which will overwrite the computation of levelSize outside of the loop. Am I missing something here?

compaction_picker.go, line 142 at r2 (raw file):

levelSize += bytesAddedToNextLevel bytesAddedToNextLevel = 0

I think some of the logic here could be rearranged to make this clearer:

levelSize += bytesAddedToNextLevel

if levelSize <= uint64(p.levelMaxBytes[level]) {

return ...

}

bytesAddedToNextLevel = levelSize - uint64(p.levevlMaxBytes[level])

...

compaction_picker_test.go, line 17 at r2 (raw file):

) func load(d *datadriven.TestData) (*version, *Options, string) {

This function needs a somewhat more describe name, such as loadVersion or initVersion.

db.go, line 176 at r1 (raw file):

Previously, Ryanfsdf (Ryan Kim) wrote…

Done. I also had to do this with the

float64but there is no niceatomic.LoadFloat64to use. I've changed the value to uint64 which loses a bit of resolution (though not very significant). There are someunsafetricks to atomically read and writefloat64but I'm not sure if that's worth doing.

FYI, you don't need to use unsafe to store a float64 in a uint64. See https://golang.org/pkg/math/#Float64bits and https://golang.org/pkg/math/#Float64frombits.

Please add a comment describing what compactionDebt is measuring.

db.go, line 180 at r2 (raw file):

// written atomically. compactionDebt uint64 pendingFlushCompactionDebt uint64

Nit: pending isn't quite the right term here. Pending makes me think of all of the immutable memtables, but this is tracking the compaction debt imposed by L0 tables that are in the process of being written. I don't have a better suggestion. Naming is hard.

Similar to above, please add a comment describing what this field is measuring.

a17e85c

to

4a4871e

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I made some changes to how the compaction debt is calculated.

I noticed before that when L0->LBase compactions happened, the compaction debt wouldn't go down at all. This was because I didn't properly account for the size of LBase during the compaction. I've updated this in compaction_picker.go. Now we use the predicted size of L0 in the current or next L0->LBase compaction to predict how many bytes from LBase will be need to be compacted.

Also, I removed the MinCompactionRate option because I realized that we can actually tie the minimum compaction rate to the minimum flush rate, since they are related. Now in the compaction routine I set the minimum compaction rate to match the speed of memtable flushing. This minimum compaction rate should only take effect when the write throughput is less than 1MB/s, which is the minimum speed of flushing.

I updated the minimum flush rate from 4MB/s to 1MB/s because 4MB/s is still quite fast and the benchmarks at write throughputs less than 4MB/s showed slightly jumpy behavior due to the that flush rate.

Reviewable status: 0 of 10 files reviewed, 7 unresolved discussions (waiting on @ajkr and @petermattis)

compaction.go, line 1026 at r1 (raw file):

Previously, petermattis (Peter Mattis) wrote…

There were previously separate flush and compaction routines, but I merged them due to the significant overlap. Rather than splitting them apart, I think there should be some sort of "pacer" interface that is called on every loop iteration that encapsulates all of this pacing logic. That "pacer" would be specialized for flushing and compaction.

Ack, I'll implement that in the follow-on PR.

compaction.go, line 1074 at r2 (raw file):

Previously, petermattis (Peter Mattis) wrote…

Rather than these calculations, I wonder if it would be better to call

d.mu.versions.picker.estimatedCompactionDebtevery 1000 iterations or so, and pass inpendingFlushCompactionDebtas anl0ExtraSizeparameter. This would remove the need to computecompactionDebtMultiplier. Thoughts?

I think that's good. That should give us a slightly more accurate reading on compaction debt.

compaction_picker.go, line 113 at r2 (raw file):

Previously, petermattis (Peter Mattis) wrote…

This can be

levelSize = totalSize(p.vers.files[0]).

Done.

compaction_picker.go, line 122 at r2 (raw file):

Previously, petermattis (Peter Mattis) wrote…

It isn't clear to me why you're tracking

nextLevelSize. In the first iteration of this loop,nextLevelSizewill be0which will overwrite the computation oflevelSizeoutside of the loop. Am I missing something here?

The computation of levelSize outside of the loop is l0 size. We've already set bytesAddedToNextLevel to the l0 size so it is no longer needed. I guess we could have just set bytesAddedToNextLevel directly.

The purpose of nextLevelSize is for every level after lBase to reuse the computation which was done at the end of the loop.

I've refactored this in estimatedCompactionDebt() to be clearer.

compaction_picker.go, line 142 at r2 (raw file):

Previously, petermattis (Peter Mattis) wrote…

I think some of the logic here could be rearranged to make this clearer:

levelSize += bytesAddedToNextLevel if levelSize <= uint64(p.levelMaxBytes[level]) { return ... } bytesAddedToNextLevel = levelSize - uint64(p.levevlMaxBytes[level]) ...

I removed compactionDebtMultiplier so this is no longer needed.

compaction_picker_test.go, line 17 at r2 (raw file):

Previously, petermattis (Peter Mattis) wrote…

This function needs a somewhat more describe name, such as

loadVersionorinitVersion.

Done.

db.go, line 176 at r1 (raw file):

Previously, petermattis (Peter Mattis) wrote…

FYI, you don't need to use

unsafeto store afloat64in auint64. See https://golang.org/pkg/math/#Float64bits and https://golang.org/pkg/math/#Float64frombits.Please add a comment describing what

compactionDebtis measuring.

With the new approach of measuring compaction debt every 1000 iterations, this is no longer needed.

db.go, line 180 at r2 (raw file):

Previously, petermattis (Peter Mattis) wrote…

Nit:

pendingisn't quite the right term here.Pendingmakes me think of all of the immutable memtables, but this is tracking the compaction debt imposed byL0tables that are in the process of being written. I don't have a better suggestion. Naming is hard.Similar to above, please add a comment describing what this field is measuring.

I've changed it to bytesFlushed. This will be the same as the flush routine's compaction.bytesIterated. The only difference is that it's accessible by the compaction routine.

4a4871e

to

c70573f

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is getting close. I'm curious to see what the benchmarks are looking like.

Reviewable status: 0 of 10 files reviewed, 5 unresolved discussions (waiting on @ajkr and @Ryanfsdf)

compaction.go, line 1068 at r3 (raw file):

// debt is below this threshold, we slow down compactions. If compaction debt is above // this threshold, we let compactions continue as fast as possible. We want to keep // compaction debt as low as possible to match the speed of flushes. This threshold

We don't need to keep compaction debt at zero because doing so may mean we're compacting faster than necessary and having an impact on foreground traffic. So "as low as possible" is slightly inaccurate.

compaction.go, line 1071 at r3 (raw file):

// is set so that a single flush cannot contribute enough compaction debt to overshoot // the threshold. compactionSlowdownThreshold = uint64(estimatedMaxWAmp * float64(d.opts.MemTableSize))

Can you provide some justification for this computation? Looking at this now, I could imagine the compaction slowdown threshold as being something like the sum of the target file sizes per level. That is, allow compaction debt that is equal to 1 sstable over the target size for each level. The existing computation here might be better. I haven't thought about it enough.

compaction_picker.go, line 73 at r3 (raw file):

// of L0->LBase compactions which will need to occur for the LSM tree to // become stable. predictedL0CompactionSize := uint64(p.opts.L0CompactionThreshold * p.opts.MemTableSize)

Nit: I'd prefer s/predicated/estimated/g.

compaction_picker.go, line 78 at r3 (raw file):

// LSM tree to become stable. We multiply this by levelSize(LBase size) to // estimate the compaction debt incurred by LBase in the L0->LBase compactions. compactionDebt += (levelSize * bytesAddedToNextLevel) / predictedL0CompactionSize

Since baseLevel is treated specially, perhaps it is worth pulling it out of the loop as well, and then iterating from level := p.baseLevel+1; level < numLevels - 1; level++. I think that would allow you to get rid of nextLevelSize and have each loop iteration do:

levelSize := totalSize(p.vers.files[level]) + bytesAddedToNextLevel

compaction_picker.go, line 92 at r3 (raw file):

bytesAddedToNextLevel = levelSize - uint64(p.levelMaxBytes[level]) levelRatio := float64(nextLevelSize)/float64(levelSize) compactionDebt += uint64(float64(bytesAddedToNextLevel) * (levelRatio + 1))

Would it be better to use smoothedLevelMultiplier here, rather than levelRatio?

c70573f

to

7ef9249

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

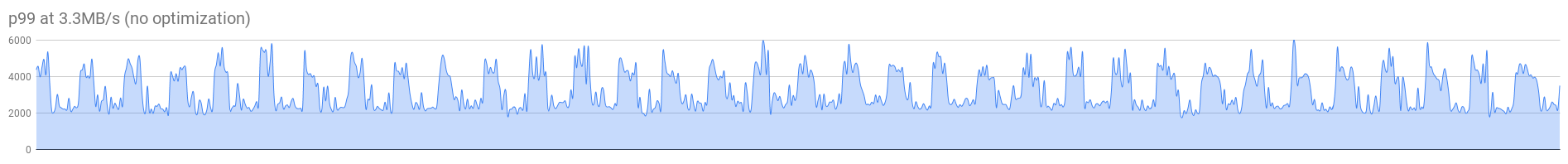

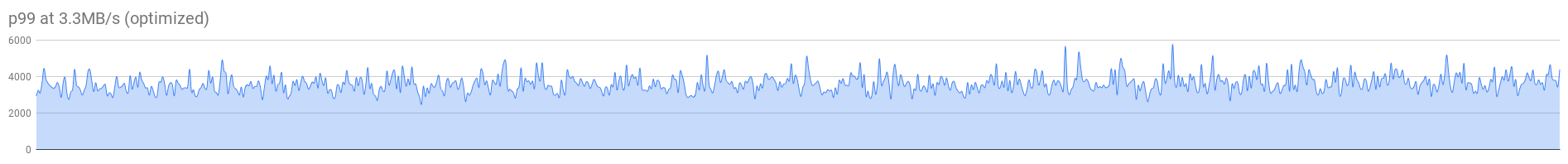

I've been doing some personal benchmarks while making changes to this PR.

Right now, I've been running something like

./pebble sync -c 4000 -d 20m -w /mnt/data1/bench -m 1000 --batch 100

on the roachprod machines (4 core, local ssd).

What I found is that the p50 timeseries shows systematically lower latencies when memtable flush pacing is enabled. This difference is quite significant (~40% lower).

To try to find the cause of this unintended improvement, I added a background process which writes 1mb/s to a dummy file, and disabled the memtable flushing. The background process was intended to simulate the constant low flush rate that memtable flush pacing introduced. However, this change did not show any improvement to p50 latencies. This leads me to believe that there is something else going on in the code which lowers p50 latencies when the memtable flush rate is throttled. It could be due to changes in lock contention behavior or something of that sort.

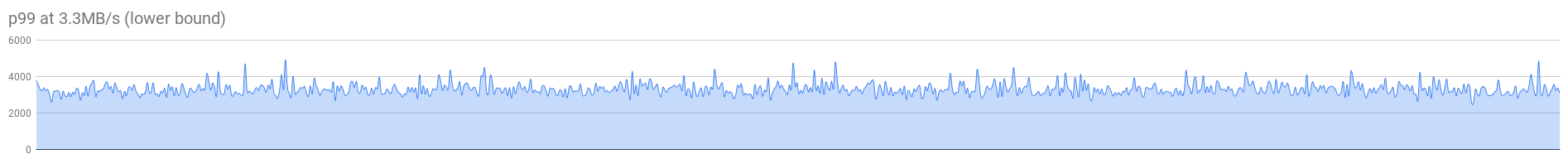

I also ran a benchmark which acts as a lower bound on latencies. This lower bound shows the lowest possible latencies we could produce by introducing the pacing changes. I made this lower bound by limiting the maximum compaction rate to 4MB/s. This causes stalling after a certain point because 4MB/s is a very slow compaction rate. However, we can observe the latencies before stalling happens (or just drop l0 tables when l0_stop_threshold is hit) to compare the theoretical "best" latencies we can achieve by pacing compactions, compared to the actual implementation of the pacing mechanism.

Comparing the latency histograms showed similar results, which means that the actual implementation is fairly close to the best possible implementation we could have of the pacing mechanism.

Comparing the pacing implementation to doing nothing at all, I found that the spikes in latencies when compactions/flushes happen are flattened and dispersed. This was the intended effect of pacing flushes and compactions. However, I found that the average latencies were higher on the paced benchmark compared to doing nothing at all.

For example, the unpaced mechanism showed baseline p95 latencies of around 1.5ms when no flush or compaction are happening, but spikes of up to 4ms when flushes and compactions occur. The paced mechanism showed p95 latencies at around ~2-3ms at all times. The average p95 latencies of the unpaced mechanism was 2.320ms while the paced mechanism was 2.564ms. The results were similar for other latency levels such as p99 and pMax.

I'll be making a comprehensive benchmark document for reference as well, once the implementation is finalized.

Also, I've added back the MinCompactionRate option, since removing it and setting it to match the speed of flushes negatively impacted certain benchmarks at low write rates.

Reviewable status: 0 of 10 files reviewed, 4 unresolved discussions (waiting on @ajkr and @petermattis)

compaction.go, line 1068 at r3 (raw file):

Previously, petermattis (Peter Mattis) wrote…

We don't need to keep compaction debt at zero because doing so may mean we're compacting faster than necessary and having an impact on foreground traffic. So "as low as possible" is slightly inaccurate.

Oops, that was unintentional. It was supposed to say "keep compaction speed as slow as possible".

compaction.go, line 1071 at r3 (raw file):

Previously, petermattis (Peter Mattis) wrote…

Can you provide some justification for this computation? Looking at this now, I could imagine the compaction slowdown threshold as being something like the sum of the target file sizes per level. That is, allow compaction debt that is equal to 1 sstable over the target size for each level. The existing computation here might be better. I haven't thought about it enough.

The sum of the target file sizes per level would be too small.

For example, let's say all the levels are currently full, except for the last level. When the memtable is flushed, the compaction debt increase would be num_levels * (levelRatio+1). If there's 7 levels with a level ratio of 10, the compaction debt would increase by 77 * memtable_size, which is w-amp * memtable_size. If we only count the target file sizes per level, that would be ~7 * memtable_size.

compaction_picker.go, line 73 at r3 (raw file):

Previously, petermattis (Peter Mattis) wrote…

Nit: I'd prefer

s/predicated/estimated/g.

Done.

compaction_picker.go, line 78 at r3 (raw file):

Previously, petermattis (Peter Mattis) wrote…

Since

baseLevelis treated specially, perhaps it is worth pulling it out of the loop as well, and then iterating fromlevel := p.baseLevel+1; level < numLevels - 1; level++. I think that would allow you to get rid ofnextLevelSizeand have each loop iteration do:levelSize := totalSize(p.vers.files[level]) + bytesAddedToNextLevel

We still need to keep nextLevelSize or prevLevelSize to calculate levelRatio. If we decide to use smoothedLevelMultiplier instead, we can remove nextLevelSize.

I've pulled the baseLevel condition out of the loop.

compaction_picker.go, line 92 at r3 (raw file):

Previously, petermattis (Peter Mattis) wrote…

Would it be better to use

smoothedLevelMultiplierhere, rather thanlevelRatio?

I think levelRatio here would give a slightly better estimate than smoothedLevelMultiplier because levelRatio compares the actual sizes of the levels rather than the target sizes of the levels. For example, let's say a level has a "score" of 10 and the next level has a score of 1 and the smoothedLevelMultiplier is 10. This means that the level and the level below it have the same size, which means we should use bytesAddedToNextLevel * (1 + 1), rather than `bytesAddedToNextLevel * (10 + 1).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Reviewable status: 0 of 10 files reviewed, 4 unresolved discussions (waiting on @ajkr, @petermattis, and @Ryanfsdf)

compaction.go, line 1068 at r3 (raw file):

Previously, Ryanfsdf (Ryan Kim) wrote…

Oops, that was unintentional. It was supposed to say "keep compaction speed as slow as possible".

Looks like this wasn't updated (still says "low").

compaction_picker.go, line 73 at r3 (raw file):

Previously, Ryanfsdf (Ryan Kim) wrote…

Done.

I think you forgot to push your updates. Also, I just noticed I said predicated, when I meant predicted.

7ef9249

to

9a6a745

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Reviewable status: 0 of 10 files reviewed, 4 unresolved discussions (waiting on @ajkr and @petermattis)

compaction.go, line 1068 at r3 (raw file):

Previously, petermattis (Peter Mattis) wrote…

Looks like this wasn't updated (still says "low").

I changed compaction debt to compaction speed but forgot to update low to `slow. Fixed now.

compaction_picker.go, line 73 at r3 (raw file):

Previously, petermattis (Peter Mattis) wrote…

I think you forgot to push your updates. Also, I just noticed I said

predicated, when I meantpredicted.

I'm seeing estimated instead of predicted (in revision 4). I just pushed the new changes from updating low to slow so this should update now if it didn't before.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Even if the benchmark numbers are still unclear on AWS, I think this is worth merging. It has the mechanism that we've been pushing for. It will be easier to adjust in follow-on PRs than to continue to tweak in this PR.

Reviewable status: 0 of 10 files reviewed, 1 unresolved discussion (waiting on @ajkr and @Ryanfsdf)

compaction_picker.go, line 73 at r3 (raw file):

Previously, Ryanfsdf (Ryan Kim) wrote…

I'm seeing

estimatedinstead ofpredicted(in revision 4). I just pushed the new changes from updatinglowtoslowso this should update now if it didn't before.

Ack. I misread. Oops.

compaction_picker.go, line 95 at r4 (raw file):

// added to L0. func (p *compactionPicker) estimatedMaxWAmp() float64 { return float64(numLevels - p.baseLevel) * (p.smoothedLevelMultiplier + 1)

This computation only changes when p.baseLevel and p.smoothedLevelMultipler change, which occurs in initLevelMaxBytes. I think you could replace the method with an estimatedMaxWAmp field computed there.

9a6a745

to

c75ce4e

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

TFTR!

Reviewable status: 0 of 10 files reviewed, 1 unresolved discussion (waiting on @ajkr and @petermattis)

compaction_picker.go, line 95 at r4 (raw file):

Previously, petermattis (Peter Mattis) wrote…

This computation only changes when

p.baseLevelandp.smoothedLevelMultiplerchange, which occurs ininitLevelMaxBytes. I think you could replace the method with anestimatedMaxWAmpfield computed there.

Done.

Added a

compactionDebtindicator which estimates the number of bytes which need to be compacted before the LSM tree becomes stable. This value is incremented during memtable flushing and decremented during compactions. This allows us to get a smooth signal on the current compaction debt in the system. If compaction debt is low, compactions are intentionally slowed down to match the speed of memtable flushes. This should free up IO for reads and reduce latency for writes.Closes #7

The compaction debt calculation is similar to RocksDB, with some adjustments to get a smoother signal on compaction debt. In RocksDB, compaction debt for L0 tables is computed only after a certain number of L0 sstables. This made the compaction debt signal jumpy, since L0 table count is jumpy (ex. 0->4, 4->0). This was removed from the implementation to get a smoother signal.