A comprehensive review of deep learning-based single image super-resolution

- Academic Editor

- Ahmed Elazab

- Subject Areas

- Artificial Intelligence, Computer Vision, Data Mining and Machine Learning, Graphics, Multimedia

- Keywords

- Super-resolution, Image super-resolution, Deep learning, Single-image super-resolution (SISR), Convolutional neural networks (CNN), Generative adversarial networks (GAN), Neural networks, Artificial intelligence

- Copyright

- © 2021 Bashir et al.

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2021. A comprehensive review of deep learning-based single image super-resolution. PeerJ Computer Science 7:e621 https://doi.org/10.7717/peerj-cs.621

Abstract

Image super-resolution (SR) is one of the vital image processing methods in computer vision for improving the resolution of an image. In the last two decades, significant progress has been made in the field of super-resolution, especially by utilizing deep learning methods. This survey provides a detailed review of recent progress in single-image super-resolution from the perspective of deep learning, while also describing the classical methods initially used for image super-resolution. The survey classifies image SR methods into four categories, i.e., classical methods, supervised learning-based methods, unsupervised learning-based methods, and domain-specific SR methods. We also introduce the problem of SR to provide intuition about image quality metrics, available reference datasets, and SR challenges. Deep learning-based approaches to SR are evaluated using a reference dataset. Some of the reviewed state-of-the-art image SR methods include the enhanced deep SR network (EDSR), cycle-in-cycle GAN (CinCGAN), multiscale residual network (MSRN), meta residual dense network (Meta-RDN), recurrent back-projection network (RBPN), second-order attention network (SAN), SR feedback network (SRFBN) and the wavelet-based residual attention network (WRAN). Finally, this survey concludes with future directions and trends in SR and open problems for researchers to address.

Introduction

Image-based computer graphics models lack resolution independence (Freeman, Jones & Pasztor, 2002): an image cannot be zoomed beyond its sampling resolution without compromising its quality. This is especially the case for realistic images, for instance, natural photographs. Thus, simple image interpolation blurs the features and edges within a sample image.
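The blurring introduced by simple interpolation can be seen on a one-dimensional step edge. The minimal sketch below is an illustration only (it is not taken from the cited work, and the function names are hypothetical): it upsamples a sharp 0-to-255 edge by a factor of 4, once by nearest-neighbor repetition and once by linear interpolation. Nearest-neighbor keeps only the two original intensities but produces blocky steps, whereas linear interpolation spreads the edge over several intermediate values, i.e., it blurs it.

```python
def upsample_nearest_1d(signal, factor):
    """Nearest-neighbor upsampling: repeat each sample `factor` times."""
    return [v for v in signal for _ in range(factor)]


def upsample_linear_1d(signal, factor):
    """Linear-interpolation upsampling by an integer factor.

    Output sample k is placed at input position k / factor; positions beyond
    the last input sample reuse that sample (edge clamping).
    """
    n = len(signal)
    out = []
    for k in range(n * factor):
        pos = k / factor
        left = min(int(pos), n - 1)
        right = min(left + 1, n - 1)
        frac = pos - left
        out.append((1 - frac) * signal[left] + frac * signal[right])
    return out


edge = [0, 0, 0, 255, 255, 255]          # a sharp step edge
nearest = upsample_nearest_1d(edge, 4)   # still only 0 and 255
linear = upsample_linear_1d(edge, 4)     # the step now ramps gradually
print(linear[8:13])                      # [0.0, 63.75, 127.5, 191.25, 255.0]
```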

The concept of super-resolution was first used by Gerchberg (1974) to improve the resolution of an optical system beyond the diffraction limit. In the past two decades, super-resolution (SR) has been defined as the method of producing high-resolution (HR) images from a corresponding low-resolution (LR) image. Initially, this technique was classified as spatial resolution enhancement (Tsai & Huang, 1984). Applications of super-resolution include computer graphics (Kim, Lee & Lee, 2016a,b; Tao et al., 2017), medical imaging (Bates et al., 2007; Fernández-Suárez & Ting, 2008; Huang et al., 2008; Hamaide et al., 2017; Jurek et al., 2020; Teh et al., 2020; Bashir & Wang, 2021a), and security and surveillance (Zhang et al., 2010; Shamsolmoali et al., 2018; Lee, Kim & Heo, 2020), which shows the importance of this topic in recent years.

Although it has been explored for decades, image super-resolution remains a challenging task in computer vision. The problem is fundamentally ill-posed because, for any given LR image, there can be several HR images with slight variations in camera angle, color, brightness, and other variables. Furthermore, there are fundamental uncertainties between the LR and HR data, since downsampling different HR images may lead to a similar LR image, making this conversion a many-to-one process (Yang & Yang, 2013).

Existing image super-resolution methods can be categorized into single-image super-resolution (SISR) and multiple-image approaches. In single-image SR, learning is performed on a single LR-HR pair for each image, while in multiple-image SR, learning is performed on a large number of LR-HR pairs for a particular scene, thereby enabling the generation of an HR image from a scene captured in multiple images (Kawulok et al., 2020). Video super-resolution deals with multiple successive images (frames) and exploits the relationship between frames to super-resolve a target frame; it is a special type of multiple-image SR in which the images are frames of the same scene (Liu et al., 2020b).

In the past, classical SR methods such as statistical, prediction-based, patch-based, edge-based, and sparse representation methods were used to achieve super-resolution. Recently, however, advances in computational power and big data have led researchers to use deep learning (DL) to address the problem of SR. In the past decade, deep learning-based SR studies have reported better performance than classical methods, and DL methods have been used frequently to achieve SR. Researchers have explored a range of methods for SR, from the first Convolutional Neural Network (CNN)-based method (Dong et al., 2014) to the more recently used Generative Adversarial Networks (GAN) (Ledig et al., 2017). In principle, deep learning-based SR methods differ in design choices such as network architecture, learning strategy, activation functions, and loss functions.

In this study, a brief overview of the classical methods of SR is given first, while the main focus is on the most recent research in SR using deep learning. Previous studies have surveyed the SR literature, but most of them emphasize classical methods (Borman & Stevenson, 1998; Park, Park & Kang, 2003; Van Ouwerkerk, 2006; Yang, Ma & Yang, 2014; Thapa et al., 2016); additionally, Yang, Ma & Yang (2014) and Thapa et al. (2016) used human visual perception to gauge the performance of SR methods.

In recent years, some reviews (Ha et al., 2019; Yang et al., 2019; Zhang et al., 2019c; Zhou & Feng, 2019; Li et al., 2020) have focused on deep learning-based image super-resolution. The study by Yang et al. (2019) focused on deep learning methods for single image super-resolution. Zhang et al. (2019c) limited the scope of image SR to CNN-based methods for space applications, reviewing only four methods, namely SRCNN, FSRCNN, VDSR and DRCN. Ha et al. (2019) reviewed state-of-the-art SISR methods and classified them by type of framework, i.e., CNN-based, RNN-CNN-based, and GAN-based methods. Zhou & Feng (2019) briefly reviewed some state-of-the-art SISR methods and introduced them without any evaluation or comparison, while Li et al. (2020) reviewed state-of-the-art image SR methods with an emphasis on CNN- and GAN-based methods for real-time applications. These review papers did not encompass the domain of super-resolution as a whole; this paper fills that research gap by providing an overview of both classical and deep learning-based methods. We also categorize the deep learning-based methods into subdomains based on their functional blocks, i.e., upsampling methods, SR networks, learning strategies, SR frameworks and other improvements. In this way, the paper provides a comprehensive review in which readers can follow the overall progress of image super-resolution, with dedicated sections on image quality metrics, SR methods, datasets, applications, and challenges in the field of image SR.

This survey is a comprehensive overview of recent advances in SR, emphasizing deep learning-based approaches and their achievements. Tables S1 and S2 list the symbols and acronyms, respectively, used in this study.

The key features of this study are:

- We give a brief overview of the classical methods in SR and their contributions in light of past studies to provide perspective.

- We provide a detailed survey of deep learning-based SR, including the definition of the problem, dataset details, performance evaluation, the deep learning methods used for SR, and the specific applications in which these SR methods were used, together with their performance.

- We compare and contrast recent advances in deep learning-based SR methods, summarizing their limitations by detailing the structural components of each method.

- Finally, we highlight open problems in SR and critical challenges that require further probing, to provide future directions in SR.

This study is organized as follows:

In “Introduction”, we have introduced the concept of SR and given an overview of this study; Fig. 1 summarizes the hierarchical structure of this review. There are four main sections: classical methods, deep learning-based methods, applications of SR, and discussion and future directions. In “Super-Resolution: Definitions and Terminologies”, we put forward the problem definition and details of the evaluation datasets. “Survey Methodology” discusses the methodology for selecting the studies included in this review. In “Conventional Methods of Super-Resolution”, we compare and contrast the classical methods of SR, whereas in “Supervised Super-Resolution”, the SR methods based on supervised deep learning are explored. “Unsupervised Super-Resolution” covers the studies that used unsupervised deep learning-based methods for SR, and in “Domain-Specific Applications of Super-Resolution”, various field-specific applications of SR in recent years are discussed. “Discussion and Future Directions” summarizes open challenges and limitations of current SR methods and puts forward future research directions, while “Conclusion” highlights the conclusions.

Figure 1: Hierarchical classification of this survey.

Four main categories are (a) classical methods of image super-resolution, (b) deep learning-based methods for SR, (c) applications of super-resolution, and (d) future research and directions in SR. Green represents first-level sections, blue represents second-level subsections, and orange represents third-level subsections.

Super-resolution: definitions and terminologies

In this section, the problem definition and the associated concepts of image super-resolution are discussed in light of the literature review.

Single image super-resolution—problem definition

Image SR focuses on recovering an HR image $I_y$ from an LR input image $I_x$. In principle, the LR image can be represented as the output of a degradation function, as shown in (1).

$$I_x = d(I_y;\, \delta) \qquad (1)$$

where $d$ is the SR degradation function responsible for converting the HR image into the LR image, $I_y$ is the input HR image (reference image), and $\delta$ denotes the parameters of the degradation function. Degradation parameters are usually the scaling factor, blur type, and noise. In practice, the degradation process and its parameters are unknown, and only the LR images are available to the SR method for obtaining HR images. The SR process is responsible for predicting the inverse of the degradation function $d$, such that

$$\hat{I}_y = F(I_x;\, \theta) \qquad (2)$$

where $F$ is the SR function, $\theta$ denotes the parameters of $F$, and $\hat{I}_y$ is the estimated HR image corresponding to the input image $I_x$. It is also worth noting that the super-resolution problem in (2) is ill-posed, because the degradation function $d$ is non-injective; thus, there are infinitely many possible $\hat{I}_y$ for which the condition $d(\hat{I}_y;\, \delta) = I_x$ holds.
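The non-injectivity of the degradation function can be made concrete with a toy example. The sketch below assumes simple block averaging as the downsampling operator (a stand-in chosen for illustration, not the bicubic operator discussed later; the helper name is hypothetical) and shows two clearly different 4×4 HR patches, a flat gray patch and a checkerboard texture, that produce exactly the same 2×2 LR patch. An SR method receiving that LR patch has no way to tell which HR patch it came from.

```python
def downsample_box(img, s):
    """Average non-overlapping s x s blocks of a 2D list of pixel values.

    Illustrative stand-in for the downsampling operator; assumes the image
    height and width are divisible by s.
    """
    h, w = len(img), len(img[0])
    return [
        [
            sum(img[r * s + i][c * s + j] for i in range(s) for j in range(s)) / (s * s)
            for c in range(w // s)
        ]
        for r in range(h // s)
    ]


# Two different 4x4 HR patches: a flat gray patch and a checkerboard texture.
hr_flat = [[128] * 4 for _ in range(4)]
hr_checker = [[100 if (r + c) % 2 else 156 for c in range(4)] for r in range(4)]

lr_flat = downsample_box(hr_flat, 2)
lr_checker = downsample_box(hr_checker, 2)
print(hr_flat == hr_checker)   # False: the HR patches differ
print(lr_flat == lr_checker)   # True: yet they map to the same LR patch
```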

The degradation process for the input LR images is unknown, and this process is affected by numerous factors such as sensor-induced noise, artifacts created because of lossy compression, speckle noise, motion blur, and misfocused images. In the literature, most of the studies have used a single downsampling function as the image degradation function:

$$d(I_y;\, \delta) = (I_y)\downarrow_s, \quad \{s\} \subset \delta \qquad (3)$$

where $\downarrow_s$ is the downsampling operator and $s$ is the scaling factor. One of the most frequently used downsampling functions in SR is bicubic interpolation with antialiasing (Shi et al., 2016; Zhang & An, 2017; Shocher, Cohen & Irani, 2018). Some studies, such as Zhang, Zuo & Zhang (2018), include more operations in the degradation function, giving the overall degradation:

$$d(I_y;\, \delta) = (I_y \otimes \kappa)\downarrow_s + n_\varsigma, \quad \{\kappa, s, \varsigma\} \subset \delta \qquad (4)$$

where $I_y \otimes \kappa$ denotes the convolution of the HR image with the blurring kernel $\kappa$, and $n_\varsigma$ represents additive white Gaussian noise with standard deviation $\varsigma$. The degradation function defined in (4) and Fig. 2 is closer to real-world degradation because it considers more parameters than the simple downsampling function (Zhang, Zuo & Zhang, 2018).
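The degradation model in (4) can be simulated in a few lines to synthesize LR training images from HR ones. The sketch below is a minimal pure-Python illustration under stated assumptions: an isotropic Gaussian blur kernel, edge-replicate padding, plain decimation (keeping every s-th pixel) as the downsampling operator, and a seeded Gaussian noise generator. These choices are illustrative and are not the exact settings of Zhang, Zuo & Zhang (2018); the function names are hypothetical.

```python
import math
import random


def gaussian_kernel(size, sigma):
    """Normalized 2D Gaussian blur kernel (an assumed choice of kernel)."""
    half = size // 2
    k = [[math.exp(-((x - half) ** 2 + (y - half) ** 2) / (2 * sigma ** 2))
          for x in range(size)] for y in range(size)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


def blur(img, kernel):
    """Filter a 2D list with `kernel`, replicating edge pixels as padding.

    The Gaussian kernel is symmetric, so this correlation equals convolution.
    """
    h, w = len(img), len(img[0])
    half = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for i, row in enumerate(kernel):
                for j, kv in enumerate(row):
                    rr = min(max(r + i - half, 0), h - 1)
                    cc = min(max(c + j - half, 0), w - 1)
                    acc += kv * img[rr][cc]
            out[r][c] = acc
    return out


def degrade(hr, scale, kernel, noise_std, seed=0):
    """Blur with the kernel, keep every `scale`-th pixel, then add AWGN."""
    rng = random.Random(seed)
    blurred = blur(hr, kernel)
    lr = [row[::scale] for row in blurred[::scale]]  # simple decimation
    return [[v + rng.gauss(0.0, noise_std) for v in row] for row in lr]


hr = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
lr = degrade(hr, scale=2, kernel=gaussian_kernel(5, 1.0), noise_std=2.0)
print(len(lr), len(lr[0]))  # 4 4
```

Setting `noise_std=0.0` and using a 1×1 kernel reduces this sketch to the plain downsampling model of (3) with decimation as the operator.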

Figure 2: Downsampling and upsampling in super-resolution.

Noise is added to simulate realistic degradation within an image.

Finally, the purpose of SR is to minimize the loss function as follows:

$$\hat{\theta} = \underset{\theta}{\arg\min}\; \mathcal{L}(\hat{I}_y, I_y) + \lambda\, \Phi(\theta) \qquad (5)$$

where $\mathcal{L}(\hat{I}_y, I_y)$ is the loss function between the HR image output by SR and the actual HR image, $\lambda$ is the tradeoff parameter, and $\Phi(\theta)$ is the regularization term. The most common loss function used in SR is the pixel-based mean square error (MSE), also referred to as pixel loss. In recent years, researchers have used combinations of various loss functions, which are explored further in later sections. Further mathematical modeling of the SR problem is discussed in Candès & Fernandez-Granda (2014).
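The structure of the objective in (5) can be illustrated with a deliberately tiny example. Everything in the sketch below is hypothetical: the "SR model" is a single global gain applied to the LR pixels, the data term is the pixel MSE mentioned above, and the regularizer is assumed to be L2 weight decay on the parameter. A grid search stands in for gradient-based training, and it shows how a nonzero tradeoff parameter pulls the solution away from the pure data-fit optimum.

```python
def mse(pred, target):
    """Pixel loss: mean squared error between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def objective(theta, lr, hr, lam):
    """Loss(F(I_x; theta), I_y) + lam * Phi(theta) for a toy model.

    Hypothetical model: F(I_x; theta) = theta * I_x (one global gain).
    Assumed regularizer: Phi(theta) = theta ** 2 (L2 weight decay).
    """
    pred = [theta * x for x in lr]
    return mse(pred, hr) + lam * theta ** 2


lr = [1.0, 2.0, 3.0]                       # toy "LR" inputs
hr = [2.0, 4.0, 6.0]                       # toy "HR" targets (exactly 2x)
grid = [i / 100 for i in range(301)]       # candidate theta values in [0, 3]

best_plain = min(grid, key=lambda t: objective(t, lr, hr, lam=0.0))
best_reg = min(grid, key=lambda t: objective(t, lr, hr, lam=1.0))
print(best_plain, best_reg)  # regularization pulls theta below 2.0
```

For this toy case the regularized optimum can also be derived analytically as θ = 28/17 ≈ 1.647, and the grid search returns the nearest grid value, 1.65.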

Methods for quality of SR images

Image quality can be defined in several ways depending on the measurement method; in general, it is a measure of the visual attributes of an image and of how viewers perceive them. Image quality assessment (IQA) methods are divided into subjective methods (based on whether humans perceive an image as natural and of good quality) and objective methods (quantitative methods by which image quality can be computed numerically) (Thung & Raveendran, 2009).

Subjective assessment of the visual aspects of an image is usually a good measure of quality, but it requires more resources, especially if the dataset is large (Wei, Yuan & Cai, 1999); thus, objective methods are more suitable for SR and other computer vision tasks. According to Saad, Bovik & Charrier (2012), IQA methods fall into three categories: full-reference methods that use the reference image, reduced-reference methods that use features extracted from the reference image, and blind (no-reference) IQA with no information about the ground truth. In this section, the IQA methods primarily used in SR are explored further.

Peak signal-to-noise ratio

In signal processing, the peak signal-to-noise ratio (PSNR) measures the maximum possible signal power relative to the noise power; for images, PSNR is commonly used as a quantitative measure of compression quality. In super-resolution, the PSNR of an image is defined by the maximum pixel value and the mean square error (MSE) between the reference image and the SR image, i.e., the power of the image distortion noise. For a given maximum pixel value ($M$), a reference image ($I$) with $t$ pixels, and an SR image ($\hat{I}$), the peak signal-to-noise ratio is defined as:

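$$\mathrm{PSNR} = 10 \log_{10}\!\left(\frac{M^2}{\mathrm{MSE}}\right) \qquad (6)$$

where $M$ is typically 255 for images with 8-bit color depth, and the MSE is given by:

$$\mathrm{MSE} = \frac{1}{t} \sum_{i=1}^{t} \left(I(i) - \hat{I}(i)\right)^2 \qquad (7)$$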

As seen from (6), PSNR depends only on the individual pixel intensity values of the SR and reference images, i.e., it is a pixel-based metric of image quality. In some cases (Almohammad & Ghinea, 2010; Horé & Ziou, 2010; Goyal, Lather & Lather, 2015), this metric can be misleading, as an image with a high PSNR might not be visually similar to the reference image. Nevertheless, it is still widely used for image comparison, especially for comparing the results of new SR algorithms with previously published results.

For color images, the MSE is averaged over the color channels. An alternative approach is to measure PSNR on the luminance (or greyscale) channel only, as the human eye is more sensitive to changes in luminance than to changes in chrominance (Dabov et al., 2006).
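Equations (6) and (7) translate directly into code. The minimal sketch below assumes that both images are given as flattened sequences of pixel values of equal length (for color images, one can flatten all channels together, which averages the MSE over the channels, or pass only the luminance values) and returns infinity for identical images, where the MSE is zero. The function names are illustrative.

```python
import math


def mse(ref, est):
    """Eq. (7): mean squared error over all t pixels (flattened sequences)."""
    if len(ref) != len(est):
        raise ValueError("images must have the same number of pixels")
    return sum((a - b) ** 2 for a, b in zip(ref, est)) / len(ref)


def psnr(ref, est, max_val=255.0):
    """Eq. (6): PSNR in dB; max_val is M (255 for 8-bit images)."""
    err = mse(ref, est)
    if err == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_val ** 2 / err)


ref = [52, 55, 61, 66, 70, 61, 64, 73]   # toy reference pixels
est = [54, 55, 60, 66, 69, 63, 64, 72]   # toy SR output pixels
print(mse(ref, est))                     # 1.375
print(round(psnr(ref, est), 2))
```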

Structural similarity index

Human visual perception is efficient at extracting structural information from an image, but PSNR does not consider the structural composition of the image (Rouse & Hemami, 2008). The structural similarity index metric (SSIM) was proposed by Wang et al. (2004) to measure the structural similarity between images by comparing their luminance, contrast, and structural details.

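For an image $I$ with $t$ pixels, the luminance $\mu_I$ and the contrast $\sigma_I$ are the mean and the standard deviation of the image intensity, given by:

$$\mu_I = \frac{1}{t}\sum_{i=1}^{t} I(i) \qquad (8)$$

$$\sigma_I = \left(\frac{1}{t-1}\sum_{i=1}^{t} \left(I(i) - \mu_I\right)^2\right)^{1/2} \qquad (9)$$

where $I(i)$ denotes the $i$-th pixel of the reference image $I$. The luminance and contrast comparisons between the reference image $I$ and the estimated image $\hat{I}$ are:

$$C_l(I, \hat{I}) = \frac{2\mu_I \mu_{\hat{I}} + \mu_1}{\mu_I^2 + \mu_{\hat{I}}^2 + \mu_1} \qquad (10)$$

$$C_c(I, \hat{I}) = \frac{2\sigma_I \sigma_{\hat{I}} + \mu_2}{\sigma_I^2 + \sigma_{\hat{I}}^2 + \mu_2} \qquad (11)$$

where $\mu_1 = (k_1 M)^2$ and $\mu_2 = (k_2 M)^2$ are constants that ensure numerical stability, with $k_1 \ll 1$ and $k_2 \ll 1$.

The normalized pixel values $(I - \mu_I)/\sigma_I$ represent the image structure, and their inner product is equivalent to the structural similarity between the reference image $I$ and the estimated image $\hat{I}$. The covariance is given by:

$$\sigma_{I\hat{I}} = \frac{1}{t-1}\sum_{i=1}^{t}\left(I(i) - \mu_I\right)\left(\hat{I}(i) - \mu_{\hat{I}}\right) \qquad (12)$$

The structural comparison function is given by:

$$C_s(I, \hat{I}) = \frac{\sigma_{I\hat{I}} + \mu_3}{\sigma_I \sigma_{\hat{I}} + \mu_3} \qquad (13)$$

where $\mu_3$ is a stability constant. The final structural similarity index (SSIM) is given by:

$$\mathrm{SSIM}(I, \hat{I}) = \left[C_l(I, \hat{I})\right]^{\alpha} \left[C_c(I, \hat{I})\right]^{\beta} \left[C_s(I, \hat{I})\right]^{\gamma} \qquad (14)$$

The control parameters $\alpha$, $\beta$, and $\gamma$ can be adjusted to change the relative importance of the luminance, contrast, and structural comparisons in calculating SSIM.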
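The following sketch computes SSIM from (8) to (14), treating the whole image as a single window. This is a simplification: Wang et al. (2004) compute SSIM over local windows and average the resulting map, so values from this sketch will generally differ from standard implementations. The luminance and contrast stability constants are computed as (k1·M)² and (k2·M)² with the commonly quoted defaults k1 = 0.01 and k2 = 0.03, the structural constant μ3 is set to half of the contrast constant, and α = β = γ = 1; all of these are assumptions for illustration, not settings taken from this survey.

```python
import math


def ssim_global(ref, est, max_val=255.0, k1=0.01, k2=0.03,
                alpha=1.0, beta=1.0, gamma=1.0):
    """Single-window SSIM following Eqs. (8)-(14).

    ref, est: flattened pixel sequences of equal length t (t >= 2).
    Assumed constants: (k1*M)^2, (k2*M)^2, and half the latter for mu_3.
    """
    t = len(ref)
    mu_r = sum(ref) / t                                             # Eq. (8)
    mu_e = sum(est) / t
    sd_r = math.sqrt(sum((x - mu_r) ** 2 for x in ref) / (t - 1))   # Eq. (9)
    sd_e = math.sqrt(sum((y - mu_e) ** 2 for y in est) / (t - 1))
    cov = sum((x - mu_r) * (y - mu_e) for x, y in zip(ref, est)) / (t - 1)  # Eq. (12)

    c1 = (k1 * max_val) ** 2   # luminance stability constant
    c2 = (k2 * max_val) ** 2   # contrast stability constant
    c3 = c2 / 2                # structural constant (mu_3), assumed choice

    lum = (2 * mu_r * mu_e + c1) / (mu_r ** 2 + mu_e ** 2 + c1)     # Eq. (10)
    con = (2 * sd_r * sd_e + c2) / (sd_r ** 2 + sd_e ** 2 + c2)     # Eq. (11)
    struct = (cov + c3) / (sd_r * sd_e + c3)                         # Eq. (13)
    return (lum ** alpha) * (con ** beta) * (struct ** gamma)       # Eq. (14)


ref = [10, 20, 30, 40, 50, 60, 70, 80]
print(ssim_global(ref, ref))                      # identical images
print(ssim_global(ref, [v + 5 for v in ref]))     # uniform brightness shift
print(ssim_global(ref, list(reversed(ref))))      # inverted structure
```

With the default exponents of 1, a negative structural term (anti-correlated images) yields a negative SSIM, as the last line shows.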

Conventionally, PSNR is used for evaluation in computer vision tasks, but SSIM is based on the human perception of structural information within an image, and thus it is widely used for comparing the structural similarity between images (Blau & Michaeli, 2018; Sara, Akter & Uddin, 2019). In medical images, where the variance or luminance of the reference images is low, SSIM can be very unstable and report misleading results; however, this is not the case for natural images (Pambrun & Noumeir, 2015).

Opinion scoring

Opinion scoring is a qualitative method, which lies in the subjective category of IQA. In this method, the quality testers are asked to grade the quality of images based on specific criteria, e.g., sharpness, natural look, and color, where the final graded score is the mean of the rated scores.

This method has limitations such as non-linearity between the scores, variation in results due to changes in test criteria, and human error. In SR, certain methods have reported good objective quality scores but scored poorly in subjective results, especially in human face reconstruction (Ledig et al., 2017; Nasrollahi & Moeslund, 2014; Chen et al., 2018b). Thus, the opinion scoring method is also used in studies (Wei, Yuan & Cai, 1999; Deng, 2018; Ravì et al., 2018, 2019; Vasu, Thekke Madam & Rajagopalan, 2019) to measure the quality of human perception.
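Computing a mean opinion score (MOS) from individual ratings is simple arithmetic. The sketch below also reports an approximate 95% confidence half-width, so that a difference between two methods can be judged against the disagreement among raters. The 1-5 rating scale, the ratings themselves, and the normal-approximation interval are assumptions made for illustration.

```python
import math
import statistics


def mean_opinion_score(ratings):
    """Return the MOS and an approximate 95% confidence half-width.

    ratings: scores from individual raters (assumed 1-5 scale).
    The 1.96 * s / sqrt(n) interval is a normal approximation (assumption).
    """
    mos = statistics.mean(ratings)
    half_width = 1.96 * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mos, half_width


# Hypothetical ratings from eight raters for two SR outputs of the same image.
scores = {
    "method_a": [4, 5, 4, 4, 3, 5, 4, 4],
    "method_b": [3, 3, 2, 4, 3, 3, 2, 3],
}
for name, r in scores.items():
    mos, ci = mean_opinion_score(r)
    print(f"{name}: MOS = {mos:.2f} +/- {ci:.2f}")
```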

Perceptual quality

Opinion scoring relies on human raters to evaluate images manually; while it can provide accurate results as far as human perception is concerned, it requires many resources, especially for large datasets (Viswanathan & Viswanathan, 2005). Initially, Kim & Lee (2017) proposed a CNN-based full-reference image quality assessment (FR-IQA) model, called DeepQA, that learned human behavior from an IQA database containing distorted images, subjective scores, and error maps.

In Ma et al. (2017a), the authors trained on quality-discriminable image pairs (DIP), and the resulting system was called dipQA (DIP inferred quality index); they used a RankNet learning-to-rank (L2R) algorithm to learn a blind IQA model. Ma et al. (2018) proposed a multi-task end-to-end optimized deep neural network (MEON), which is trained in two stages: in the first stage, a distortion-type identification network is trained on large existing datasets, and in the second stage, the output of the first stage is used to train the quality assessment network using stochastic gradient descent. Talebi & Milanfar (2018) used a CNN to develop a no-reference IQA method known as NIMA, which was trained on pixel-level and aesthetic quality datasets.

RankIQA (Liu, Van De Weijer & Bagdanov, 2017) trained a Siamese network to rank images by quality using datasets with known image distortions, and the learned CNN was then used for IQA; this method even outperformed full-reference methods without using the reference image. The IQA method proposed in Bosse et al. (2018) used ten convolution layers and five pooling layers for feature extraction and two fully connected layers for regression; it performed well for both no-reference and full-reference IQA.
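RankIQA-style training relies on image pairs whose quality order is known because one image is more heavily distorted than the other. A common way to train a Siamese scorer on such pairs is a pairwise hinge (margin ranking) loss, which penalizes the network whenever the less-distorted image does not score higher by at least a margin. The sketch below shows that loss on hypothetical network scores; it illustrates the idea rather than reproducing the training code of Liu, Van De Weijer & Bagdanov (2017), and the margin of 1.0 is an assumption.

```python
def margin_ranking_loss(score_better, score_worse, margin=1.0):
    """Hinge loss for one image pair whose quality order is known.

    Zero only if the less-distorted image scores at least `margin`
    higher than the more-distorted one (margin is an assumed value).
    """
    return max(0.0, score_worse - score_better + margin)


def batch_ranking_loss(pairs, margin=1.0):
    """Average the hinge loss over (score_better, score_worse) pairs."""
    return sum(margin_ranking_loss(b, w, margin) for b, w in pairs) / len(pairs)


# Hypothetical scores for three pairs: (lightly distorted, heavily distorted).
pairs = [(3.2, 1.0), (2.5, 2.0), (1.0, 1.8)]
print(batch_ranking_loss(pairs))  # only the last two pairs contribute
```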

Although opinion scoring and perceptual quality-based methods do reflect human perception in IQA, which kind of quality is required remains an open question (i.e., whether we want images that look more natural or images that are more similar to the reference image); thus, PSNR and SSIM remain the primary methods in computer vision and SR.

Task-based evaluation

Although the primary purpose of image SR is to achieve better resolution, as mentioned earlier, SR is also helpful in other computer vision tasks (Kim, Lee & Lee, 2016b; Tao et al., 2017; Teh et al., 2020; Liu et al., 2018a). The performance achieved in these tasks can indirectly measure the performance of the SR methods used in them. In the case of medical images, for example, researchers used both the original and the SR-reconstructed images to compare performance in the training and prediction phases. In general, computer vision tasks such as classification (Krizhevsky, Sutskever & Hinton, 2012; Cai et al., 2019), face recognition (Nasrollahi & Moeslund, 2014; Liu et al., 2015; Chen et al., 2018b), and object segmentation (Martin et al., 2001; Lin et al., 2014; Wang et al., 2018b) can be performed on SR images, and their performance can be used as a metric to assess the SR method.

Miscellaneous IQA methods

The development of IQA methods is an open field; in recent years, various researchers have proposed SR metrics, but these have not been widely adopted by the SR community. The feature similarity (FSIM) index (Zhang et al., 2011) evaluates image quality using features that the human visual system relies on, based on gradient magnitude and phase congruency. Multi-scale structural similarity (MS-SSIM) (Wang, Simoncelli & Bovik, 2003) computes similarity at multiple scales to incorporate variations in viewing conditions, and its authors argued that it provides more flexibility in measuring image quality than single-scale SSIM. Li & Bovik (2010) claimed that SSIM and MS-SSIM do not perform well on distorted and noisy images; thus, they used a four-component weighted method that adjusted the weights of the scores based on local features. Similarly, Yao & Liu (2018) showed that SSIM does not perform well on contrast-distorted images, such as those in the TID2013 and CSIQ datasets.

According to Blau & Michaeli (2018), perceptual quality and image distortion are at odds with each other: beyond a certain point, decreasing the distortion necessarily worsens the perceptual quality. Thus, accurately measuring SR image quality is still an open area of research.

Table 1 compares the image quality assessment metrics for super-resolution. The choice of metric depends on the requirements of each method; most methods use PSNR and SSIM to evaluate performance because these are quantitative methods.

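| Method | Strengths | Weaknesses |
|---|---|---|
| PSNR | Simple, quantitative pixel-based metric; widely used to compare new SR results with previously published results | Can be misleading, as an image with a high PSNR may not be visually similar to the reference image |
| SSIM | Compares luminance, contrast, and structure, in line with the human perception of structural information | Can be unstable when the variance or luminance of the reference image is low (e.g., medical images) |
| Opinion Scoring | Directly reflects human perception | Resource-intensive for large datasets; non-linearity between scores, sensitivity to test criteria, and human error |
| Perceptual Quality | Approximates human judgment without human raters | Must be learned from IQA datasets; which kind of quality is desired remains an open question |
| Task-based Evaluation | Measures the usefulness of SR for downstream tasks such as classification, face recognition, and segmentation | Only an indirect measure of SR performance |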

Operating color channels

Most datasets use the RGB color space, so SR methods mostly operate on RGB images, although the YCbCr space is also used in SR (Dong et al., 2016). In YCbCr, Y is the luminance component, which represents light intensity, while Cb and Cr are the chrominance components (i.e., the blue-difference and red-difference chroma channels) (Shaik et al., 2015). In recent years, most SR challenges and datasets have used the RGB color space, which limits the comparability of YCbCr-based results with the state of the art. Furthermore, PSNR-based IQA results vary if the color space used in the testing stage differs from that used in the training/evaluation stage.
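When PSNR or SSIM is reported on the luminance channel, the RGB image must first be converted to YCbCr. The sketch below assumes 8-bit RGB input and uses the ITU-R BT.601 coefficients with studio-swing offsets (the same coefficients that MATLAB's rgb2ycbcr applies); other conversions, such as full-range JPEG YCbCr, give different Y values and therefore different PSNR figures, which is one reason results computed in different color spaces are not directly comparable. The helper names are hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to YCbCr with ITU-R BT.601 coefficients.

    For inputs in [0, 255], Y lies in [16, 235] and Cb, Cr in [16, 240].
    """
    y = 16 + (65.481 * r + 128.553 * g + 24.966 * b) / 255
    cb = 128 + (-37.797 * r - 74.203 * g + 112.0 * b) / 255
    cr = 128 + (112.0 * r - 93.786 * g - 18.214 * b) / 255
    return y, cb, cr


def luminance_channel(pixels):
    """Extract the Y channel from a list of (R, G, B) tuples."""
    return [rgb_to_ycbcr(*p)[0] for p in pixels]


print(rgb_to_ycbcr(0, 0, 0))        # black: (16.0, 128.0, 128.0)
print(rgb_to_ycbcr(255, 255, 255))  # white: Y close to 235, Cb and Cr close to 128
```

The resulting Y values can be passed to a PSNR routine in place of the full RGB data.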

Details of the reference dataset

The datasets used to evaluate SR algorithms are summarized in this section; they vary in the total number of images, image resolution, quality, and imaging hardware setup. A few of the datasets comprise paired LR-HR images for training and testing SR algorithms. The rest include only HR images, and the corresponding LR images are usually generated by bicubic interpolation with antialiasing, as in Shi et al. (2016), Zhang & An (2017) and Shocher, Cohen & Irani (2018), typically using the MATLAB function imresize(I, scale), whose default method is bicubic interpolation with antialiasing and where scale is the resizing factor (e.g., 1/4 for ×4 downsampling).

Table 2 comprises a list of datasets frequently used in SR and information on total image count, image format, pixel count, HR resolution, type of dataset, and classes of images.

| Name | Number of images/pairs | Image format | Type | Resolution | Details of images |
|---|---|---|---|---|---|
| BSD100 (Martin et al., 2001) | 100 | PNG | Unpaired | (480, 320) | 100 images of animals, people, buildings, scenic views, etc. |
| BSDS300 (Martin et al., 2001) | 300 | JPG | Unpaired | (430, 370) | 300 images of animals, people, buildings, scenic views, plants, etc. |
| BSDS500 (Arbeláez et al., 2010) | 500 | JPG | Unpaired | (430, 370) | Extended version of BSDS300 with an additional 200 images |
| CelebA (Liu et al., 2015) | 202,599 | PNG | Unpaired | (2048, 1024) | Over 40 attribute-defined categories of celebrities |
| DIV2K (Agustsson & Timofte, 2017) | 1,000 | PNG | Paired | (2048, 1024) | Objects, people, animals, scenery, nature |
| Manga109 (Fujimoto et al., 2016) | 109 | PNG | Unpaired | (800, 1150) | 109 manga volumes drawn by professional manga artists in Japan |
| MS-COCO (Lin et al., 2014) | 164,000 | JPG | Unpaired | (640, 480) | Labeled objects with over 80 object categories |
| OutdoorScene (Wang et al., 2018b) | 10,624 | PNG | Unpaired | (550, 450) | Outdoor scenes including plants, animals, sceneries, water reservoirs, etc. |
| PIRM (Blau et al., 2018) | 200 | PNG | Unpaired | (600, 500) | Sceneries, people, flowers, etc. |
| Set14 (Zeyde, Elad & Protter, 2012) | 14 | PNG | Unpaired | (500, 450) | Faces, animals, flowers, animated characters, insects, etc. |
| Set5 (Bevilacqua et al., 2012) | 5 | PNG | Unpaired | (300, 340) | Only 5 images: butterfly, baby, bird, head, and woman |
| T91 (Yang et al., 2010) | 91 | PNG | Unpaired | (250, 200) | 91 images of fruits, cars, faces, etc. |
| Urban100 (Huang, Singh & Ahuja, 2015) | 100 | PNG | Unpaired | (1000, 800) | Urban buildings, architecture |
| VOC2012 (Everingham et al., 2014) | 11,530 | JPG | Unpaired | (500, 400) | Labeled objects with over 20 classes |

Most SR datasets are unpaired, and the LR images are generated at various scale factors using bicubic interpolation with antialiasing. Besides the datasets in Table 2, datasets such as General-100 (Dong, Loy & Tang, 2016), L20 (Timofte, Rothe & Van Gool, 2016) and ImageNet (Deng et al., 2009) are also used in computer vision tasks. Recently, researchers have preferred to use multiple datasets for training/evaluation and testing of SR models; for instance, Bashir & Ghouri (2014), Lai et al. (2017), Sajjadi, Scholkopf & Hirsch (2017) and Tong et al. (2017) used Set5, Set14, BSD100 and Urban100 for training and testing.

Super-resolution challenges

The most prominent SR challenges, NTIRE (Agustsson & Timofte, 2017; Timofte et al., 2017) and PIRM (Blau et al., 2018), are discussed in this section.

The New Trends in Image Restoration and Enhancement (NTIRE) challenge (Agustsson & Timofte, 2017; Timofte et al., 2017) was held in conjunction with the Conference on Computer Vision and Pattern Recognition (CVPR). NTIRE includes various challenges, such as colorization, image denoising, and SR. For SR, the DIV2K dataset (Agustsson & Timofte, 2017) was used, which includes bicubic-downscaled image pairs and blind images with realistic but unknown degradation. This dataset has been widely used to evaluate SR methods under known and unknown degradation conditions and to compare them against state-of-the-art methods.

The Perceptual Image Restoration and Manipulation (PIRM) challenges were held in conjunction with the European Conference on Computer Vision (ECCV) and, like NTIRE, contained multiple challenges. Apart from the three challenges mentioned for NTIRE, PIRM also focused on SR for smartphones and compared perceptual quality with generation accuracy (Blau et al., 2018). As noted by Blau & Michaeli (2018), models that focus on distortion often produce visually unpleasant SR images, while models that focus on perceptual quality do not perform well in terms of information fidelity. Using the image quality metrics NIQE (Mittal, Soundararajan & Bovik, 2013) and the score of Ma et al. (2017b), the method that performed best in achieving perceptual quality (Blau & Michaeli, 2018) was declared the winner. In contrast, in a sub-challenge (Ignatov et al., 2018b), SR methods were evaluated under limited computational resources to assess SR performance on smartphones, using PSNR, MS-SSIM, and opinion scoring. Thus, PIRM encouraged researchers to explore the perception-distortion tradeoff and SR for smartphones.
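The PIRM 2018 perceptual SR track combined the two no-reference metrics into a single perceptual index, PI = ((10 − Ma) + NIQE) / 2, where a lower value indicates better perceptual quality (Blau et al., 2018). The short sketch below only evaluates this formula for hypothetical metric values; it does not compute NIQE or the Ma et al. score themselves, which require their original trained models.

```python
def perceptual_index(ma_score, niqe_score):
    """PIRM 2018 perceptual index: ((10 - Ma) + NIQE) / 2; lower is better.

    ma_score: Ma et al. score (higher means better perceived quality).
    niqe_score: NIQE score (lower means more natural-looking).
    """
    return 0.5 * ((10.0 - ma_score) + niqe_score)


# Hypothetical metric values for two SR outputs of the same image.
print(perceptual_index(ma_score=8.0, niqe_score=4.0))  # 3.0
print(perceptual_index(ma_score=6.5, niqe_score=5.5))  # 4.5
```

Under this index, the first hypothetical output would be ranked as perceptually better than the second.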

Survey methodology

Most of the studies included in this review are peer-reviewed publications, including conference proceedings and journal papers, to ensure the validity of the methods. The included papers comprise early-access and published versions of recent super-resolution papers from 2008 to 2021. However, some earlier papers, particularly initial papers on image SR and papers on classical methods, were also included to provide background on the classical methods developed before deep learning-based methods came to dominate the field. Google Scholar, IEEE Xplore, and Science Direct were queried to collect the initial list of papers. The databases were searched with specific keywords, and papers were selected based on their abstracts. Further papers were selected from the reference sections of the selected papers, as these contain additional relevant studies in image super-resolution. The last query was made on May 08, 2021. The collected papers were grouped by their relevance to each section; for example, papers on supervised learning were grouped for review in “Supervised Super-Resolution”, and studies highlighting applications of SR methods were grouped for discussion in “Domain-Specific Applications of Super-Resolution”.

The relevant search terms include image super-resolution, super-resolution, deep learning super-resolution, convolutional neural networks, image upsampling methods, super-resolution frameworks, supervised super-resolution, unsupervised super-resolution, super-resolution review, image interpolation, pixel-based methods, super-resolution application, assisted diagnosis using deep learning. The search keywords were not limited to single-image SR because our target was to report other aspects of super-resolution, including classical methods, applications, and datasets for SR.

Logical operators and wildcards were used to combine the keywords further and perform the additional search. Initial screening of the collected papers was performed following the inclusion/exclusion criteria shown in Table 3. The whole process is graphically shown in Fig. 3, where 653 studies were collected over one year. A total of 242 studies were included from the initial 653 collected research studies.

| Section | Inclusion | Exclusion |
|---|---|---|
| Introduction | Methods that defined image interpolation and performed some practical form of image interpolation, i.e., super-resolution | |
| Classical Methods | Methods that performed pixel, neighborhood, or any classical image interpolation | |
| Deep learning-based methods | Development of image super-resolution using deep learning methods, including review papers | |
| Applications | Direct applications of super-resolution methods in the six fields defined in “Domain-Specific Applications of Super-Resolution” were included | |

Figure 3: Methodology for the collection of studies.

Sample details based on inclusion/exclusion criteria defined in Table 3.

Conventional methods of super-resolution

Classical methods of SR are briefly discussed in this section to encompass the overall development cycle of the SR. The classical methods include prediction-based, edge-based, statistical, patch-based and sparse representation methods.

The earliest methods were based on prediction. The first method (Duchon, 1979) was based on Lanczos filtering, which filtered digital data using sigma factors (with a modifiable weight function), and a similar frequency-domain filtering approach was used by Tsai & Huang (1984) for image resampling. In contrast, cubic convolution (Keys, 1981) was used to resample image data, and the results showed that this prediction method was more accurate than nearest-neighbor prediction and linear interpolation of image data (Parker, Kenyon & Troxel, 1983). Tsai & Huang (1984) did not consider blur in the imaging process, whereas Irani & Peleg (1991) used knowledge of the imaging process and the relative displacements for image interpolation; when the sampling rate is kept constant, their method reduces to deblurring.
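To make the cubic convolution idea concrete, the following is a minimal 1D sketch. It assumes the piecewise-cubic kernel of Keys (1981) with the commonly used parameter a = -0.5 and replicates border samples; both choices, and the function names, are assumptions of this example rather than details given in the text.

```python
import numpy as np

def keys_kernel(x, a=-0.5):
    """Piecewise-cubic interpolation kernel of Keys (1981); a = -0.5 assumed."""
    x = np.abs(x)
    out = np.zeros_like(x, dtype=float)
    near = x <= 1
    far = (x > 1) & (x < 2)
    out[near] = (a + 2) * x[near] ** 3 - (a + 3) * x[near] ** 2 + 1
    out[far] = a * x[far] ** 3 - 5 * a * x[far] ** 2 + 8 * a * x[far] - 4 * a
    return out

def cubic_upsample_1d(signal, scale):
    """Upsample a 1D signal by an integer factor using the four nearest samples."""
    signal = np.asarray(signal, dtype=float)
    n = len(signal)
    out_pos = np.arange(n * scale) / scale          # output positions in input coordinates
    base = np.floor(out_pos).astype(int)
    result = np.zeros(n * scale)
    for offset in (-1, 0, 1, 2):                    # kernel support covers 4 taps
        idx = base + offset
        weights = keys_kernel(out_pos - idx)
        result += weights * signal[np.clip(idx, 0, n - 1)]  # replicate border samples
    return result

up = cubic_upsample_1d([0, 1, 2, 3, 4, 5], 2)
```

Because the kernel equals 1 at zero and 0 at every other integer, the original samples are passed through unchanged, and in the interior a linear ramp is reproduced exactly (for example, the value halfway between 2 and 3 is 2.5).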

A patch-based approach was used by Freeman, Jones & Pasztor (2002): patches extracted from a training set served as training patterns, and their texture information helped generate detailed high-frequency images. Chang, Yeung & Xiong (2004) used locally linear embedding of local patches to generate high-resolution images based on local patch features. In contrast, Glasner, Bagon & Irani (2009) used the recurrence of geometrically similar patches in natural images to select the best possible pixel value based on patch redundancy within and across scales. Baker & Kanade (2002) introduced the concept of hallucination, in which local features are first extracted from the LR image and then used to map the HR image.

Edge-based methods use edge-smoothness priors to upsample images. Sun, Xu & Shum (2008) used a generic image prior, the gradient profile prior, to smooth the edges within an image and thereby achieve super-resolution of natural images. Freedman & Fattal (2011) used specially designed filters to search for similar patches based on the local self-similarity observation, which reduced the nearest-patch search computation; this method reconstructs realistic-looking edges but performs poorly in cluttered regions with fine details.

Statistical methods have also been used for image super-resolution: Kim & Kwon (2010) used kernel ridge regression (KRR) with gradient descent to learn the mapping function from example image pairs. Xiong, Sun & Wu (2010) used adaptive regularization to supervise the energy change during the iterative image resampling process; the energy map limited the energy change per iteration, which reduced noise while maintaining perceptual quality and gave more accurate results. Yang et al. (2008) and Yang et al. (2010) used sparse representation methods, based on the concept of compressed sensing, to perform image super-resolution.

The robust SR method proposed by Zomet, Rav-Acha & Peleg (2001) used information about outliers to improve SR performance in patches where other methods introduce noise due to these outliers. Additionally, Yang et al. (2007) proposed a post-processing model that enhanced the resolution of a set of images using a single reference image, with scaling factors of up to 100x. Another way to achieve SR is to reconstruct a single HR image from multiple LR images (Tipping & Bishop, 2003). Conventional upsampling methods, such as interpolation, use only the information within the LR image to generate HR images and do not add any new information (Farsiu et al., 2004a). They also introduce inherent problems such as noise amplification and increased blur. Thus, in recent years, researchers have shifted to the learning-based upsampling methods explored in “Supervised Super-Resolution”.

Supervised super-resolution

Various deep learning methods have been developed over the years to solve the SR problem; the models discussed in this section are trained using pairs of low- and high-resolution images (LR–HR pairs). Although supervised SR models differ significantly, they can be classified by their components: the upsampling method, the deep learning network, the learning strategy, and the model framework. Any supervised image SR model combines these components, and in this section we summarize the methods employed for each of the four components in light of recent supervised image SR studies.

The component-based review of various methods is performed in this section, and the basic overview of the models is shown in Fig. 1.

Upsampling methods

Upsampling is an essential part of deep learning-based SR methods: both its position in the network and the method used to perform it significantly affect the training and test performance of the model. Several commonly used methods (Yang et al., 2010, 2008; Lee, Yang & Oh, 2015; Timofte, De Smet & Van Gool, 2015) use conventional CNNs for end-to-end learning. In this subsection, various deep learning-based upsampling layers are discussed.

As mentioned in “Conventional Methods of Super-Resolution”, interpolation-based upsampling methods do not add any new information; hence, learning-based methods have been used for image SR over the last decade.

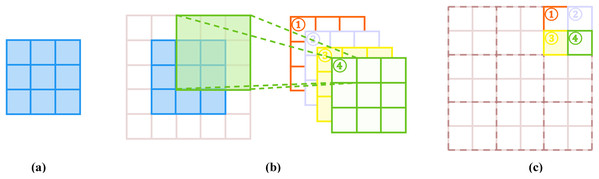

Sub-pixel layer

The sub-pixel layer (Shi et al., 2016) is an end-to-end learnable upsampling layer: it generates additional channels using convolution and then reshapes these channels to perform upsampling, as shown in Fig. 4. Given an input of size $h \times w \times c$, where $h$ is the height, $w$ is the width, and $c$ is the number of color channels, a convolution is applied to produce $s^2 c$ channels, where $s$ is the scaling factor, giving a result of size $h \times w \times s^2 c$ (Fig. 4B). A reshuffling operation (Shi et al., 2016) then rearranges these channels into the final output image of size $sh \times sw \times c$ (Fig. 4C). Because it is learned end to end, this layer is frequently used in SR models (Ledig et al., 2017; Zhang, Zuo & Zhang, 2018; Ahn, Kang & Sohn, 2018a; Zhang et al., 2018b).
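The reshuffling step can be sketched in a few lines of NumPy. This is a minimal illustration, assuming a channel-first $(s^2 c, h, w)$ layout and the channel ordering used by common frameworks (e.g., PyTorch's `torch.nn.PixelShuffle`); the preceding convolution that produces the $s^2 c$ channels is assumed and not shown.

```python
import numpy as np

def pixel_shuffle(x, s):
    """Rearrange a (s*s*c, h, w) array into a (c, s*h, s*w) array."""
    channels, h, w = x.shape
    if channels % (s * s) != 0:
        raise ValueError("channel count must be divisible by s*s")
    c = channels // (s * s)
    # split the channel axis into (c, sub-row i, sub-column j)
    x = x.reshape(c, s, s, h, w)
    # interleave: output[c, h*s + i, w*s + j] = input[c*s*s + i*s + j, h, w]
    x = x.transpose(0, 3, 1, 4, 2)
    return x.reshape(c, h * s, w * s)

# four 1x1 channels become one 2x2 image
print(pixel_shuffle(np.arange(4.0).reshape(4, 1, 1), 2)[0])
```

No values are created or discarded: each output pixel is copied from exactly one input channel, which is why all of the learning happens in the convolution before the shuffle.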

Figure 4: Sub-pixel layer. Blue color represents the input convolution, and output feature maps are represented in other colors.

(A) Input. (B) Convolution. (C) Reshaping.

This layer has a wide receptive field, which helps it learn more contextual information and generate realistic details; however, because its receptive field is distributed unevenly, it may generate false artifacts at the boundaries of complex patterns. Furthermore, predicting neighboring pixels block by block sometimes produces unsmooth outputs that look unrealistic compared with the true HR image. To address this issue, PixelTCL (Gao et al., 2020) introduced an interdependent prediction layer that uses the information of interlinked pixels during upsampling, producing smoother and more realistic results when compared with the ground-truth image.

Deconvolution layer

The deconvolution layer, also referred to as the transposed convolution layer (Zeiler et al., 2010), is the converse of convolution, i.e., it predicts the probable input HR image from the feature maps of the LR image. In this process, zeros are inserted to increase the resolution, and convolution is then performed. For instance, for a scaling factor of 2 and a $3 \times 3$ convolution kernel (as shown in Figs. 5A, 5B and 5C), the input LR image is first expanded to twice its size by inserting zeros, and convolution with the kernel is then performed using a stride of 1 and padding of 1.
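The zero-insertion view of the deconvolution layer can be sketched as follows for a scaling factor of 2, a $3 \times 3$ kernel, stride 1 and padding 1. The kernel values here are hypothetical (a bilinear-style kernel chosen so the result is easy to check); in an SR network they would be learned. As in CNN frameworks, the "convolution" is implemented as cross-correlation.

```python
import numpy as np

def zero_insert_upsample(x, kernel):
    """2x transposed-convolution sketch: insert zeros, pad by 1, apply a 3x3 kernel."""
    h, w = x.shape
    up = np.zeros((2 * h, 2 * w))
    up[::2, ::2] = x                      # expansion: every other pixel is a zero
    padded = np.pad(up, 1)                # zero padding of 1 on each side
    out = np.zeros_like(up)
    for i in range(2 * h):                # stride-1 sliding window
        for j in range(2 * w):
            out[i, j] = np.sum(padded[i:i + 3, j:j + 3] * kernel)
    return out

x = np.array([[1.0, 3.0], [5.0, 7.0]])
k = np.array([[0.25, 0.5, 0.25], [0.5, 1.0, 0.5], [0.25, 0.5, 0.25]])
out = zero_insert_upsample(x, k)
```

With this particular kernel, the original pixels are kept at even positions, pixels between two horizontal neighbors become their average (out[0, 1] is 2.0), and the center of each 2x2 group becomes the mean of its four neighbors (out[1, 1] is 4.0).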

Figure 5: Deconvolution layer. The blue color represents the input, and the green color represents the convolution operation.

(A) Input. (B) Expansion. (C) Convolution.

The deconvolution layer is widely used in SR methods (Sroubek, Cristobal & Flusser, 2008; Hugelier et al., 2016; Lam et al., 2017; Tong et al., 2017; Haris, Shakhnarovich & Ukita, 2018), as it generates HR images end to end and is compatible with vanilla convolution. However, as noted by Odena, Dumoulin & Olah (2017), this layer can cause uneven overlapping in the generated HR image, which replicates patterns in a checkerboard-like format; the result may look unrealistic, thereby decreasing the performance of the SR method.
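The uneven overlap can be seen by counting how many input pixels contribute to each output position. The 1D sketch below, a simplification assuming no padding, shows that a kernel of size 3 with stride 2 gives an alternating 1, 2 pattern in the interior, whereas a kernel of size 4 (divisible by the stride) covers the interior evenly; this is the situation discussed by Odena, Dumoulin & Olah (2017).

```python
def overlap_counts(n_in, kernel_size, stride):
    """Number of input pixels contributing to each output of a 1D transposed convolution."""
    n_out = (n_in - 1) * stride + kernel_size
    counts = [0] * n_out
    for i in range(n_in):
        # input pixel i "stamps" the kernel starting at output position i * stride
        for t in range(kernel_size):
            counts[i * stride + t] += 1
    return counts

print(overlap_counts(4, 3, 2))  # uneven: interior alternates between 1 and 2
print(overlap_counts(4, 4, 2))  # even: interior is covered twice everywhere
```

In 2D the same alternation occurs along both axes, which produces the checkerboard pattern.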

Meta upscaling

In the previously mentioned methods, the scaling factor is predefined, so a separate upsampling module must be trained for each factor, which is often inefficient and does not match the actual requirements of an SR method. The meta upscaling module (Hu et al., 2019) was proposed to generate SR images for arbitrary scaling factors based on meta-learning. The module projects every position in the required HR image to a small $j \times j \times c_i$ patch in the given LR feature map, where $j$ is arbitrary and $c_i$ is the total number of channels in the extracted feature map (64 in Hu et al., 2019). Additionally, it generates convolution weights of size $j \times j \times c_i \times c_o$, where $c_o$ is the number of output image channels, usually 3. Thus, the meta upscaling module handles arbitrary scaling factors within a single model, and with a substantial training set a large number of factors can be trained simultaneously. The performance of this layer even surpasses that of fixed-factor models, and although the module predicts the weights at inference time, the time spent on weight prediction is about 100 times less than the time required for feature extraction (Hu et al., 2019). Where larger magnifications are needed, however, this module may become unstable because it predicts the convolution weights for each pixel independently of the image information within those pixels.
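The per-pixel weight prediction can be sketched as below. This is a hedged illustration, not the Meta-SR implementation: it assumes the weight-prediction network takes the offset vector $(i/r - \lfloor i/r \rfloor,\ j/r - \lfloor j/r \rfloor,\ 1/r)$ (our reading of Hu et al., 2019), uses a small randomly initialized two-layer MLP in place of the trained one, and uses `k` for the patch size called $j$ in the text. The names `meta_upscale`, `hidden`, and `seed` are hypothetical.

```python
import math
import numpy as np

def meta_upscale(features, scale, c_out=3, k=3, hidden=16, seed=0):
    """Upscale a (c_in, h, w) LR feature map by an arbitrary (even non-integer) factor.

    The weight-prediction MLP is randomly initialized here; in Meta-SR it is trained end to end.
    """
    rng = np.random.default_rng(seed)
    c_in, h, w = features.shape
    out_h, out_w = math.floor(h * scale), math.floor(w * scale)
    # hypothetical MLP: 3-d offset/scale vector -> k*k*c_in*c_out filter weights
    w1 = rng.standard_normal((3, hidden)) * 0.1
    w2 = rng.standard_normal((hidden, k * k * c_in * c_out)) * 0.1
    pad = k // 2
    padded = np.pad(features, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((c_out, out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # location projection: HR pixel (i, j) -> LR pixel (li, lj)
            li, lj = math.floor(i / scale), math.floor(j / scale)
            # relative offset and scale, fed to the weight-prediction network
            v = np.array([i / scale - li, j / scale - lj, 1.0 / scale])
            weights = (np.maximum(v @ w1, 0.0) @ w2).reshape(c_out, c_in, k, k)
            patch = padded[:, li:li + k, lj:lj + k]  # k x k x c_in neighborhood of (li, lj)
            out[:, i, j] = np.tensordot(weights, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out

sr = meta_upscale(np.ones((4, 5, 6)), 1.5)  # non-integer factor: 5x6 -> 7x9
```

The same function, with the same weight-prediction network, serves any scale, which is the property that lets one model cover many factors; the per-pixel prediction also shows why the cost and stability depend on the output size.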

This upscaling method has been used frequently in recent years, particularly in post-upsampling frameworks (“Post-Upsampling SR”). High-level representations extracted from low-level information are used to construct the HR image with meta upscaling in the last layer of the model, making this an end-to-end SR approach.

The comparison of upsampling methods is shown in Table 4; most SR methods use deconvolution or sub-pixel layers for upscaling. However, for multiple scale factors, meta upscaling is used.

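| Method | Strengths | Weaknesses |
|---|---|---|
| Sub-pixel layer | End-to-end learnable; wide receptive field provides contextual information for realistic details | Uneven receptive-field distribution can cause artifacts at the boundaries of complex patterns; block-wise prediction can give unsmooth outputs |
| Deconvolution layer | End-to-end learnable; compatible with vanilla convolution | Uneven overlapping can cause checkerboard-like artifacts |
| Meta upscaling | Arbitrary scaling factors within a single model; outperforms fixed-factor models | May become unstable for large magnifications, as weights are predicted per pixel independently of the image content |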

Deep learning SR networks

Network design is a major research direction in deep learning, and in SR, researchers have explored several network designs in combination with the SR frameworks (as seen in “SR Frameworks”) to build the overall SR network. Some of the fundamental and recent network designs are discussed in this section.

Recursive learning

One of the basic network-based learning strategies is to apply the same module recursively to learn high-level features. Because the same module, with shared weights, is reused at every recursion, this strategy also minimizes the number of parameters, as shown in Fig. 6A.

Figure 6: Deep-learning network structures for super-resolution.

(A) Recursive learning, (B) residual learning, (C) dense connection-based learning, (D) multiscale learning, (E) advanced convolution-based learning, (F) attention-based learning.

One of the most widely used recursive networks is the Deeply-Recursive Convolutional Network (DRCN) (Kim, Lee & Lee, 2016b). By applying a single convolution layer recursively, DRCN reaches a $41 \times 41$ receptive field without requiring additional parameters, which is very large compared with that of the Super-Resolution Convolutional Neural Network (SRCNN) (Thapa et al., 2016). The Deep Recursive Residual Network (DRRN) (Tai, Yang & Liu, 2017) used a ResBlock (He et al., 2016) as the recursive unit for a total of 25 recursions and was reported to perform better than the baseline ResBlock. Building on DRCN, Tai et al. (2017) proposed MemNet, a memory block-based method containing six recursive ResBlocks, while the Cascading Residual Network (CARN) (Ahn, Kang & Sohn, 2018a) also used ResBlocks as recursive units. In this approach, the network shares the weights globally across recursions using an iterative up-and-down sampling scheme. Apart from end-to-end recursions, researchers have also used the Dual-State Recurrent Network (DSRN) (Han et al., 2018), which shares signals between the LR and HR states within the network.

Overall, recursive learning networks can learn complex data representations with fewer parameters, but at the cost of higher computation. Additionally, the long gradient paths created by deep recursion may lead to exploding or vanishing gradients. Thus, recursive learning is often combined with multi-supervision or residual learning to minimize the risk of exploding or vanishing gradients (Kim, Lee & Lee, 2016b; Tai et al., 2017; Tai, Yang & Liu, 2017; Han et al., 2018).
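The parameter saving of recursive learning can be illustrated with a toy example. The sketch below assumes a DRRN-like form in which every recursion adds its output to the block input; a single hypothetical fully connected unit stands in for a convolutional ResBlock, and vectors stand in for feature maps.

```python
import numpy as np

def make_unit(channels, seed=0):
    """One hypothetical residual unit: a fully connected layer standing in for a conv layer."""
    rng = np.random.default_rng(seed)
    return {"w": rng.standard_normal((channels, channels)) * 0.1,
            "b": np.zeros(channels)}

def num_params(unit):
    return sum(p.size for p in unit.values())

def recursive_block(x, unit, recursions):
    """Apply the SAME unit `recursions` times; each step adds its output to the block input."""
    h = x
    for _ in range(recursions):
        h = x + np.maximum(h @ unit["w"] + unit["b"], 0.0)
    return h

unit = make_unit(8)
y = recursive_block(np.ones((2, 8)), unit, recursions=25)
print(num_params(unit))  # 72, whatever the number of recursions
```

Stacking 25 distinct units of the same size would instead need 25 × 72 = 1800 parameters, while the compute per forward pass is the same in both cases, which is the trade-off described above.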

Residual learning

Residual learning had been widely used in the field of SR (Bevilacqua et al., 2012; Timofte, De Smet & Van Gool, 2015; Timofte, De & Van Gool, 2013) even before ResNet (He et al., 2016) was proposed for learning residuals instead of a complete mapping, as shown in Fig. 6B. Overall, there are two approaches: local and global residual learning.

The local residual learning approach mitigates the degradation problem (He et al., 2016) caused by increasing network depth. Local residual learning also reduces training difficulty and improves the learning ability of the network; it is frequently used in the SR field (Protter et al., 2009; Mao, Shen & Bin, 2016; Han et al., 2018; Li et al., 2018).

Global residual learning exploits the correlation between the input and the final output: in image SR, the output HR image is highly correlated with the input LR image, so learning the global residual between the LR and HR images is significant. In global residual learning, the model learns only the residual map that transforms the (upsampled) LR image into the HR image by generating the high-frequency details missing from the LR image. Because most values of this residual are close to zero, global residual learning significantly reduces learning difficulty and model complexity. This method is also frequently used in SR (Kim, Lee & Lee, 2016a; Tai, Yang & Liu, 2017; Tai et al., 2017; Hui, Wang & Gao, 2018).
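The residual target and the final global skip connection can be sketched on a toy image. This example assumes nearest-neighbor upsampling as a simple stand-in for the bicubic interpolation typically applied to the LR input, and uses a smooth synthetic ramp as the HR image; no network is trained, the exact residual plays the role of a perfect prediction.

```python
import numpy as np

def upsample_nearest(lr, scale):
    # stand-in for the bicubic interpolation usually used before global residual learning
    return np.repeat(np.repeat(lr, scale, axis=0), scale, axis=1)

def global_residual_target(lr, hr, scale):
    """What the network would be trained to predict: HR minus the upsampled LR."""
    return hr - upsample_nearest(lr, scale)

def reconstruct(lr, predicted_residual, scale):
    """Final SR output = upsampled LR + predicted residual (the global skip connection)."""
    return upsample_nearest(lr, scale) + predicted_residual

hr = np.add.outer(np.arange(8), np.arange(8)) / 14.0   # smooth toy HR image in [0, 1]
lr = hr[::2, ::2]                                        # toy 2x downsampling
res = global_residual_target(lr, hr, 2)
print(np.abs(hr).mean(), np.abs(res).mean())
```

The mean magnitude of the residual (about 0.071) is much smaller than that of the image itself (0.5), which illustrates why predicting the residual is an easier learning target.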

Overall, both methods use shortcut connections to learn residuals: in global residual learning, the input image is connected directly to the output HR image, whereas local residual learning places shortcut connections between layers at various depths within the network.

Dense connection-based learning

This learning method uses dense blocks, as in DenseNet (Huang et al., 2017), to address SR. In a dense block, each layer takes the feature maps generated by all preceding layers as input and passes its own feature maps to all subsequent layers, leading to $l(l-1)/2$ connections in an $l$-layer dense block. Dense blocks increase feature reusability while alleviating the vanishing-gradient problem. Furthermore, dense connections also keep the model small by using a small growth rate and compressing the channels of the concatenated input features.
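The channel bookkeeping of a dense block can be sketched as follows. Each layer here is a hypothetical 1x1 convolution (written as a matrix product on per-pixel feature vectors) followed by ReLU; the random weights are placeholders for learned ones.

```python
import numpy as np

def dense_block(x, num_layers, growth_rate, seed=0):
    """x: (n, c0) features. Each layer sees the concatenation of ALL earlier outputs
    and contributes `growth_rate` new channels."""
    rng = np.random.default_rng(seed)
    features = [x]
    for _ in range(num_layers):
        inp = np.concatenate(features, axis=-1)
        # hypothetical weights of a 1x1 conv (a plain matrix here) + ReLU
        w = rng.standard_normal((inp.shape[-1], growth_rate)) * 0.1
        features.append(np.maximum(inp @ w, 0.0))
    return np.concatenate(features, axis=-1)

def num_dense_connections(num_layers):
    """Layer-to-layer connections in an l-layer dense block: l(l-1)/2."""
    return num_layers * (num_layers - 1) // 2

out = dense_block(np.ones((4, 16)), num_layers=6, growth_rate=8)
print(out.shape, num_dense_connections(6))  # (4, 64) 15
```

The output width grows linearly as $c_0 + l k$ for growth rate $k$, so a small growth rate (and, in practice, a channel-compressing layer after concatenation) keeps the block compact.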

Dense connections are used in SR to connect low-level and high-level feature maps for reconstructing a high-quality, fine-detailed HR image, as shown in Fig. 6C. SRDenseNet (Tong et al., 2017) proposed a 69-layer network containing dense connections both within and among the dense blocks: the feature maps of all prior blocks were used as inputs to each subsequent block. RDN (Zhang et al., 2018b), CARN (Ahn, Kang & Sohn, 2018a), MemNet (Tai et al., 2017) and ESRGAN (Wang et al., 2019c) also used layer- or block-level dense connections, while DBPN (Wang et al., 2018b) used dense connections only between the upsampling and downsampling units.

Multi-path learning

In multi-path learning, the features are transferred to multiple paths for different representations, and these representations are later combined to gain improved performance. Scale-specific, local, and global multi-path learnings are the main types.

Super-resolution models use different feature extraction for different scales; Lim et al. (2017) proposed a single network with multi-path learning for multiple scales. The intermediate layers of the model were shared for feature extraction, while scale-specific paths for pre-processing and upsampling were placed at the start and end of the network, respectively. During training, only the paths corresponding to the selected scale are enabled and updated. The proposed multi-scale deep super-resolution (MDSR) method (Lim et al., 2017) also reduces the overall model size because parameters are shared across scales. Like MDSR, a similar multi-path approach is also implemented in ProSR and CARN.

Local multi-path learning is inspired by the inception module (Szegedy et al., 2015) and uses a new block for multi-scale feature extraction, as in MSRN (Li et al., 2018) (shown in Fig. 6D). This block contains convolution layers with $3 \times 3$ and $5 \times 5$ kernels, which extract features simultaneously. The outputs of the two convolution layers are combined and passed through a $1 \times 1$ convolution. Furthermore, a shortcut connects the input and output by element-wise addition. Through this local multi-path learning, the model extracts features at multiple scales more effectively.

Another variant is global multi-path learning, in which features are extracted along multiple paths that can interact with each other. In DSRN (Han et al., 2018), two paths extract low- and high-level information, and features are continuously shared between them for improved learning. In pixel recursive SR (Dahl, Norouzi & Shlens, 2017), a conditioning path extracts global structures, while a prior path captures the serial dependence among the generated pixels. Ren, El-Khamy & Lee (2017) employed a different method, using multiple paths with unbalanced structures whose outputs were combined in the final layer to obtain the SR output.

Advanced convolution-based learning

SR methods rely on the convolution operation, and various studies have modified it for better performance. Group convolution (Fig. 6E) has been shown to reduce the total number of parameters at the cost of a small loss in performance (Hui, Wang & Gao, 2018; Johnson, Alahi & Li, 2016); CARN-M (Ahn, Kang & Sohn, 2018a) and IDN (Hui, Wang & Gao, 2018) use group convolution instead of vanilla convolution. Dilated convolution exploits contextual information to generate realistic-looking SR images (Zhang et al., 2017); there, it was used to double the receptive field, giving better results.

Another type of convolution is depthwise separable convolution (Howard et al., 2009), which significantly reduces the total number of parameters but also reduces overall performance.
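The parameter savings of group and depthwise separable convolution follow from simple counting. The sketch below counts weights only (biases ignored) for an illustrative $3 \times 3$ layer with 64 input and 64 output channels; these sizes are assumptions chosen for the example.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weights of a k x k convolution with `groups` groups (biases ignored)."""
    assert c_in % groups == 0 and c_out % groups == 0
    return (c_in // groups) * c_out * k * k

def depthwise_separable_params(c_in, c_out, k):
    """A k x k depthwise convolution followed by a 1 x 1 pointwise convolution."""
    return conv_params(c_in, c_in, k, groups=c_in) + conv_params(c_in, c_out, 1)

for name, n in [
    ("vanilla 3x3", conv_params(64, 64, 3)),
    ("group 3x3, 4 groups", conv_params(64, 64, 3, groups=4)),
    ("depthwise separable 3x3", depthwise_separable_params(64, 64, 3)),
]:
    print(f"{name}: {n} weights")
```

This gives 36,864, 9,216 and 4,672 weights, respectively: using $g$ groups divides the count by $g$, and the depthwise separable version is roughly eight times smaller here, at the price of restricting how channels interact.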

Attention-based learning

In deep learning, attention means giving certain factors more weight than others when processing the data; two types of attention mechanisms used in SR are discussed here. In channel attention, a block is added to the model in which global average pooling (GAP) squeezes each input channel into a single constant; two fully connected layers then process these constants to produce channel-wise scaling factors that rescale the channels (Hu, Shen & Sun, 2018), as shown in Fig. 6F. This technique was incorporated into SR in RCAN (Zhang et al., 2018a), improving performance. Instead of GAP, Dai et al. (2019) used a second-order channel attention (SOCA) module, which uses second-order feature statistics to learn more discriminative representations through channel attention.
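A channel attention block of the squeeze-and-excitation type can be sketched in NumPy as below. The sketch assumes a $(c, h, w)$ layout and omits biases; `w_down` (reducing $c$ channels to $c/r$) and `w_up` are hypothetical weight matrices that would be learned in a model such as RCAN.

```python
import numpy as np

def channel_attention(x, w_down, w_up):
    """x: (c, h, w); w_down: (c // r, c); w_up: (c, c // r)."""
    z = x.mean(axis=(1, 2))                 # squeeze: global average pooling -> (c,)
    s = np.maximum(w_down @ z, 0.0)         # FC + ReLU: c -> c // r
    a = 1.0 / (1.0 + np.exp(-(w_up @ s)))   # FC + sigmoid: c // r -> c, values in (0, 1)
    return x * a[:, None, None]             # rescale each channel by its attention weight

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 4, 4))
y = channel_attention(x, rng.standard_normal((2, 8)), rng.standard_normal((8, 2)))
```

With all-zero weights every channel receives the same factor sigmoid(0) = 0.5; learned weights instead emphasize informative channels and suppress others.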

In SR, most models use local receptive fields to generate SR pixels, but in some cases, textures or patches that are far apart are needed to generate accurate local patches. Zhang et al. (2019b) used local and non-local attention blocks to extract local and non-local representations between pixels. Similarly, Dai et al. (2019) incorporated a non-local attention technique to capture contextual information. Chen et al. (2021) proposed an SR reconstruction method that applies an attention mechanism to feature maps to facilitate image reconstruction, while Yang & Qi (2021) proposed a channel attention and spatial graph convolutional network (CASGCN) for more robust feature extraction and feature-correlation modeling.

Wavelet transform-based learning

The wavelet transform (WT) (Daubechies & Bates, 1993; Griffel & Daubechies, 1995) efficiently represents textures in high-frequency sub-bands and global structural information in low-frequency sub-bands. In SR, WT has been used to generate the residuals of the HR sub-bands from the sub-bands of the interpolated LR image: the LR image is decomposed using the WT, and the inverse WT reconstructs the HR image. Other examples of WT-based SR are the wavelet-based residual attention network (WRAN) (Xue et al., 2020), the multi-level wavelet CNN (MWCNN) (Liu et al., 2018b), and the method of Ma et al. (2019); these approaches combine WT with other learning methods to improve overall performance.
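The decomposition into sub-bands and the exact reconstruction by the inverse transform can be illustrated with a one-level 2D wavelet transform. The text does not fix a particular wavelet; this sketch uses the orthonormal Haar wavelet, the simplest choice, purely for illustration.

```python
import numpy as np

def haar_dwt2(x):
    """One-level 2D Haar transform of an (even h, even w) image -> four (h/2, w/2) sub-bands."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    ll = (a + b + c + d) / 2   # low-frequency approximation (global structure)
    lh = (a - b + c - d) / 2   # detail: differences between neighboring columns
    hl = (a + b - c - d) / 2   # detail: differences between neighboring rows
    hh = (a - b - c + d) / 2   # detail: diagonal differences
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Inverse transform: exactly recovers the image from its four sub-bands."""
    h, w = ll.shape
    x = np.zeros((2 * h, 2 * w))
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return x

img = np.random.default_rng(0).standard_normal((8, 8))
bands = haar_dwt2(img)
```

On a flat region the three detail sub-bands are zero, so the texture information is concentrated in the high-frequency sub-bands that a WT-based SR network is trained to predict.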

Region-recursive-based learning

In SR, most methods assume that pixels are generated independently; thus, no attention is paid to the interdependence among the generated pixels. Building on PixelCNN (Van Den Oord et al., 2016), Dahl, Norouzi & Shlens (2017) proposed pixel recursive learning, which performs SR by generating the image pixel by pixel using two networks. Together, the two networks capture pixel dependence and global contextual information. In a mean opinion score evaluation, this pixel recursive method performed well compared with other methods for generating SR face images (Dahl, Norouzi & Shlens, 2017). The attention-based face hallucination method (Cao et al., 2017a) also used a patch-based attention-shifting mechanism to enhance details in local patches.

While region-recursive methods perform marginally better than other methods, the recursive process with long propagation paths greatly increases training difficulty and computational cost.

Other methods

Other SR networks are also used by researchers, such as Desubpixel-based learning (Vu et al., 2019), xUnit-based learning (Kligvasser, Shaham & Michaeli, 2018) and Pyramid Pooling-based learning (Zhao et al., 2017).

To improve computational speed, the desubpixel-based approach, which performs the inverse of the sub-pixel layer, extracts features in a low-dimensional space. By rearranging spatial blocks of the image into separate channels, desubpixel-based learning avoids information loss; after the data representations are learned in the low-dimensional space, the result is upsampled to produce a high-resolution image. This technique is particularly suitable for resource-limited applications such as smartphones.
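The desubpixel rearrangement (often called space-to-depth) can be sketched as the exact inverse of the sub-pixel shuffle. The example assumes a channel-first $(c, h, w)$ layout with $h$ and $w$ divisible by the factor $r$, and the same channel ordering as PyTorch's `PixelShuffle`; the function name is ours.

```python
import numpy as np

def desubpixel(x, r):
    """Rearrange (c, h, w) into (c*r*r, h/r, w/r) without discarding any value."""
    c, h, w = x.shape
    if h % r or w % r:
        raise ValueError("height and width must be divisible by r")
    x = x.reshape(c, h // r, r, w // r, r)   # (c, h', i, w', j)
    x = x.transpose(0, 2, 4, 1, 3)           # (c, i, j, h', w')
    return x.reshape(c * r * r, h // r, w // r)

x = np.arange(16, dtype=float).reshape(1, 4, 4)
y = desubpixel(x, 2)   # four 2x2 channels, each a subsampled phase of the image
```

Every input pixel lands in exactly one output channel, so the operation is lossless while subsequent layers run on a grid with $r^2$ times fewer positions.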

In xUnit learning, a spatial activation function was proposed for learning complicated features and textures. The ReLU operation was replaced by xUnit, which generates weight maps through Gaussian gating and convolution. Using xUnit reduced the model size by 50% without compromising SR performance, at the cost of increased computational demand (Kligvasser, Shaham & Michaeli, 2018).

Learning strategies

Learning strategies also strongly influence the overall performance of an SR algorithm, as the results depend on the strategy selected. In this section, the learning strategies used in recent SR studies are discussed, and some of the critical strategies are examined in detail.

Loss functions

For any deep learning application, the selection of loss functions is critical; in SR, these functions measure the reconstruction error of the HR image, which is used to optimize the model iteratively. Since the basic element of an image is the pixel, early studies employed the pixel-wise L2 loss, but it was found that pixel loss cannot wholly represent reconstruction quality (Ghodrati et al., 2019). Thus, other loss functions, such as content loss (Johnson, Alahi & Li, 2016) and adversarial loss (Ledig et al., 2017), have been widely used in SR to measure the generation error. Various loss functions are explored in this section; the notation follows the previously defined variables except where defined otherwise.

Content Loss. As mentioned previously, perceptual quality is essential in evaluating an SR model, and the content loss was used in SR (Johnson, Alahi & Li, 2016; Dosovitskiy & Brox, 2016) to measure the difference between the generated and ground-truth images using an image classification network N. Let $\phi^{(l)}(I)$ denote the high-level representation of an image $I$ on the $l$-th layer of N. The content loss is defined as the Euclidean distance between the high-level representations of the two images $I$ and $\hat{I}$, where $I$ is the original image and $\hat{I}$ is the generated SR image:

$$\mathcal{L}_{\text{content}}(\hat{I}, I; \phi, l) = \frac{1}{h_l w_l c_l} \sqrt{\sum_{i,j,k} \left( \phi^{(l)}_{i,j,k}(\hat{I}) - \phi^{(l)}_{i,j,k}(I) \right)^2} \tag{15}$$

where $h_l$, $w_l$ and $c_l$ are, respectively, the height, width, and number of channels of the representations on layer $l$.

Content loss transfers knowledge of image features from the image classification network to the SR network. It encourages visual similarity between the original image ($I$) and the generated image ($\hat{I}$) by comparing their content rather than individual pixels. Thus, this loss helps produce perceptually convincing, more realistic-looking images in SR, as in Ledig et al. (2017), Wang et al. (2018b), Sajjadi, Scholkopf & Hirsch (2017), Wang et al. (2019c), Johnson, Alahi & Li (2016) and Bulat & Tzimiropoulos (2018), where the pre-trained CNNs used were ResNet (He et al., 2016) and VGG (Simonyan & Zisserman, 2015).
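The content loss of Eq. (15) can be sketched as follows. A real implementation would take the feature maps from a layer of a pre-trained network such as VGG; here a hypothetical `toy_features` function (a fixed random 1x1 projection followed by ReLU) stands in for that layer, so the numbers are illustrative only.

```python
import numpy as np

def toy_features(img, proj):
    """Hypothetical stand-in for layer l of a pre-trained network N:
    a 1x1 projection followed by ReLU. img: (h, w, 3) -> (h, w, c_l)."""
    return np.maximum(img @ proj, 0.0)

def content_loss(feat_sr, feat_hr):
    """Eq. (15): Euclidean distance between feature maps, normalized by h_l * w_l * c_l."""
    h, w, c = feat_hr.shape
    return np.sqrt(np.sum((feat_sr - feat_hr) ** 2)) / (h * w * c)

rng = np.random.default_rng(0)
proj = rng.standard_normal((3, 16))          # hypothetical layer weights
hr = rng.random((8, 8, 3))
sr = hr + 0.05 * rng.standard_normal(hr.shape)
loss = content_loss(toy_features(sr, proj), toy_features(hr, proj))
```

The comparison happens entirely in feature space, so the loss depends on which layer (and network) supplies the representations, not on raw pixel values.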

Adversarial Loss. Since the development of GANs (Goodfellow et al., 2014), they have received increasing attention owing to their ability to learn data distributions in a self-supervised manner. A GAN combines two networks that perform generation and discrimination: a generator produces the output, and a discriminator evaluates the generator's results. GAN training alternates between two updates: (i) the generator is fixed while the discriminator is trained to discriminate more accurately, and (ii) the discriminator is fixed while the generator is trained to produce outputs that fool it. Through many iterations of this alternating training, the generator learns to produce outputs that conform to the distribution of the real data, until the discriminator can no longer differentiate between real and generated data.

In image SR, the generator network produces an HR image, while a discriminator network evaluates whether the image follows the same distribution as real HR images. This approach was first introduced in SR as SRGAN (Ledig et al., 2017), where the adversarial losses were represented by:

$$\mathcal{L}^{\text{ce}}_{G}(\hat{I}; D) = -\log D(\hat{I}) \tag{16}$$

$$\mathcal{L}^{\text{ce}}_{D}(\hat{I}, I_s; D) = -\log D(I_s) - \log\left(1 - D(\hat{I})\right) \tag{17}$$

where $\mathcal{L}^{\text{ce}}_{G}$ is the adversarial loss function of the generator in the SR model, while $\mathcal{L}^{\text{ce}}_{D}$ is the adversarial loss function of the discriminator $D$, which is a binary classifier. In (17), $I_s$ denotes a randomly sampled ground-truth image. The same loss functions were reported by Sajjadi, Scholkopf & Hirsch (2017).

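Instead of the binary cross-entropy, Yuan et al. (2018) and Wang et al. (2018a) used a mean-square-error (least-squares) formulation, which improved training and gave better results than Ledig et al. (2017). These loss functions are given in (18) and (19):

$$\mathcal{L}^{\text{ls}}_{G}(\hat{I}; D) = \left(D(\hat{I}) - 1\right)^2 \tag{18}$$

$$\mathcal{L}^{\text{ls}}_{D}(\hat{I}, I_s; D) = D(\hat{I})^2 + \left(D(I_s) - 1\right)^2 \tag{19}$$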
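Given discriminator outputs for real and generated images, the cross-entropy losses (16) and (17) and the least-squares losses (18) and (19) reduce to a few array operations. This sketch assumes $D$ outputs probabilities in (0, 1) and averages over a batch; the small `EPS` guarding the logarithms is our assumption.

```python
import numpy as np

EPS = 1e-12  # guards log(0); an assumption of this sketch

def gan_ce_losses(d_real, d_fake):
    """Cross-entropy generator/discriminator losses, Eqs. (16) and (17), batch-averaged."""
    g = np.mean(-np.log(d_fake + EPS))
    d = np.mean(-np.log(d_real + EPS) - np.log(1.0 - d_fake + EPS))
    return g, d

def gan_ls_losses(d_real, d_fake):
    """Least-squares generator/discriminator losses, Eqs. (18) and (19), batch-averaged."""
    g = np.mean((d_fake - 1.0) ** 2)
    d = np.mean(d_fake ** 2 + (d_real - 1.0) ** 2)
    return g, d

# a maximally confused discriminator outputs 0.5 for every image
print(gan_ce_losses(np.array([0.5]), np.array([0.5])))  # approx. (log 2, 2 log 2)
print(gan_ls_losses(np.array([0.5]), np.array([0.5])))  # (0.25, 0.5)
```

In training, the generator loss is minimized with the discriminator fixed and vice versa, following the alternating scheme described above.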

In contrast to the loss functions in (18) and (19), Park et al. (2018) showed that, in some cases, a pixel-level discriminator causes the generator to produce meaningless high-frequency noise; they therefore attached an additional feature-level discriminator that operates on high-level representations extracted by a pre-trained CNN. Using the two discriminator networks, Park et al. (2018) captured more meaningful attributes of real HR images.

Various opinion-scoring systems have been used extensively to assess SR models trained with adversarial loss. Although these models attain lower PSNR than pixel-loss-based SR models, they score very highly on perceptual quality measures such as opinion scores (Ledig et al., 2017; Sajjadi, Scholkopf & Hirsch, 2017). Using the discriminator as a control network for the generator allows GANs to regenerate intricate patterns that are very difficult to learn with ordinary deep learning methods. The main drawback of GANs is their training instability (Arjovsky, Chintala & Bottou, 2017; Gulrajani et al., 2017; Lee et al., 2018a; Miyato et al., 2018).

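Pixel Loss. As the name suggests, this loss function performs a pixel-wise comparison between the reference image $I$ and the generated image $\hat{I}$. There are two main types: the $L_1$ loss, also termed the mean absolute error, and the $L_2$ loss, which is the mean square error (MSE):

$$\mathcal{L}_{L_1}(\hat{I}, I) = \frac{1}{hwc} \sum_{i,j,k} \left| \hat{I}_{i,j,k} - I_{i,j,k} \right| \tag{20}$$

$$\mathcal{L}_{L_2}(\hat{I}, I) = \frac{1}{hwc} \sum_{i,j,k} \left( \hat{I}_{i,j,k} - I_{i,j,k} \right)^2 \tag{21}$$

where $h$, $w$ and $c$ are the height, width and number of channels of the images. The $L_1$ loss can be numerically unstable because it is not differentiable at zero; thus, a smooth variant of it, the Charbonnier loss (Farsiu et al., 2004b; Barron, 2017, 2019; Lai et al., 2017), is also used:

$$\mathcal{L}_{\text{Cha}}(\hat{I}, I) = \frac{1}{hwc} \sum_{i,j,k} \sqrt{\left( \hat{I}_{i,j,k} - I_{i,j,k} \right)^2 + \epsilon^2} \tag{22}$$

Here $\epsilon$ is a small constant that ensures numerical stability.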

The pixel loss ensures that the generated HR image $\hat{I}$ is close to the reference HR image $I$ in pixel values. The $L_2$ loss squares the pixel-value errors and therefore penalizes large differences much more heavily than small ones: it is sensitive to outliers and, being tolerant of small errors, often yields overly smooth results. Therefore, the $L_1$ loss is widely used instead of the $L_2$ loss (Zhao et al., 2016; Lim et al., 2017; Ahn, Kang & Sohn, 2018a). Furthermore, PSNR is defined through the pixel-wise mean square error, so minimizing a pixel loss (directly so for the $L_2$ loss) tends to increase PSNR, which is why pixel losses are often used to maximize PSNR. However, as mentioned earlier, the pixel loss does not account for perceptual quality or textures; SR networks trained with it may therefore lack high-frequency details, producing smooth but unrealistic HR images (Wang, Simoncelli & Bovik, 2003; Wang et al., 2004).
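The pixel losses in (20) to (22) and their relation to PSNR can be checked on a tiny example. The sketch assumes images scaled to [0, 1] (so the peak value is 1) and $\epsilon = 10^{-3}$ for the Charbonnier loss; both are assumptions of the example.

```python
import numpy as np

def l1_loss(sr, hr):
    return np.mean(np.abs(sr - hr))                          # Eq. (20)

def l2_loss(sr, hr):
    return np.mean((sr - hr) ** 2)                           # Eq. (21)

def charbonnier_loss(sr, hr, eps=1e-3):
    return np.mean(np.sqrt((sr - hr) ** 2 + eps ** 2))       # Eq. (22)

def psnr(sr, hr, max_val=1.0):
    """PSNR in dB; it depends on the pixel errors only through the MSE (L2 loss)."""
    return 10.0 * np.log10(max_val ** 2 / l2_loss(sr, hr))

hr = np.zeros(4)
spread = np.array([0.1, 0.1, 0.1, 0.1])   # small errors everywhere
outlier = np.array([0.4, 0.0, 0.0, 0.0])  # one large error
for name, sr in [("spread", spread), ("outlier", outlier)]:
    print(name, l1_loss(sr, hr), l2_loss(sr, hr), psnr(sr, hr))
```

Both error patterns have the same $L_1$ loss (0.1), but the $L_2$ loss is four times larger for the single outlier (0.04 versus 0.01), so PSNR drops from 20 dB to about 14 dB. The Charbonnier loss stays within about $10^{-4}$ of the $L_1$ loss here, because the errors are much larger than $\epsilon$.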

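Style Reconstruction Loss. Ideally, the reconstructed HR image should have a style (colors, textures, gradients, contrast) comparable to that of the actual HR image. Following Sajjadi, Scholkopf & Hirsch (2017) and Gatys, Ecker & Bethge (2015), a style reconstruction loss has therefore been used in SR to match the texture details of the generated image to those of the reference image. Texture is represented by the correlations between the feature maps of different channels, given by the Gram matrix (Levy & Goldberg, 2014) $G^{(l)} \in \mathbb{R}^{c_l \times c_l}$. Here $G^{(l)}_{ij}$ is the dot product of the vectorized feature maps $i$ and $j$ in layer $l$:

$$G^{(l)}_{ij}(I) = \operatorname{vec}\left(\phi^{(l)}_{i}(I)\right)\cdot\operatorname{vec}\left(\phi^{(l)}_{j}(I)\right) \tag{23}$$

where $\operatorname{vec}(\cdot)$ is the vectorization operation, $\phi^{(l)}_{i}(I)$ denotes the $i$-th channel of the feature maps of image $I$ in layer $l$ of a feature extractor $\phi$, and $c_l$ is the number of channels in that layer. The texture loss is then given by:

$$\mathcal{L}_{\text{texture}}(\hat{I}, I; \phi, l) = \frac{1}{c_{l}^{2}}\sqrt{\sum_{i,j}\left(G^{(l)}_{ij}(\hat{I}) - G^{(l)}_{ij}(I)\right)^{2}} \tag{24}$$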

Using the texture loss function in (24), EnhanceNet (Sajjadi, Scholkopf & Hirsch, 2017) reported more realistic results that look visually similar to the reference HR image. Although SR with an optimized texture loss generates more realistic-looking images, selecting the patch size over which textures are matched is still an open research question. Small patches lead to artifacts in textured regions. Large patches generate artifacts across the whole image, because the texture statistics are averaged over regions with different textures.
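The Gram matrix of Eq. (23) and the texture loss of Eq. (24) can be sketched as follows. The feature maps are passed in directly as a list of channels, each a 2-D list. In practice they would come from layer l of a pretrained network φ, which is not modeled here. The example also shows that the Gram matrix discards spatial arrangement: rearranging the pixels identically in every channel leaves it unchanged.

```python
import math


def vectorize(channel):
    """vec(.): flatten one H x W feature map (nested lists) into a vector."""
    return [v for row in channel for v in row]


def gram_matrix(features):
    """Eq. (23): G[i][j] = vec(phi_i) . vec(phi_j) for the feature maps of one layer."""
    vecs = [vectorize(ch) for ch in features]
    return [[sum(a * b for a, b in zip(vi, vj)) for vj in vecs] for vi in vecs]


def texture_loss(feat_generated, feat_reference):
    """Eq. (24): Frobenius distance between Gram matrices, scaled by 1 / c_l^2."""
    g_gen = gram_matrix(feat_generated)
    g_ref = gram_matrix(feat_reference)
    c = len(g_gen)  # number of channels c_l in the layer
    sq = sum((g_gen[i][j] - g_ref[i][j]) ** 2 for i in range(c) for j in range(c))
    return math.sqrt(sq) / c ** 2


# Two-channel feature maps (hypothetical values standing in for phi^(l)(I)).
feats = [[[1, 0], [0, 1]], [[0, 1], [1, 0]]]
# Same pixels with rows swapped in every channel: the layout changes, the Gram matrix does not.
feats_rearranged = [ch[::-1] for ch in feats]
print(gram_matrix(feats))                     # [[2, 0], [0, 2]]
print(texture_loss(feats, feats_rearranged))  # 0.0
```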

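Total Variation Loss. The total variation loss (Rudin, Osher & Fatemi, 1992) measures the differences between each pixel of the generated image and its neighboring pixels. It is defined as:

$$\mathcal{L}_{\text{TV}}(\hat{I}) = \frac{1}{hwc}\sum_{i,j,k}\sqrt{\left(\hat{I}_{i,j+1,k} - \hat{I}_{i,j,k}\right)^{2} + \left(\hat{I}_{i+1,j,k} - \hat{I}_{i,j,k}\right)^{2}} \tag{25}$$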

Total variation loss was used in Ledig et al. (2017) and Yuan et al. (2018) to suppress noise and impose spatial smoothness on the generated image.
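A sketch of the isotropic total variation follows. Each pixel contributes the magnitude of its differences to the right and bottom neighbors, and the sum is normalized by the number of pixels. It assumes a single-channel image stored as nested lists, and skipping pixels on the last row and column (which lack one of the two neighbors) is an assumption about boundary handling.

```python
import math


def tv_loss(img):
    """Isotropic total variation of a single-channel image, normalized by h * w."""
    h, w = len(img), len(img[0])
    total = 0.0
    for i in range(h - 1):          # last row skipped: no bottom neighbor
        for j in range(w - 1):      # last column skipped: no right neighbor
            dx = img[i][j + 1] - img[i][j]
            dy = img[i + 1][j] - img[i][j]
            total += math.sqrt(dx * dx + dy * dy)
    return total / (h * w)


flat = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
noisy = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
print(tv_loss(flat), tv_loss(noisy) > tv_loss(flat))  # 0.0 True
```

A noisy image yields a higher value than a flat one. Adding this term to the loss therefore pushes the generator toward spatially smooth outputs.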

Cycle Consistency Loss. Inspired by CycleGAN (Zhu et al., 2017a), Yuan et al. (2018) presented an image SR method that uses a cycle consistency loss. Another network maps the generated HR image back to a new LR image, which is compared with the input LR image to enforce cycle consistency.
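A minimal sketch of the cycle consistency idea follows. In the method described above, both mappings are networks. Here they are replaced by hypothetical fixed stand-ins: nearest-neighbor replication for the LR-to-HR generator and average pooling for the HR-to-LR network. Measuring the distance with the mean squared error is also an assumption of this sketch.

```python
def sr_stand_in(lr, s=2):
    """Hypothetical stand-in for the LR->HR generator: nearest-neighbor replication."""
    return [[lr[i // s][j // s] for j in range(len(lr[0]) * s)] for i in range(len(lr) * s)]


def down_stand_in(hr, s=2):
    """Hypothetical stand-in for the HR->LR network: s x s average pooling."""
    return [[sum(hr[i * s + a][j * s + b] for a in range(s) for b in range(s)) / (s * s)
             for j in range(len(hr[0]) // s)]
            for i in range(len(hr) // s)]


def cycle_consistency_loss(lr, sr_net, down_net):
    """Map LR -> HR -> LR and return the mean squared error to the input LR image."""
    lr_cycled = down_net(sr_net(lr))
    sq = [(a - b) ** 2 for row_a, row_b in zip(lr_cycled, lr) for a, b in zip(row_a, row_b)]
    return sum(sq) / len(sq)


lr = [[1.0, 2.0], [3.0, 4.0]]
print(cycle_consistency_loss(lr, sr_stand_in, down_stand_in))  # 0.0
```

A generator that shifted every intensity would no longer map back onto the input, and the loss would grow accordingly.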

In practice, SR methods combine several loss functions as a weighted combination to control different aspects of the generation process, as in Kim, Lee & Lee (2016a), Wang et al. (2018b), Sajjadi, Scholkopf & Hirsch (2017) and Lai et al. (2017). Selecting appropriate weights for the loss functions is itself another learning problem, because the results of image SR vary significantly with these weights.
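The weighted combination can be sketched as a small helper that sums named loss terms. The loss names, values and weights below are hypothetical and chosen only for illustration; they are not the settings of any cited method.

```python
def combined_loss(losses, weights):
    """Weighted sum of named loss terms; every term must have a weight."""
    missing = set(losses) - set(weights)
    if missing:
        raise KeyError(f"no weight given for: {sorted(missing)}")
    return sum(weights[name] * value for name, value in losses.items())


# Hypothetical loss values and weights, for illustration only.
losses = {"pixel": 0.02, "texture": 0.5, "adversarial": 3.0}
weights = {"pixel": 1.0, "texture": 0.1, "adversarial": 0.001}
total = combined_loss(losses, weights)
```

If the weights are normalized to sum to one, the same expression becomes a weighted average. Because each weight sets how strongly its term shapes the gradients, tuning these weights changes the output noticeably.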

Curriculum learning

In curriculum learning (Bengio et al., 2009), training starts with easy tasks and gradually moves to more difficult ones, e.g., from simple images with little noise to complex images. SR is often performed under adverse conditions, such as large scaling factors or heavy degradation, so curriculum learning in SR is mainly applied to control the learning difficulty and network size. To reduce training difficulty, SR with a small scaling factor is learned first. Curriculum learning-based SR starts with 2× upsampling, and larger scaling factors (4×, 8×, and so on) are then generated using the outputs of the previously trained networks. ProSR (Wang et al., 2018a) takes the upsampled output of the previous level and blends it linearly with the next level while that level is trained. ADRSR (Bei et al., 2018) instead concatenates the HR outputs of the previous levels and adds a further convolution layer. In CARN (Ahn, Kang & Sohn, 2018b), the previously generated image is entirely replaced by the image generated at the next level, updating the HR image sequentially.

Another alternative is to divide the SR problem into N sub-problems and solve them gradually. In Park, Kim & Chun (2018), the upsampling problem was divided into three sub-problems (i.e., 1× to 2×, 2× to 4×, and 4× to 8×), and three separate networks were used to solve them. Each subsequent level was then fine-tuned in combination with the previous reconstruction. Li et al. (2019b) applied the same concept to degradation rather than scale, training the network from mild to severe image degradations by gradually increasing the noise in the LR input image. Because curriculum learning reduces the training difficulty, it also reduces the total training time.
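A scale curriculum of this kind can be sketched as a simple training schedule. The sketch assumes a curriculum of 2×, 4× and 8×, a fixed number of epochs per stage, and a linear fade-in in the spirit of ProSR. During the fade-in, the new level's output is blended with the upsampled output of the previous level using a weight α that grows from 1/`fade_epochs` to 1. All names and numbers are hypothetical.

```python
def curriculum_schedule(scales, epochs_per_stage, fade_epochs):
    """Return one (scale, alpha) pair per epoch, easiest (smallest) scale first.

    Each new scale after the first is faded in linearly over `fade_epochs` epochs.
    """
    schedule = []
    for stage, scale in enumerate(sorted(scales)):
        for epoch in range(epochs_per_stage):
            if stage == 0 or epoch >= fade_epochs:
                alpha = 1.0
            else:
                alpha = (epoch + 1) / fade_epochs
            schedule.append((scale, alpha))
    return schedule


def blend(new_level, prev_level_upsampled, alpha):
    """Mix the new level's output with the upsampled previous-level output."""
    return [[alpha * n + (1 - alpha) * p for n, p in zip(rn, rp)]
            for rn, rp in zip(new_level, prev_level_upsampled)]


for scale, alpha in curriculum_schedule([2, 4, 8], epochs_per_stage=4, fade_epochs=2):
    pass  # train the level for `scale`, blending its output with weight `alpha`
```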

Batch normalization

Batch normalization (BN) was proposed by Ioffe & Szegedy (2015) to stabilize and accelerate the training of deep CNNs by reducing the internal covariate shift of the network. Each mini-batch is normalized, and two additional learnable parameters per channel are used to preserve the representation ability. Because BN normalizes the intermediate feature maps, it alleviates the vanishing gradient problem and allows higher learning rates. This technique is widely used in SR models such as Ledig et al. (2017), Zhang, Zuo & Zhang (2018), Tai, Yang & Liu (2017), Tai et al. (2017), Sønderby et al. (2017) and Liu et al. (2018b). In contrast, Lim et al. (2017) argued that BN-based networks lose the scale information of the images and therefore lack flexibility. They removed BN and used the memory saved to design a larger model that outperformed the BN-based network. Other studies (Wang et al., 2019c; Wang et al., 2018a; Chen et al., 2018a) also removed BN and achieved marginally better performance.
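The normalization step of BN can be sketched in training mode as follows. For each channel, the mean and variance are computed over all samples and spatial positions in the mini-batch. The two learnable per-channel parameters, γ (scale) and β (shift), then restore representation ability. Running statistics for inference are omitted, and the nested-list layout `batch[n][c]` (a flattened feature map) is an assumption of this sketch.

```python
import math


def batch_norm(batch, gamma, beta, eps=1e-5):
    """Training-mode batch normalization.

    batch[n][c] is the flattened feature map of channel c for sample n.
    Each channel is normalized with statistics over the whole mini-batch,
    then scaled by gamma[c] and shifted by beta[c].
    """
    num_channels = len(batch[0])
    out = [[None] * num_channels for _ in batch]
    for c in range(num_channels):
        values = [v for sample in batch for v in sample[c]]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        scale = gamma[c] / math.sqrt(var + eps)
        for n, sample in enumerate(batch):
            out[n][c] = [scale * (v - mean) + beta[c] for v in sample[c]]
    return out


# Two samples, one channel, two positions each.
normalized = batch_norm([[[1.0, 3.0]], [[5.0, 7.0]]], gamma=[1.0], beta=[0.0])
```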

Multi-supervision

Multi-supervision refers to using multiple supervision signals within the same model to improve gradient propagation and avoid the exploding/vanishing gradient problem. In Kim, Lee & Lee (2016b), multi-supervision is incorporated within the recursive units to address these gradient problems. In SR, multi-supervision is typically implemented by adding extra terms to the loss function (e.g., losses on intermediate outputs). This provides shorter back-propagation paths and reduces the training difficulty of the model.
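One common way to write such a loss, in the spirit of the recursive supervision of Kim, Lee & Lee (2016b), is to average the losses of the intermediate predictions and mix the result with the loss of the final prediction. The mixing weight `alpha` and the helper names below are assumptions of this sketch, not values from the cited work. Images are flattened to plain lists for brevity.

```python
def mse(pred, target):
    """Mean squared error between two flattened images."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def multi_supervised_loss(intermediate_preds, final_pred, target, loss_fn=mse, alpha=0.5):
    """Mix the mean loss of intermediate predictions with the final-prediction loss.

    Each intermediate output (e.g., one per recursion) receives its own
    supervision signal, giving gradients a shorter path to early layers.
    """
    aux = sum(loss_fn(p, target) for p in intermediate_preds) / len(intermediate_preds)
    return alpha * aux + (1 - alpha) * loss_fn(final_pred, target)
```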

SR frameworks

Because SR is an ill-posed problem, the way upsampling is performed is critical to the performance of an SR method. There are several SR frameworks, depending on learning strategies, upsampling methods and network types. Four of them are discussed in detail here, with particular attention to where upsampling takes place within the framework, as shown in Figs. 7–10.

Figure 7: Pre-upsampling-based super-resolution network pipeline.

Figure 8: Post-upsampling-based super-resolution network pipeline.

Figure 9: Iterative up-and-down sampling-based super-resolution network pipeline.

Figure 10: Progressive sampling-based super-resolution network pipeline.

Pre-upsampling SR

In this framework, the mapping from an LR image to an HR image is learned by first upsampling the LR image and then extracting representations with a stack of convolution layers in a deep neural network. Dong et al. (2014, 2016) introduced this pre-upsampling framework with SRCNN, as shown in Fig. 7, which learns an end-to-end LR-to-HR mapping with CNNs. The LR image is first upsampled to the target size using one of the classical methods discussed in “Conventional Methods of Super-Resolution” (e.g., bicubic interpolation). Deep CNNs then learn to map this coarse image to the HR image.

Since the pre-upsampling step already changes the resolution, the network only needs to refine the result with CNNs, which reduces the learning difficulty. Moreover, the classical upsampling step can handle arbitrary image sizes and scaling factors. These models can therefore refine inputs of any size and achieve performance similar to single-scale SR (Kim, Lee & Lee, 2016a), which uses inputs of specific scales. In recent years, many application-oriented studies have used this framework (Kim, Lee & Lee, 2016b; Shocher, Cohen & Irani, 2018; Tai, Yang & Liu, 2017; Tai et al., 2017), and these models differ mainly in the deep learning layers employed after the upsampling. The main drawback of this framework is its reliance on a predefined classical upsampling method. This often introduces blur and amplifies noise in the upsampled image, which later degrades the quality of the final HR image. Furthermore, the image dimensions are increased at the very start, so all subsequent convolutions operate at high resolution. The computational cost and memory requirements of this framework are therefore higher (Shi et al., 2016).
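The cost argument can be quantified with simple arithmetic. A stride-1 convolution with a k × k kernel, C_in input channels and C_out output channels applied to an H × W map needs H·W·k²·C_in·C_out multiply-accumulate operations (MACs). Running the same feature-extraction layers after upsampling by a factor s therefore multiplies their cost by s². The layer count and width below (20 layers, 64 channels, 3 × 3 kernels) are hypothetical, and the cost of the upsampling layer itself is ignored.

```python
def conv_macs(height, width, in_ch, out_ch, kernel):
    """Multiply-accumulate operations of one stride-1, same-padded convolution."""
    return height * width * in_ch * out_ch * kernel * kernel


def body_macs(lr_h, lr_w, scale, layers, channels, kernel, pre_upsample):
    """MACs of a stack of identical conv layers run at HR (pre-) or LR (post-upsampling) size."""
    h, w = (lr_h * scale, lr_w * scale) if pre_upsample else (lr_h, lr_w)
    return layers * conv_macs(h, w, channels, channels, kernel)


# Hypothetical body: 20 layers, 64 channels, 3 x 3 kernels, 64 x 64 LR input, 4x SR.
post = body_macs(64, 64, 4, 20, 64, 3, pre_upsample=False)
pre = body_macs(64, 64, 4, 20, 64, 3, pre_upsample=True)
print(post, pre, pre // post)  # 3019898880 48318382080 16
```

In this hypothetical configuration, the post-upsampling body needs about 3.0 × 10^9 MACs. The pre-upsampling body needs 16 times as many, about 4.8 × 10^10.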

Post-upsampling SR

To reduce memory requirements and increase computational efficiency, the post-upsampling framework learns the mapping in the low-dimensional LR space and upsamples only at the end of the network, using a learnable upsampling layer. This concept was first used in SR by Shi et al. (2016) and Dong, Loy & Tang (2016), and the network diagram is shown in Fig. 8.

Because most of the computation is carried out in the low-dimensional space, this framework reduces computational cost and model complexity. It has therefore been widely used in SR (Ledig et al., 2017; Lim et al., 2017; Tong et al., 2017; Han et al., 2018).
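In Shi et al. (2016), the learnable upsampling at the end of the network is a sub-pixel convolution. A convolution in LR space outputs r² channels, which are then rearranged into a single image r times larger in each dimension. The sketch below shows only this rearrangement step, often called pixel shuffle, for one output channel, and omits the preceding convolution. The channel ordering follows the convention of PyTorch's `torch.nn.PixelShuffle`.

```python
def pixel_shuffle(channels, r):
    """Rearrange r*r low-resolution channels (each H x W) into one (r*H) x (r*W) image.

    out[y][x] = channels[(y % r) * r + (x % r)][y // r][x // r]
    """
    if len(channels) != r * r:
        raise ValueError("expected r * r input channels")
    h, w = len(channels[0]), len(channels[0][0])
    return [[channels[(y % r) * r + (x % r)][y // r][x // r] for x in range(w * r)]
            for y in range(h * r)]


# Four 1 x 1 channels become one 2 x 2 image.
print(pixel_shuffle([[[0]], [[1]], [[2]], [[3]]], 2))  # [[0, 1], [2, 3]]
```

All the learned computation happens before this step, at LR resolution. The rearrangement itself has no parameters and costs only memory movement.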

Iterative up-and-down sampling SR

Since the LR–HR mapping is an ill-posed problem, iterative back-projection (Irani & Peleg, 1991), which exploits the relationship within the LR–HR image pair, has been used in SR to learn this mapping efficiently (Timofte, Rothe & Van Gool, 2016). The corresponding network is called iterative up-and-down sampling SR, as shown in Fig. 9. This framework refines the image through iterative back-projection, i.e., repeatedly computing the reconstruction error and refining the estimate based on it. The DBPN method proposed in Haris, Shakhnarovich & Ukita (2018) used this concept to perform alternating upsampling and downsampling, and the final image was constructed from the intermediate HR reconstructions.

Similarly, SRFBN (Li et al., 2019b) used this technique with densely connected layers for image SR, while RBPN (Haris, Shakhnarovich & Ukita, 2019) used recurrent back-projection with iterative up-and-down sampling for video SR. This framework has shown significant improvement over the other frameworks. However, the concept was introduced only recently, so the back-projection modules and how best to use them require further exploration.
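The iterative back-projection idea behind this framework (Irani & Peleg, 1991) can be illustrated on a 1-D signal. At each iteration, the current HR estimate is downsampled, the residual with respect to the observed LR signal is computed, and the upsampled residual is added back. DBPN and RBPN replace these fixed operators with learned up- and down-sampling layers. Here, average pooling and nearest-neighbor replication are hypothetical stand-ins, and `step` is an assumed relaxation factor.

```python
def downsample(x, s):
    """Hypothetical degradation model: average pooling by factor s."""
    return [sum(x[i * s:(i + 1) * s]) / s for i in range(len(x) // s)]


def upsample(y, s):
    """Hypothetical back-projection kernel: nearest-neighbor replication."""
    return [v for v in y for _ in range(s)]


def iterative_back_projection(lr, hr_init, s, iterations=10, step=1.0):
    """Refine an HR estimate until its downsampled version matches the LR observation."""
    hr = list(hr_init)
    for _ in range(iterations):
        residual = [a - b for a, b in zip(lr, downsample(hr, s))]     # reconstruction error
        hr = [h + step * e for h, e in zip(hr, upsample(residual, s))]  # back-project it
    return hr


print(iterative_back_projection([1.0, 3.0], [0.0, 1.0, 2.0, 4.0], 2))  # [0.5, 1.5, 2.0, 4.0]
```

With `step = 1.0`, this particular operator pair removes the residual in one iteration. Smaller steps converge gradually, which is closer to how the error is reduced over several up-and-down stages.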

Progressive-upsampling SR

The post-upsampling framework upsamples with a single layer at the end of the network, so what it learns is tied to a fixed scaling factor, and supporting multi-scale SR increases its computational cost. Progressive upsampling, which reaches the required scaling factor gradually within the network, was therefore proposed, as seen in Fig. 10. An example of this framework is LapSRN (Lai et al., 2017). It uses cascaded CNN-based modules, each responsible for a single upscaling step, and the output of one module acts as the input to the next. This framework was also used in ProSR (Wang et al., 2018a) and MS-LapSRN (Lai et al., 2017).

This framework eases learning because the SR operation is split into several small upscaling tasks, each of which is more straightforward for CNNs to learn. Furthermore, it has built-in support for multi-scale SR, as the image is reconstructed at several intermediate scaling factors. Training stability and convergence remain the main issues with this framework and require further research.
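A LapSRN-style cascade can be sketched as the repeated application of a 2× stage, keeping every intermediate output. Keeping those outputs is what gives the framework its built-in multi-scale support. The `upsample2x` stage is a hypothetical placeholder (nearest-neighbor replication) for a learned CNN module. Restricting target scales to powers of two is an assumption of this sketch.

```python
def upsample2x(img):
    """Placeholder for one learned 2x module: nearest-neighbor replication."""
    return [[img[i // 2][j // 2] for j in range(2 * len(img[0]))] for i in range(2 * len(img))]


def progressive_sr(lr, target_scale, stage=upsample2x):
    """Apply 2x stages until target_scale; return {scale: output} for every level."""
    if target_scale < 2 or target_scale & (target_scale - 1):
        raise ValueError("target_scale must be a power of two >= 2")
    outputs, current, scale = {}, lr, 1
    while scale < target_scale:
        current = stage(current)  # the output of one stage is the input of the next
        scale *= 2
        outputs[scale] = current
    return outputs


levels = progressive_sr([[1, 2], [3, 4]], 8)
print(sorted(levels))  # [2, 4, 8]
```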

Other improvements

Apart from the four primary considerations in image SR, other factors have a significant effect on the performance of a super-resolution method, and in this section, a few are discussed in light of recent research.

Data augmentation

Data augmentation is a common technique in deep learning, and this concept is used to further enhance the performance of a deep learning model by generating more training data using the same dataset. In the case of image super-resolution, some of the augmentation techniques are flipping, cropping, angular rotation, skew, and color degradation (Timofte, Rothe & Van Gool, 2016; Lai et al., 2017; Lim et al., 2017; Tai, Yang & Liu, 2017; Han et al., 2018). Recoloring the image using channel shuffling in the LR-HR image pair is also used as data augmentation in image SR (Bei et al., 2018).
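A minimal sketch of paired augmentation follows. The same random rotation (a multiple of 90°) and horizontal flip are applied to the LR and HR images so that they stay aligned. An optional channel shuffle, in the spirit of Bei et al. (2018), applies one random permutation of color channels to both images. Images are nested lists, and all function names are illustrative.

```python
import random


def rot90(img):
    """Rotate a 2-D list 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def hflip(img):
    """Mirror a 2-D list horizontally."""
    return [row[::-1] for row in img]


def augment_pair(lr, hr, rng=random):
    """Apply the same random rotation and flip to an LR-HR pair."""
    k = rng.randrange(4)
    flip = rng.random() < 0.5
    for _ in range(k):
        lr, hr = rot90(lr), rot90(hr)
    if flip:
        lr, hr = hflip(lr), hflip(hr)
    return lr, hr


def shuffle_channels(lr_channels, hr_channels, rng=random):
    """Apply one random permutation of color channels to both images."""
    order = list(range(len(lr_channels)))
    rng.shuffle(order)
    return [lr_channels[i] for i in order], [hr_channels[i] for i in order]
```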

Enhanced prediction

Enhanced prediction applies data augmentation at test time. The LR input is transformed with rotation and flipping functions to produce multiple augmented LR images (Timofte, Rothe & Van Gool, 2016). These augmented images are fed to the model for reconstruction, and the reconstructed outputs are inversely transformed. The final HR image is obtained from the mean (Timofte, Rothe & Van Gool, 2016; Wang et al., 2018a) or median (Shocher, Cohen & Irani, 2018) of the corresponding pixel values of the augmented outputs.
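The enhanced-prediction procedure can be sketched as follows, assuming the eight transforms formed by the four 90° rotations with and without a horizontal flip. Each transformed LR input is passed through `model`, and each output is mapped back with the inverse transform. The results are then combined per pixel by mean or median. The nearest-neighbor `upsample2x` model is a hypothetical placeholder for a trained SR network.

```python
import statistics


def rot90(img):
    """Rotate a 2-D list 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def hflip(img):
    """Mirror a 2-D list horizontally."""
    return [row[::-1] for row in img]


def forward_t(img, k, flip):
    """Rotate k times clockwise, then optionally flip."""
    for _ in range(k):
        img = rot90(img)
    return hflip(img) if flip else img


def inverse_t(img, k, flip):
    """Undo forward_t: unflip, then rotate the remaining (4 - k) quarter turns."""
    if flip:
        img = hflip(img)
    for _ in range((4 - k) % 4):
        img = rot90(img)
    return img


def self_ensemble(lr, model, reduce="mean"):
    """Average (or take the median of) the model outputs over all eight transforms."""
    outputs = [inverse_t(model(forward_t(lr, k, flip)), k, flip)
               for k in range(4) for flip in (False, True)]
    combine = statistics.mean if reduce == "mean" else statistics.median
    h, w = len(outputs[0]), len(outputs[0][0])
    return [[combine(o[i][j] for o in outputs) for j in range(w)] for i in range(h)]


def upsample2x(img):
    """Hypothetical placeholder model: 2x nearest-neighbor replication."""
    return [[img[i // 2][j // 2] for j in range(2 * len(img[0]))] for i in range(2 * len(img))]
```

For a model that already commutes with rotations and flips, such as the placeholder above, the ensemble returns the plain prediction. The gain appears only for trained networks whose outputs differ across transforms.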

Network fusion and interpolation

This technique uses multiple models to predict the HR image, and each prediction acts as the input to the following network, as in context-wise network fusion (CNF) (Ren, El-Khamy & Lee, 2017). CNF was based on three individual SRCNNs and achieved performance comparable to state-of-the-art SR models (Ren, El-Khamy & Lee, 2017).

Network interpolation combines a PSNR-oriented model and a GAN-based model to boost image SR performance. The network interpolation strategy (Wang et al., 2019c, 2019b) first trains a PSNR-oriented model and then fine-tunes it with a GAN to obtain a GAN-based model. The corresponding parameters of the two models are then interpolated to obtain the final weights, and the resulting images contain few artifacts and look realistic.
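The interpolation step can be sketched as a per-parameter linear blend, θ = (1 − α)·θ_PSNR + α·θ_GAN, where α ∈ [0, 1] trades PSNR-oriented fidelity (α = 0) against GAN-style realism (α = 1). Representing each model's parameters as a dictionary of flat lists is a simplifying assumption. In a real framework, the same blend would be applied to each parameter tensor of the two models.

```python
def interpolate_params(psnr_params, gan_params, alpha):
    """Per-parameter blend: (1 - alpha) * theta_PSNR + alpha * theta_GAN."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if psnr_params.keys() != gan_params.keys():
        raise ValueError("both models must have the same parameter names")
    return {
        name: [(1 - alpha) * p + alpha * g for p, g in zip(psnr_params[name], gan_params[name])]
        for name in psnr_params
    }


psnr_model = {"conv1.weight": [0.0, 2.0]}
gan_model = {"conv1.weight": [1.0, 4.0]}
print(interpolate_params(psnr_model, gan_model, 0.25))  # {'conv1.weight': [0.25, 2.5]}
```

Because only one scalar changes, a whole range of intermediate models can be produced without retraining. The preferred α can then be chosen by inspecting the outputs.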

Multi-task learning