Abstract

In the 'Beyond Moore's Law' era, with increasing edge intelligence, domain-specific computing embracing unconventional approaches will become increasingly prevalent. At the same time, adopting a variety of nanotechnologies will offer benefits in energy cost, computational speed, footprint, cyber resilience, and processing power. The time is ripe for a roadmap for unconventional computing with nanotechnologies to guide future research, and this collection aims to fill that need. The authors provide a comprehensive roadmap for neuromorphic computing using electron spins, memristive devices, two-dimensional nanomaterials, nanomagnets, and various dynamical systems. They also address other paradigms such as Ising machines, Bayesian inference engines, probabilistic computing with p-bits, processing in memory, quantum memories and algorithms, computing with skyrmions and spin waves, and brain-inspired computing for incremental learning and problem-solving in severely resource-constrained environments. These approaches have advantages over traditional Boolean computing based on the von Neumann architecture. As the computational requirements of artificial intelligence grow 50 times faster than Moore's Law for electronics, more unconventional approaches to computing and signal processing will appear on the horizon, and this roadmap will help identify future needs and challenges. In this very fertile field, experts present some of the dominant and most promising technologies for unconventional computing that are likely to endure for some time to come. Taking a holistic approach, the goal is to provide pathways for consolidating the field and guiding future impactful discoveries.


Original content from this work may be used under the terms of the Creative Commons Attribution 4.0 license. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

Introduction

In the 'Beyond Moore's Law' era, domain-specific computing embracing unconventional approaches will become increasingly prevalent with increasing edge intelligence. Several shortcomings plague current computational models and the underlying technologies: (1) exorbitant energy cost, which drains resources and will ultimately affect our environment; (2) the slowing of Dennard scaling and Moore's law as transistor downscaling approaches its limits, which would impact the economy in the long run; (3) difficulty in reconciling fast switching times with long memory retention: conventional computing systems face a trade-off between memory speed and capacity owing to hierarchical memory structures, which would have adverse consequences for an increasingly data-hungry world; (4) limited parallelism: while conventional computing can achieve some parallelism through multi-core processors, not all computing tasks can be efficiently parallelized, leading to slow data processing; and (5) the von Neumann bottleneck, which limits improvements in the size, weight and power (SWaP) of our current computational technologies.

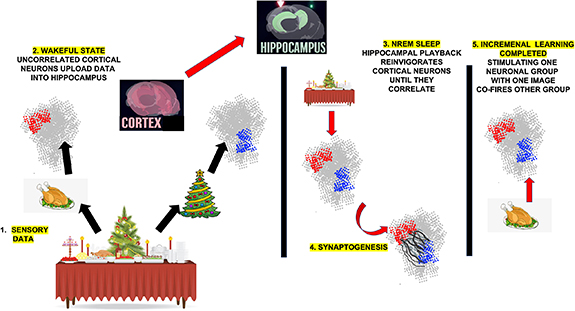

New unconventional computing paradigms have emerged to contend with some or all of these challenges. At the same time, adopting a wide variety of nanotechnologies to implement unconventional computing will yield benefits in energy cost, computational speed, footprint, cyber-resilience, and data-processing prowess. The time is ripe to lay out a roadmap for unconventional computing with nanotechnologies to guide future research, and this collection aims to fulfill that need. The authors provide a holistic roadmap for unconventional computing with electron spins, memristive devices, nanomaterials, mixed-dimensional heterojunctions, nanomagnets, and assorted dynamical systems. They also address the similarities and differences between the paradigms discussed in the manuscript, emphasizing the underlying connections. For example, the manuscript distinguishes between neuromorphic computing and brain-inspired computing. Although both are based on the behavior of a biological brain, the former is usually associated with neural networks, while the latter delves deeper into the architecture of the brain, taking into account regions with different memory and processing functions. The authors also address other paradigms: Ising machines and simulated bifurcation for solving combinatorial optimization and graph-theoretic problems; Bayesian inference engines for computing in the presence of uncertainty; probabilistic computing with p-bits; processing in memory to circumvent the von Neumann bottleneck; quantum memories and algorithms for solving NP problems; computing with skyrmions and spin waves for massive parallelism; and brain-inspired computing for incremental learning and problem-solving in environments with severe resource constraints (see figure 1). All of these approaches have advantages over conventional Boolean computing predicated on the von Neumann architecture.
With the computational need for artificial intelligence growing at a rate 50-fold faster than Moore's law for electronics, more unconventional approaches to computing and signal processing will appear on the horizon, and this roadmap will aid in identifying future needs and challenges.

Figure 1. Illustration of the potential applications of nanotechnology. This roadmap explores hardware implementations across a spectrum of computational paradigms and highlights computational models that are poised to take full advantage of nanotechnology components. We focus on cutting-edge computational models and hardware implementations, describing their current status and challenges, as well as current nanotechnology efforts to address these challenges. Section 1 covers hardware implementation using magnets and nanomagnets for neuromorphic computing. Section 2 covers memristive devices and their potential use for unconventional computing. Section 3 covers the use of nanomaterials for unconventional computing. Section 4 covers probabilistic and quantum computing. Section 5 considers the use of physical systems and physics-inspired models, such as Ising and Boltzmann machines as well as memcomputing, for unconventional computing. Section 6 covers in-memory computing. Section 7 covers brain-inspired computing.


In particular, Ising and Boltzmann machines are similar in their (physics-based) computing approaches, and simulated bifurcation is related to both and can be viewed as a natural progression of the underlying idea. These machines embed the solution to a problem in the ground-state configuration of several interacting devices, which are programmed by biasing each device and adjusting the weights of the synaptic connections between them. They have two significant advantages. First, since they compute by relaxing to the ground state, no external energy needs to be pumped into them to maintain them in an excited state, which makes them highly frugal in their use of energy. Second, they are very forgiving of errors since the computational activity is elicited from the cooperative action of many devices working in unison, and the failure of one or a few devices does not impair circuit functionality. Computing with large-scale dynamical systems, whose natural response mimics the execution of algorithms without any software, shares these characteristics. For example, the combination of dynamical systems with the memcomputing paradigm is a promising direction for solving combinatorial optimization problems, including prime factorization, at scale. Brain-inspired computing can also become more efficient by exploiting this feature.

Probabilistic computing employing p-bits and quantum computing utilizing qubits are also related. The p-bit is sometimes referred to as a poor person's qubit. The difference is that a p-bit is sometimes the bit 0 and sometimes the bit 1 (with tailored probabilities) but never both at once, while a qubit can be in a superposition of 0 and 1 and collapses to one of them, with (tailored) probabilities, when a measurement is made.
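To make the ground-state idea and the p-bit concrete, the following Python sketch anneals a small network of p-bits on an Ising energy function. It assumes the widely used p-bit update m_i = sgn(tanh(βI_i) - r), with r drawn uniformly from (-1, 1), which amounts to Gibbs sampling of the energy E; the linear β schedule, the function names, and the 4-spin antiferromagnetic ring are illustrative choices, not taken from the works surveyed here.

```python
import itertools
import math
import random


def ising_energy(spins, J, h):
    """E(s) = -sum_{i<j} J_ij s_i s_j - sum_i h_i s_i, with spins s_i in {-1, +1}."""
    n = len(spins)
    e = -sum(h[i] * spins[i] for i in range(n))
    for i in range(n):
        for j in range(i + 1, n):
            e -= J[i][j] * spins[i] * spins[j]
    return e


def brute_force_ground_energy(J, h):
    """Exhaustive search over all spin configurations; only feasible for a few spins."""
    return min(ising_energy(list(s), J, h)
               for s in itertools.product((-1, 1), repeat=len(h)))


def pbit_anneal(J, h, sweeps=2000, beta_max=5.0, seed=0):
    """Anneal a network of p-bits and return the lowest-energy state visited.

    Each p-bit is updated as m_i = sgn(tanh(beta * I_i) - r), r ~ U(-1, 1),
    where I_i = h_i + sum_j J_ij m_j is its input. J must be symmetric.
    """
    rng = random.Random(seed)
    n = len(h)
    m = [rng.choice((-1, 1)) for _ in range(n)]
    best, best_e = list(m), ising_energy(m, J, h)
    for sweep in range(sweeps):
        beta = beta_max * (sweep + 1) / sweeps  # linear "inverse temperature" ramp
        for i in range(n):
            I_i = h[i] + sum(J[i][j] * m[j] for j in range(n) if j != i)
            m[i] = 1 if math.tanh(beta * I_i) > rng.uniform(-1.0, 1.0) else -1
        e = ising_energy(m, J, h)
        if e < best_e:
            best, best_e = list(m), e
    return best, best_e


if __name__ == "__main__":
    # Hypothetical 4-spin antiferromagnetic ring: neighbours prefer opposite spins.
    J = [[0, -1, 0, -1],
         [-1, 0, -1, 0],
         [0, -1, 0, -1],
         [-1, 0, -1, 0]]
    h = [0.0, 0.0, 0.0, 0.0]
    state, energy = pbit_anneal(J, h)
    print(state, energy, brute_force_ground_energy(J, h))
```

Because each p-bit needs only its local input I_i, the update maps naturally onto hardware in which the couplings J_ij are implemented as synaptic weights.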
Both probabilistic and quantum computing have been shown to be capable of solving combinatorial optimization problems such as the traveling salesman problem and maximum satisfiability, although a thorough scalability analysis is needed. In the same vein, there is a similarity between computing with skyrmions and spin waves, not just because they are both magnetic entities but because both can be adapted to either analog or digital information processing. Skyrmions may also have a role to play in quantum computing.

Furthermore, memristive systems promise the realization of ultrafast and ultradense memory elements that can mimic a wide range of functions, from matrix multiplication for hardware accelerators to bio-realistic synapses for neural networks. In particular, emerging memtransistors and mixed-dimensional heterojunctions can realize bio-realistic neuronal functions such as input-adaptive learning, continuous learning, heterogeneous plasticity, and complex spiking behavior that can simplify circuit architectures. Nanomaterials enable printed neuromorphic devices to integrate with bio-compatible sensors and flexible electronics applications. While these nascent ideas face different challenges than more mature technologies such as floating gate memories, they also promise alternative paradigms that can circumvent technological hurdles thanks to inherently superior physical properties, form factors, and device metrics. One of their primary advantages is the tunability of their response functions, which establishes fertile ground for realizing devices and circuits that implement cortical architectures and processes.

This article addresses not only paradigms but also the technologies best suited to a given approach. Most algorithms can be executed with different devices and hardware, whether nanomagnets, nano-CMOS, memristors, or something else; in other words, they are generally hardware agnostic. However, some algorithms run best on a particular class of devices, depending on specific device properties such as non-volatility, intrinsic stochasticity, time nonlocality, and memory. Artificial neural networks map well onto memristors and magnetic devices as well as CMOS. Addressing and updating large memristor crossbar arrays is a challenge, and dual-gated memtransistors based on two-dimensional materials can simplify the architecture and operation of these synaptic circuits. The ideal hardware for probabilistic computing is likely to be low-barrier nanomagnets, and possibly CMOS. Ising machines and other systems that rely on the relaxation of an interacting assembly of devices to its many-body ground state can employ various technologies, although some may be better than others depending on the type of problem being solved. One technology receiving widespread attention is coupled oscillators, implemented with spin Hall nano-oscillators or CMOS. In this article, each paradigm is illustrated with a particular technological substrate, whether CMOS, nanomagnets, memristive elements, or something else, because the paradigm is easy and convenient to implement with that technology. Additionally, the chosen technology may reduce SWaP, which is an important consideration.

The manuscript is organized as follows. Section 1 deals with using magnetism to implement unconventional computing at the nanoscale. Sections 1.1 and 1.2 focus on spintronic technology based on magnetic tunnel junctions, while sections 1.3 and 1.4 discuss spin-wave and skyrmion-based approaches. Section 2 discusses the use of memristors for unconventional computing. Section 3 discusses nanomaterial systems for hardware implementation of unconventional computing, with section 3.2 focusing on two-dimensional materials. Section 4 delves into the realm of using probabilities for complex computations, including probabilistic computing in section 4.1 and quantum computing in section 4.2. Section 5 discusses the use of dynamical systems for complex computations: section 5.1 discusses how unconventional computation can exploit the intrinsic complex dynamics of physical systems, sections 5.2 and 5.3 consider models and hardware implementations of Boltzmann and Ising machines, and section 5.4 deals with memcomputing. Sections 6.1 and 6.2 deal with in-memory computing. Finally, section 7.1 considers brain-inspired computing, taking into account the complex interactions between the hippocampus and the cortex.

Each technology, of course, comes with its own challenges. The primary obstacles in spintronic and magnetic technologies are device-to-device variations, sensitivity to defects, and the deleterious effects of thermal noise. For spin wave devices, the challenge is to find efficient interfaces for input and output. For skyrmionic computing, the roadblocks involve inadequate control over skyrmion size, stability, creation, annihilation, and electrical readout. Memristive technologies may face material challenges and have to contend with device-to-device variability and the difficulty of large-scale integration; scalability is also an issue. Advances at the nanomaterial level are indispensable for the transition to system-level applications. Emerging tunable memristive systems, such as memtransistors based on two-dimensional materials and mixed-dimensional heterojunctions, pose additional challenges, including wafer-scale growth, transfer, and possible integration in back-end-of-the-line (BEOL) circuits. For probabilistic computing, the main challenges are device-to-device variation, which hinders large-scale integration, and slow computational speed when p-bits are implemented with low-barrier nanomagnets made of common ferromagnets, owing to the low number of flips per second that determines the p-computer rate. Control, coherence, and readout are the looming obstacles for spin-based qubits. Coupled-oscillator technology for Ising machines is challenged by the need for efficient simulated annealing techniques that avoid metastable states (sub-optimal solutions) and speed up computation. Compute-in-memory faces materials-related challenges and implementation complexity. Finally, brain-inspired computing has to contend with many daunting challenges, such as the faithful reproduction of synaptogenesis and dendritogenesis, that are difficult to implement with artificial devices and circuits.

By its nature, no roadmap article can claim to be completely comprehensive, and we make no such claim either. New ideas and technologies germinate fast, and today's state of the art becomes obsolete tomorrow. Here, we have presented some of the dominant and most promising technologies for unconventional computing that we believe will endure for some time.

Finally, a critical need is to create standardized metrics to benchmark different approaches to unconventional computing. Their performance should be evaluated at various levels, including the hardware (device and circuit) and algorithmic levels. Developing standardized metrics is a complex task that requires interdisciplinary collaboration and a deep understanding of the underlying principles and design goals. However, by establishing rigorous metrics, researchers and practitioners can effectively compare and evaluate the performance of different unconventional computing systems. Through its holistic approach, this roadmap article could contribute to achieving that objective.

1.1. Magnetic architectures for unconventional computing

Jean Anne C Incorvia1, Supriyo Bandyopadhyay2 and Joseph S Friedman3

1 Chandra Family Department of Electrical and Computer Engineering, University of Texas at Austin, Austin, TX 78712, United States of America

2 Department of Electrical and Computer Engineering, Virginia Commonwealth University, Richmond, VA 23284, United States of America

3 Department of Electrical and Computer Engineering, University of Texas at Dallas, Richardson, TX 75080, United States of America

Email: [email protected], [email protected] and [email protected]

Status

Next-generation unconventional computing will address key needs and problems for processing increasingly large and unstructured data workloads, as well as the increase in edge computing devices and corresponding energy constraints. Some problems that unconventional computing addresses include the bottleneck between compute and memory; the large energy and delay penalty of analog-to-digital conversion; computing with small energy budgets; and application-specific computing with balanced energy, time, and precision needs, since precise computing is not always required.

Magnetic thin films, both continuous and patterned into nanomagnets, have a long history in computing, starting with hard disk drives and including today's spin transfer torque and spin orbit torque-based magnetic random access memory (STT-MRAM, SOT-MRAM). Unconventional computing hardware (neuromorphic, Bayesian, Boltzmann machines) implemented with magnetic devices, e.g. magnetic tunnel junctions (MTJs), is attractive since the constituent elements are non-volatile and could be extremely energy-efficient. The device characteristics and inter-device interactions, which depend on the energy barrier within the free layer of the MTJ, can be tailored and controlled through multiple simultaneous knobs, such as current, voltage, strain, and magnetic fields, using both DC and AC inputs. This offers immense flexibility in designing hardware accelerators for machine learning, such as binary stochastic neurons (BSNs), neuromorphic components like synapses, Ising machines, etc.

The MTJ and corresponding devices also benefit from high endurance in switching the magnetic state, and from the fact that, under normal operation, the resistance states can be set in a controllable way, without drift over time or over cycles. This stability and robustness of the bit state control (not necessarily the states themselves, which can be tuned between stable and stochastic) can compensate for some of the challenges MTJs face.

Whereas MTJs with low energy barriers exhibit continual stochastic switching between resistance states, which is useful for BSNs, MTJ memory devices with high energy barriers exhibit an alternative stochastic phenomenon: the switching between the two stable states is intrinsically stochastic. This stochastic writing process gives these binary memory devices analog behavior, enabling their use in neuromorphic systems of the type described in figure 2(a). Furthermore, binary MTJ states are inherently robust against the variations and stochastic behavior that plague memristors and phase-change memory, thereby making non-volatile MTJ synapses a promising technology for neuromorphic computing.
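The analog behavior obtained from stochastic writing can be illustrated with a simple thermally activated switching model. The sketch below assumes P_sw = 1 - exp(-t/τ) with τ = τ0 exp[Δ(1 - I/I_c)], a common sub-critical approximation; the barrier Δ = 40 kT, I_c = 100 µA, τ0 = 1 ns, the 10 ns pulse, and the idea of grouping 1000 MTJs into one "compound synapse" are hypothetical values chosen for illustration.

```python
import math
import random


def switching_probability(current, pulse_s, delta=40.0, i_c=100e-6, tau0=1e-9):
    """P_sw = 1 - exp(-t_pulse / tau), with tau = tau0 * exp(delta * (1 - I / I_c)).

    A thermally activated (sub-critical) model of a high-barrier MTJ; delta is the
    barrier in units of kT. All default parameter values are hypothetical.
    """
    ratio = min(current / i_c, 1.0)  # the model is only meant for I <= I_c
    tau = tau0 * math.exp(delta * (1.0 - ratio))
    return 1.0 - math.exp(-pulse_s / tau)


def stochastic_write(states, current, pulse_s, rng):
    """Apply one write pulse to an array of binary MTJs (0 = low R, 1 = high R).

    Each device still in state 0 switches to 1 independently with probability P_sw;
    devices already in state 1 are left unchanged.
    """
    p = switching_probability(current, pulse_s)
    return [1 if s == 1 or rng.random() < p else 0 for s in states]


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical compound synapse of 1000 binary MTJs: the fraction in state 1
    # acts as an analog weight that a single 10 ns pulse moves by an amount set by I.
    for i_ua in (80, 90, 95):
        states = stochastic_write([0] * 1000, i_ua * 1e-6, 10e-9, rng)
        print(i_ua, "uA ->", sum(states) / len(states))
```

The steep dependence of P_sw on the current is what lets a binary device act as a graded, probabilistic update during learning.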

Figure 2. (a) Neuromorphic crossbar array with the stochastic writing of MTJ synapses. (b) Example domain wall-MTJ, here patterned as a synapse, with patterned blue/white/red domain wall track and blue output tunnel junction, including notches to control the domain wall position. (c) A two-node Bayesian network implemented with two MTJs. (d) Reservoir computer comprised of frustrated nanomagnets.


Neural network crossbar arrays can be implemented using nanomagnets as both the artificial synapses and the artificial neurons. By using a top-pinned MTJ stack and extending the bottom magnetic layer into a longer track, the MTJ can be configured as a domain wall-magnetic tunnel junction (DW-MTJ), shown in figure 2(b). Subsequent patterning choices then allow the device to show analog resistance states as a synapse [1] or to act as a neuron [2]. While a domain wall, or similarly a magnetic skyrmion, can be harder to control than a single-domain nanomagnet, it provides additional bio-mimetic functions for unconventional computing such as time delays, stochastic pinning and depinning, and frequency-based switching. It can also benefit from magnetic field interactions between the domain walls of neighboring devices.
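A simple way to see how a DW-MTJ yields analog synaptic weights is to treat the two magnetic domains under the pillar as parallel conductance channels, so that the conductance varies linearly with the domain wall position and notches quantize it into discrete levels. This is a first-order sketch under that assumption; the pillar length, R_P = 5 kΩ, R_AP = 10 kΩ, and the five evenly spaced notch positions are hypothetical.

```python
def dw_mtj_conductance(dw_position, mtj_length, g_p, g_ap):
    """Conductance of a DW-MTJ synapse with the wall located under the MTJ pillar.

    Assumes the free-layer region on one side of the wall (length dw_position) is
    parallel to the reference layer and the rest is antiparallel, and that the two
    regions conduct in parallel through the tunnel barrier.
    """
    f = min(max(dw_position / mtj_length, 0.0), 1.0)  # parallel area fraction
    return f * g_p + (1.0 - f) * g_ap


def notched_levels(n_notches, mtj_length, g_p, g_ap):
    """Conductance levels when evenly spaced notches pin the wall at n_notches positions."""
    step = mtj_length / (n_notches - 1)
    return [dw_mtj_conductance(k * step, mtj_length, g_p, g_ap) for k in range(n_notches)]


if __name__ == "__main__":
    # Hypothetical values: 100 nm pillar, R_P = 5 kOhm, R_AP = 10 kOhm, five notches.
    for g in notched_levels(5, 100e-9, 1 / 5e3, 1 / 10e3):
        print(f"{g * 1e6:.1f} uS")
```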

Belief networks (Bayesian inference engines) are another genre of unconventional computers, used for computing in the presence of uncertainty. They are difficult to implement with most technologies since they require non-reciprocal synapses. Simple two-node networks consist of a parent and a child node, where the child node's state depends on that of the parent, but not the other way around. Two dipole-coupled MTJs of different shapes built on a piezoelectric substrate can implement this paradigm easily, as shown in figure 2(c). The degree of correlation or anti-correlation between the nodes can be varied with global strain applied to both MTJs via the piezoelectric [3], which can enable Bayesian inference [4]. The synaptic connection between the nodes is dipole coupling, which consumes no area on the chip and dissipates no energy since it does not involve current flow.
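The computation such a two-node network performs can be written down directly. The sketch below computes P(child = 1) and the posterior P(parent = 1 | child = 1) with Bayes' rule, and also estimates the posterior by sampling, which is closer to how stochastic magnetic hardware would operate. The conditional probabilities are hypothetical placeholders for values that, in the device of figure 2(c), would be set by the dipole coupling and the applied strain.

```python
import random


def two_node_posterior(p_parent, p_child_if_parent, p_child_if_not_parent):
    """Exact inference for a parent -> child belief network with binary nodes.

    Returns (P(child = 1), P(parent = 1 | child = 1)) using Bayes' rule.
    """
    joint_11 = p_parent * p_child_if_parent               # P(parent = 1, child = 1)
    joint_01 = (1.0 - p_parent) * p_child_if_not_parent   # P(parent = 0, child = 1)
    p_child = joint_11 + joint_01
    return p_child, joint_11 / p_child


def sampled_posterior(p_parent, p_child_if_parent, p_child_if_not_parent, n=100_000, seed=0):
    """Estimate P(parent = 1 | child = 1) as stochastic hardware would: sample the
    parent, then the child conditioned on it, and keep only samples with child = 1."""
    rng = random.Random(seed)
    kept = hits = 0
    for _ in range(n):
        parent = rng.random() < p_parent
        child = rng.random() < (p_child_if_parent if parent else p_child_if_not_parent)
        if child:
            kept += 1
            hits += parent
    return hits / kept


if __name__ == "__main__":
    # Hypothetical probabilities standing in for coupling- and strain-set values.
    print(two_node_posterior(0.3, 0.9, 0.2))
    print(sampled_posterior(0.3, 0.9, 0.2))
```

The directionality is explicit in the code: the child is sampled from the parent's state, never the reverse, which is the non-reciprocity the dipole-coupled pair must provide.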

While trained neuromorphic computing systems promise exceptional capabilities, the training process incurs significant hardware costs in terms of energy, area, and speed. Reservoir computing provides an opportunity to avoid those costs by using a system that requires minimal training: the bulk of the system is untrained, and only a single output layer must be trained. Nanomagnetism naturally provides such reservoirs, as irregular arrays of closely packed nanomagnets exhibit frustration that produces complex physical dynamics and hysteresis, as shown in figure 2(d). All these extraordinary capabilities make magnetic architectures for unconventional computing unique and attractive.
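The reservoir principle, a fixed untrained dynamical system followed by a single trained linear readout, can be sketched in software with an echo state network. Here a random recurrent tanh network stands in for the frustrated nanomagnet array; it is not a micromagnetic model, and the network size, spectral radius, ridge parameter, and one-step memory task are illustrative assumptions.

```python
import numpy as np


def run_reservoir(u, n_nodes=100, spectral_radius=0.9, input_scale=0.5, seed=0):
    """Drive a fixed, random, untrained recurrent network with the input sequence u.

    x_t = tanh(W x_{t-1} + w_in u_t); W is rescaled to the given spectral radius so
    that the reservoir has fading memory. Returns the states, one row per time step.
    """
    rng = np.random.default_rng(seed)
    w = rng.normal(size=(n_nodes, n_nodes))
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    w_in = input_scale * rng.uniform(-1.0, 1.0, size=n_nodes)
    x = np.zeros(n_nodes)
    states = np.empty((len(u), n_nodes))
    for t, u_t in enumerate(u):
        x = np.tanh(w @ x + w_in * u_t)
        states[t] = x
    return states


def train_readout(states, target, ridge=1e-4):
    """Train only the linear output layer (with a bias term) by ridge regression."""
    X = np.hstack([states, np.ones((states.shape[0], 1))])
    w_out = np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ target)
    return w_out, X @ w_out


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    u = rng.uniform(-0.5, 0.5, 1200)
    states = run_reservoir(u)
    washout = 100                      # discard the initial transient
    target = u[washout - 1:-1]         # task: output the previous input, y_t = u_{t-1}
    _, y_hat = train_readout(states[washout:], target)
    r2 = 1.0 - np.mean((y_hat - target) ** 2) / np.var(target)
    print(f"training R^2 = {r2:.3f}")
```

Only `w_out` is learned, by a single linear solve; in a nanomagnetic reservoir the `run_reservoir` step would be replaced by the physical dynamics and a measurement of the nanomagnet states.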

Current and future challenges

The year 2021 heralded the first three experimental demonstrations of neural networks with synapse weights encoded in binary MTJ states; all three performed some type of recognition task. In the simplest of these experiments, a 4 × 2 single-layer neuromorphic network was directly implemented with MTJ synapses [5] to perform vector-matrix multiplication. More complex MTJ-based synapse structures were used in a two-layer (13 × 6 + 6 × 3) network as well as a 64 × 64 single-layer network [6]. The key future challenges for this neuromorphic computing approach are scaling to large network dimensions and the experimental demonstration of learning through stochastic switching.
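The vector-matrix multiplication performed by such MTJ crossbars follows from Ohm's and Kirchhoff's laws. The sketch below encodes each signed binary weight in a pair of binary MTJs (a differential-pair scheme assumed here for illustration; it is not necessarily the encoding used in [5] or [6]) and uses hypothetical resistances R_P = 5 kΩ and R_AP = 10 kΩ for a 4 × 2 array.

```python
# Hypothetical device values: R_P = 5 kOhm (low resistance), R_AP = 10 kOhm (high).
R_P, R_AP = 5e3, 10e3
G_P, G_AP = 1.0 / R_P, 1.0 / R_AP


def program_pair(weight):
    """Map a binary weight (+1 or -1) onto a pair of binary MTJ conductances (G+, G-)."""
    return (G_P, G_AP) if weight > 0 else (G_AP, G_P)


def crossbar_vmm(voltages, weights):
    """Column currents of a differential crossbar: I_j = sum_i V_i (G+_ij - G-_ij).

    weights[i][j] connects input row i to output column j. Each column sums the
    currents V_i * G_ij (Kirchhoff's current law), so the result equals
    (G_P - G_AP) * sum_i V_i * w_ij.
    """
    n_out = len(weights[0])
    currents = []
    for j in range(n_out):
        i_plus = sum(v * program_pair(weights[i][j])[0] for i, v in enumerate(voltages))
        i_minus = sum(v * program_pair(weights[i][j])[1] for i, v in enumerate(voltages))
        currents.append(i_plus - i_minus)
    return currents


if __name__ == "__main__":
    v = [0.1, 0.2, 0.0, 0.1]                     # input voltages (V), 4 rows
    w = [[1, -1], [-1, -1], [1, 1], [1, -1]]     # 4 x 2 binary weights
    print(crossbar_vmm(v, w))                    # column currents in amperes
```

The output is scaled by G_P - G_AP, so a small on/off ratio directly reduces the signal available to the downstream neuron.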

DW-MTJs have also been demonstrated recently [1, 2, 7]. Clear needs are better understanding and control of the domain wall behavior over many cycles, especially without needing to refresh the devices; all-electrical control without the need for external magnetic fields to aid domain wall movement; scaling down to modern feature sizes; and scaling up for larger circuit demonstrations, including better understanding of device-to-device variations and their impact on unconventional computing applications.

As a first step towards the development of reservoir computers based on frustrated nanomagnetism, micromagnetic simulation studies have demonstrated their memory capacity and expressivity [8]. These systems have been shown to successfully perform complex classification tasks, including waveform identification, Boolean operations based on previous inputs, and observation and prediction of dynamical discrete time series. Furthermore, comparative simulation studies indicate a 60x improvement in energy efficiency relative to conventional CMOS systems [8]. However, experimental demonstration and proof of concept remain a significant challenge.

Neuromorphic computing is generally much more forgiving of switching errors than Boolean logic, but it is not necessarily very tolerant of large device-to-device variations. The response time of BSNs, for example, can change dramatically in the presence of fabrication defects or slight shape variations, resulting in significant device-to-device variations that are a challenge for large-scale networks. One way to counter this is to adopt hardware-aware in-situ learning [9]. Another is to replace the common ferromagnets used in MTJs with dilute magnetic semiconductors, which have saturation magnetization several orders of magnitude lower. That makes the energy barriers in the nanomagnets much less sensitive to shape and size variations and suppresses device-to-device variations [10].
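Why small shape variations matter so much for stochastic nanomagnets, and why a lower saturation magnetization helps, can be seen from the Néel-Arrhenius relation τ = τ0 exp(E_b/kT) together with the shape-anisotropy barrier E_b = (μ0/2) M_s² V ΔN. The Python sketch below assumes this model for an in-plane elliptical nanomagnet, with a hypothetical 100 nm × 90 nm × 2 nm ellipse, ΔN = 0.02, and illustrative M_s values that do not correspond to specific materials; it compares how much a 10% change in ΔN changes the fluctuation time.

```python
import math

MU0 = 4e-7 * math.pi        # vacuum permeability (T m / A)
K_B = 1.380649e-23          # Boltzmann constant (J / K)


def shape_barrier(ms, volume, delta_n):
    """In-plane shape-anisotropy barrier E_b = (mu0 / 2) * Ms^2 * V * delta_n (joules)."""
    return 0.5 * MU0 * ms ** 2 * volume * delta_n


def retention_spread(ms, volume, delta_n, rel_variation, temperature=300.0):
    """tau(varied) / tau(nominal) for tau = tau0 * exp(E_b / kT) when delta_n changes by
    the fraction rel_variation; tau0 cancels in the ratio."""
    kt = K_B * temperature
    e_nominal = shape_barrier(ms, volume, delta_n)
    e_varied = shape_barrier(ms, volume, delta_n * (1.0 + rel_variation))
    return math.exp((e_varied - e_nominal) / kt)


if __name__ == "__main__":
    volume = math.pi / 4 * 100e-9 * 90e-9 * 2e-9   # hypothetical elliptical nanomagnet (m^3)
    for ms in (1e6, 1e4):                           # illustrative Ms values (A/m)
        print(f"Ms = {ms:.0e} A/m: tau ratio = {retention_spread(ms, volume, 0.02, 0.10):.3g}")
```

Because the barrier scales as M_s², the same geometric imperfection shifts E_b by far less in a low-M_s magnet, and the exponential sensitivity of τ is suppressed accordingly.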

A challenge with ferromagnetic devices is their relatively slow switching speed of ∼1 ns, which creates a bottleneck in training and inference in both recurrent and deep neural networks. There has been some recent interest in harnessing anti-ferromagnetic materials, which are capable of much higher speeds, for synapses. This is a nascent field, but important discoveries may be around the corner. All these challenges make magnetic architectures for unconventional computing a fertile field of research.

Advances in science and technology to meet challenges

One of the critical challenges for neural networks based on both conventional MTJs and DW-MTJs is efficient network training. As conventional supervised learning algorithms (e.g. backpropagation) become increasingly complex when scaled to large and deep networks, the hardware costs for implementing this mathematical circuitry hinder the development of neural networks with online learning. Preliminary explorations of unsupervised learning algorithms with MTJs and DW-MTJs [5] indicate that local Hebbian learning rules can be used with feedback circuits to efficiently train neural networks with minimal energy, speed, and area costs.
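A local learning rule of this kind needs no gradient computation: each synapse is updated only from the activity of the two neurons it connects. The sketch below is a generic stochastic Hebbian rule for binary synapses, assumed here for illustration rather than reproduced from [5]; the switching probability `p_switch` plays the role of the probability that a write pulse switches an MTJ, and the 4 × 2 network and training pattern are hypothetical.

```python
import random


def hebbian_step(weights, pre, post, p_switch, rng):
    """One local, stochastic Hebbian update of binary synapses.

    weights[i][j] in {0, 1} connects input i to output j; pre and post are 0/1
    activity vectors. For every active output, each synapse is driven towards the
    input's activity (potentiated if the input was active, depressed otherwise),
    but the write succeeds only with probability p_switch, mimicking stochastic
    MTJ switching. No error signal is propagated backwards.
    """
    for j, fired in enumerate(post):
        if not fired:
            continue
        for i, active in enumerate(pre):
            if rng.random() < p_switch:
                weights[i][j] = 1 if active else 0
    return weights


if __name__ == "__main__":
    rng = random.Random(0)
    w = [[rng.randint(0, 1) for _ in range(2)] for _ in range(4)]
    pattern, label = [1, 0, 1, 0], [1, 0]   # output 0 should learn this input pattern
    for _ in range(50):
        hebbian_step(w, pattern, label, p_switch=0.3, rng=rng)
    print([row[0] for row in w], [row[1] for row in w])
```

Each update touches only one synapse with information available at its own terminals, which is what keeps the feedback circuitry small compared with backpropagation.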

Realization of reservoir computing systems based on frustrated nanomagnets will require advances in experimental techniques for providing the input signals while contemporaneously measuring the magnetization of the various nanomagnets that make up the reservoir. The inputs can be provided via STT switching, and the output magnetizations can be read through an MTJ (preliminary experimental efforts may focus on imaging the output). These output signals must be fed to a single trained layer.

Domain wall creep and the stochasticity of domain wall motion at room temperature pose significant obstacles to DW-MTJ technologies. Recent progress with notched structures [1, 2] has ameliorated some of the difficulties, but further materials research is needed to find possibly simpler solutions in which the intrinsic material properties suppress or control the stochastic behavior of domain wall and skyrmion motion.

A well-known challenge with magnetic devices such as MTJs is the low on/off ratio and low overall resistance, which typically results in low training accuracies in neuromorphic architectures [6]. Research is needed to find proper material combinations to increase the tunneling magneto-resistance or on/off ratios of MTJs to alleviate this problem. This field has a long history and unfortunately has been slow in making progress. However, its importance cannot be overstated since a high on/off ratio provides a wider range of synaptic weights and improves error tolerance.

Challenges in patterning MTJ-based structures lead to device-to-device variation that can increase as feature size is reduced. This challenge compounds with the low on/off ratio to blur the difference between the 0 and 1 states, and is even more of an issue if additional resistance levels are desired between 0 and 1. While good control of the device resistance states can help, better patterning methods are needed, as well as more effort in circuit design to work around these challenges.
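The combined effect of a small on/off ratio and device-to-device variation can be estimated with a simple Gaussian model. The sketch below assumes that each resistance level has a normally distributed spread proportional to its value and that levels are read with midpoint thresholds; R_P = 5 kΩ and a 5% spread are hypothetical defaults, and the on/off ratio is taken as 1 + TMR.

```python
import math


def q_function(x):
    """Gaussian tail probability P(Z > x) for a standard normal Z."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def readout_error(tmr, n_levels=2, r_p=5e3, rel_sigma=0.05):
    """Average misread probability for resistance levels between R_P and R_AP.

    R_AP = R_P * (1 + TMR), so the on/off ratio is 1 + TMR. The n_levels states are
    evenly spaced between R_P and R_AP, each with Gaussian device-to-device spread
    sigma = rel_sigma * R, and read with thresholds midway between adjacent levels.
    """
    r_ap = r_p * (1.0 + tmr)
    step = (r_ap - r_p) / (n_levels - 1)
    total = 0.0
    for k in range(n_levels):
        r = r_p + k * step
        sigma = rel_sigma * r
        # inner levels can be misread on both sides, the outermost levels on one side only
        n_boundaries = (k > 0) + (k < n_levels - 1)
        total += n_boundaries * q_function(0.5 * step / sigma)
    return total / n_levels


if __name__ == "__main__":
    for tmr in (0.5, 1.0, 2.0):
        print(tmr, readout_error(tmr), readout_error(tmr, n_levels=4))
```

Raising the TMR widens the gap between levels, while inserting additional levels between 0 and 1 shrinks it, which is why multilevel operation is more demanding.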

Concluding remarks

The human brain consumes 1–100 fJ of energy per synaptic event. Magnetic devices can rival (or even eclipse) this energy efficiency. Their non-volatility offers additional architectural advantages, e.g. in reservoir computing [8].

The low energy consumption has other benefits: it enhances hardware security, which is very important for artificial intelligence. Because of the low power requirement, these architectures can be embedded in edge devices that have minimal contact with the cloud and are therefore somewhat insulated from cloud-borne attacks. Additionally, they are inherently resilient against malign hardware. A hardware Trojan, no matter how surreptitious, will consume some energy, and that energy can become comparable to (or exceed) the energy consumed by the magnetic hardware itself. Therefore, Trojans can be readily detected with side-channel monitoring.

Finally, neuromorphic computing with anti-ferromagnetic devices is a burgeoning area of research laden with promise and it can spawn new devices and architectures that will speed up training and inference tasks immensely. This is an exciting area of research that is about to bear fruit.

Acknowledgments

The work of S B in this field has been supported by the National Science Foundation under Grants CCF-2001255 and CCF-2006843. The work of J A C I in this field has been supported by the National Science Foundation under Grants EPMD-2225744, CCF-1910997, and CCF-2006753, as well as Sandia National Laboratories. The work of J S F in this field has been supported by the National Science Foundation Grants CCF-1910800 and CCF-2146439, and the Semiconductor Research Corporation.

1.2. The impact of spintronics in neuromorphic computing

Qu Yang1, Anna Giordano2, Julie Grollier3, Giovanni Finocchio4 and Hyunsoo Yang1

1 Department of Electrical and Computer Engineering, National University of Singapore, Singapore 117576, Singapore

2 Department of Engineering, University of Messina, 98166 Messina, Italy

3 Laboratoire Albert Fert, CNRS, Thales, Université Paris Saclay, 91767, Palaiseau, France

4 Department of Mathematical and Computer Sciences, Physical Sciences and Earth Sciences, University of Messina, 98166 Messina, Italy

Email: [email protected], [email protected], [email protected], [email protected] and [email protected]

Status

Neuromorphic spintronics aims to develop spintronic hardware devices and circuits based on brain-inspired principles [11]. Conventional complementary metal–oxide–semiconductor (CMOS) neuron and synapse designs require numerous transistors and feedback mechanisms, making them poorly suited to modern artificial intelligence systems. Spintronics is a promising approach to neuromorphic computing as it potentially enables energy- and area-efficient embedded applications by mimicking key features of biological synapses and neurons with a single device instead of multiple electronic components [11, 12].

As discussed in section 1.1, the main building block for neuromorphic spintronics is the magnetic tunnel junction (MTJ), which offers several advantages over other technologies, including CMOS compatibility, low power consumption, outstanding read/write endurance, non-volatility, and high speed [11]. Krzysteczko et al carried out the first work on the spintronic implementation of memristive functionalities, via voltage-induced switching in MTJs [13]. Subsequently, various MTJ-based spintronic structures have been proposed as potential solutions for bio-faithful neuronal computation [14]. For example, MTJs have been used to realize memristors for storing synaptic weights, neuron activation functions (such as ReLU-like and sigmoidal), reservoir computing, and in-memory computing.

Figure 3(a) illustrates an example of an MTJ-based integrate-and-fire spiking neuron [14]. In this case, the current-induced spin orbit torque (SOT) drives domain wall motion (DWM), so that the domain wall position integrates the input. When the domain wall reaches a critical position (the threshold), the neuron emits a 'fire' signal. Based on similar principles, more sophisticated spin-based neuron models have since been developed, and analogous structures using magnetic skyrmions (discussed in section 1.4) instead of domain walls as information carriers have also been constructed, see figures 3(b) and (c) [12, 15]. Figure 3(d) presents a spin-torque nano-oscillator (STNO)-based neuron that emulates a Hodgkin-Huxley analogue model, overcoming the limitations of the integrate-and-fire model [16]. The proposed device harnesses the combined effects of magnetization dynamics and temperature fluctuations within the STNO, generating a sequence of spikes whose frequency depends on the amplitude of the constant applied current. Moreover, various SOT neuromorphic solutions have also been demonstrated experimentally, including ultrafast neuromorphic spintronics, field-free artificial neurons, auto-reset stochastic neurons, and stochastic artificial synapses.
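The integrate-and-fire behavior described above can be captured by a leaky integrate-and-fire model in which the domain wall position plays the role of the membrane potential. The sketch below assumes dx/dt = μI - x/τ_leak, with a hypothetical restoring term standing in for leakage, a threshold position, and an instantaneous reset; the parameter values are illustrative and not fitted to the devices in [14].

```python
def dw_lif_spike_count(current, steps=1000, dt=0.1, mobility=1.0,
                       tau_leak=10.0, x_threshold=1.0):
    """Count spikes of a domain-wall leaky integrate-and-fire neuron (Euler integration).

    The wall position x is the 'membrane potential': dx/dt = mobility * I - x / tau_leak,
    i.e. current-driven (SOT) motion plus a hypothetical restoring leak. When x reaches
    x_threshold the neuron fires and the wall is reset to x = 0.
    """
    x = 0.0
    spikes = 0
    for _ in range(steps):
        x += dt * (mobility * current - x / tau_leak)
        if x >= x_threshold:
            spikes += 1
            x = 0.0  # in hardware this needs a reset pulse or an auto-reset mechanism
    return spikes


if __name__ == "__main__":
    for i in (0.05, 0.5, 1.0):
        print(f"I = {i}: {dw_lif_spike_count(i)} spikes")
```

Below the current at which the leak balances the drive (mobility × I × τ_leak < x_threshold) the neuron never fires; above it the firing rate grows with current. The reset line in the loop is where the hardware cost discussed below appears: a physical device needs a reset pulse, or an auto-reset mechanism, to return the wall to its starting position.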

Figure 3. Different schemes for spintronic implementation of firing neurons. (a)–(c) Emulate the leaky integrate-and-fire (and reset) model, while (d) emulates the biorealistic Hodgkin-Huxley model. Reprinted with permission from [12]. Copyright (2022) American Chemical Society. Reprinted from [14], with the permission of AIP Publishing. Reprinted from [15], with the permission of AIP Publishing. Reprinted (figure) with permission from [16], Copyright (2023) by the American Physical Society.

Spintronics could be powerful for neuromorphic computing because it allows data processing and storage to take place locally, within the same device. To this end, a rich variety of spintronic materials and device designs has been explored in proof-of-concept neuromorphic implementations. In neural networks, the paradigm is now shifting from frame-based to event-based processing, exploiting spiking neurons in spiking neural networks, an approach that is closer to how the brain works. These advances have further stimulated research towards large-scale brain-inspired spintronic systems.

Current and future challenges

There are important challenges to overcome for the further development of neuromorphic spintronics. One of the biggest is that the read-out signal of spintronic devices is small, which makes fast read-out difficult. As discussed in section 1.1, significant research effort has been devoted to enhancing the tunneling magnetoresistance (TMR) of MTJs. Nonetheless, the resistance change of MTJs (on/off ratios typically between one and three) remains modest compared with other memory technologies. To address this issue, researchers have explored integrating MTJs with CMOS technology to achieve a higher on/off ratio and lower leakage current [6].
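To make the link between TMR and the on/off ratio explicit: with TMR defined as (R_AP - R_P)/R_P, the on/off ratio R_AP/R_P equals 1 + TMR, so a TMR of 200% corresponds to a ratio of 3. The short sketch below computes this, together with the read currents at a fixed read voltage; the resistance and voltage values are hypothetical illustration values.

```python
def on_off_ratio(tmr: float) -> float:
    """R_AP / R_P for a junction with TMR = (R_AP - R_P) / R_P (TMR as a fraction)."""
    return 1.0 + tmr


def read_currents(v_read: float, r_p: float, tmr: float) -> tuple[float, float]:
    """Read currents (A) in the parallel and antiparallel states at bias v_read (V)."""
    r_ap = r_p * on_off_ratio(tmr)
    return v_read / r_p, v_read / r_ap


# Hypothetical junction: R_P = 5 kOhm, TMR = 150 %, read at 0.1 V.
i_p, i_ap = read_currents(0.1, 5e3, 1.5)
print(f"on/off = {on_off_ratio(1.5):.1f}, I_P = {i_p * 1e6:.1f} uA, I_AP = {i_ap * 1e6:.1f} uA")
```

The read margin is the difference between the two currents, which shrinks as the on/off ratio approaches one.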

Spintronic neurons typically require a reset pulse of opposite polarity and sufficiently high magnitude (equal to or several times larger than that required for writing) [12]. This not only increases the energy consumption and complexity of the chip, but also reduces areal density because of the additional peripheral circuits required. Moreover, the extra reset step decreases the operating speed of the neural circuit, since the device cannot be used again until it has been reset. A bio-realistic neural device with auto-reset functionality is therefore desirable for energy-efficient and densely packed artificial neural networks.

Furthermore, implementing neural networks in spintronic hardware is challenging because the coupling of each neuron must be controlled. Synchronizing the devices, rather than tuning their individual responses, is one promising way to extend spintronic approaches to multilayer neural networks [17]. As detailed in section 1.1, device variability, response speed, and circuit design must be addressed when each neuron is connected to potentially thousands of synapses in a neural network.

Advances in science and technology to meet challenges

Considerable research effort has been devoted to addressing the challenges of spintronic neuromorphic computing. Spintronic memristors have been developed to emulate synaptic behavior (section 2.1). The inherent stochasticity of stochastic MTJs (S-MTJs) enables highly energy-efficient probabilistic computing tasks such as stochastic number generation and probabilistic spin logic (section 4.1). In addition, the switching probability of an S-MTJ can be tuned by an applied electrical bias, so the junction can emulate a Poisson neuron, which generates a spike train with a tunable firing rate.
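The tunable-rate spiking of an S-MTJ can be sketched as follows. The sketch assumes a sigmoidal dependence of the per-time-bin switching probability on the bias voltage, a common phenomenological description, with hypothetical parameters v50 and width. Each bin independently produces a spike with that probability, giving a Bernoulli spike train that approaches a Poisson process when the per-bin probability is small. The bin width dt is likewise an assumed value.

```python
import math
import random


def switching_probability(v_bias, v50=0.5, width=0.05):
    """Hypothetical sigmoidal per-bin switching probability of an S-MTJ.

    v50 is the bias (V) giving 50 % switching; width sets the slope.
    """
    return 1.0 / (1.0 + math.exp(-(v_bias - v50) / width))


def spike_train(v_bias, n_bins, rng):
    """One independent Bernoulli trial per time bin (1 = spike)."""
    p = switching_probability(v_bias)
    return [1 if rng.random() < p else 0 for _ in range(n_bins)]


def firing_rate(v_bias, n_bins=10_000, dt=1e-9, seed=0):
    """Estimated firing rate (spikes/s) for an assumed bin width dt (s)."""
    rng = random.Random(seed)
    spikes = spike_train(v_bias, n_bins, rng)
    return sum(spikes) / (n_bins * dt)


for v in (0.40, 0.45, 0.50, 0.55, 0.60):
    print(f"V = {v:.2f} V -> rate = {firing_rate(v) / 1e6:.0f} MHz")
```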

The unique features of STNOs have also been exploited for neuromorphic computing. Using time multiplexing, a single oscillator can function as a reservoir computer, a special type of neural network for time-series analysis [18]. In addition, the high tunability of STNOs facilitates coupling with other oscillators, which can emulate the synchronization of neurons, a mechanism important for information sharing and processing. Vowel classification at microwave frequencies has been demonstrated experimentally through the synchronization of STNOs [17]. The response of spin diodes has also been used to mimic neurons in the nonlinear regime and synapses in the linear regime. Frequency multiplexing appears to be a possible route to building multilayer neural networks with STNO neurons and spin-diode synapses.
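Time-multiplexed reservoir computing with a single nonlinear node can be sketched as below. Each input sample is multiplied by a random mask and fed sequentially to N virtual nodes, each coupled to its predecessor; only a linear readout is trained, here by ridge regression. The tanh nonlinearity stands in for the oscillator's nonlinear amplitude response, and the mask, leak rate, coupling gain and task (recalling the previous input) are all assumptions for illustration, not the configuration of [18].

```python
import numpy as np


def reservoir_states(u, n_virtual=50, leak=0.5, gain=0.9, seed=0):
    """Collect states of a time-multiplexed single-node reservoir.

    u: 1D input sequence. Returns an array of shape (len(u), n_virtual).
    tanh stands in for the oscillator's nonlinear response (assumption).
    """
    rng = np.random.default_rng(seed)
    mask = rng.uniform(-1.0, 1.0, n_virtual)  # fixed random input mask
    x = np.zeros(n_virtual)
    states = np.zeros((len(u), n_virtual))
    for k, uk in enumerate(u):
        new = np.empty(n_virtual)
        for i in range(n_virtual):
            # Each virtual node sees the masked input plus the preceding node;
            # node 0 couples to the last node of the previous input step.
            prev = new[i - 1] if i > 0 else x[-1]
            drive = np.tanh(mask[i] * uk + gain * prev)
            new[i] = (1.0 - leak) * x[i] + leak * drive
        x = new
        states[k] = x
    return states


def train_readout(states, target, ridge=1e-6):
    """Fit a linear readout (with bias) by ridge regression; return weights and fit."""
    X = np.hstack([states, np.ones((len(states), 1))])
    w = np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ target)
    return w, X @ w


# Toy task (assumed): recall the previous input sample.
u = np.random.default_rng(1).uniform(-1.0, 1.0, 300)
target = np.concatenate([[0.0], u[:-1]])
S = reservoir_states(u)
w, pred = train_readout(S, target)
print("training NMSE:", np.mean((pred - target) ** 2) / np.var(target))
```

Only the readout weights are learned; the physical node and its mask stay fixed, which is what makes a single oscillator attractive as a hardware reservoir.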

Future research should focus on integrating spintronic devices (e.g. SOT devices) into the MTJ-based magnetic random-access memory (MRAM) architecture to increase the read-out signal via TMR. For circuit design, shared write-channel SOT architectures can be explored to reduce the transistor count for large-scale arrays [19]. Adding a gate to these devices to enable volatile or non-volatile voltage-controlled anisotropy is likely to be critical for enhancing the computational capabilities of SOT-based architectures. To further enlarge the resistance change of MRAM and improve scaling, researchers have investigated novel crossbar array architectures (illustrated in figure 4) [6], as elaborated in section 6.2.

Figure 4. (a) Layout and (b) micrograph of the 64 × 64 MRAM crossbar array. (c) MRAM crossbar array architecture and (d) configuration of each bit-cell. Reproduced from [6], with permission from Springer Nature.

Concluding remarks

In conclusion, spintronics offers compelling opportunities for advancing neuromorphic computing by providing a range of bio-plausible hardware solutions. Various spintronic artificial synapses and neurons, driven by diverse physical mechanisms such as SOT, DWM, and magnetic skyrmions (section 1.4), have been demonstrated. These advances could be integrated into comprehensive brain-inspired spintronic systems. Nevertheless, several challenges remain, such as increasing the read-out signal, developing bio-realistic neural devices, controlling the coupling between neurons, and scaling up compact and energy-efficient artificial neural networks. State-of-the-art spintronic technologies that address these challenges have been discussed, including spintronic memristors, S-MTJs, STNOs, spin diodes, and new MTJ-based MRAM architectures. Spintronic neuromorphic computing is a fast-evolving field, and network-level neuromorphic computing with spintronics remains to be further explored experimentally and implemented in large-scale hardware neural networks.

Acknowledgments

The work is supported partially by the SpOT-LITE Programme (A*STAR Grant, A18A6b0057) through RIE2020 funds, the National University of Singapore Advanced Research and Technology Innovation Centre (A-0005947-19-00), National Research Foundation (NRF) Singapore (NRF-000214-00), Samsung Electronics' University R&D Programme, the project PRIN 2020LWPKH7 funded by the Italian Ministry of University and Research and by the European Union's Horizon 2020 research and innovation program under Grant RadioSpin No. 101017098 and under Grant SWAN-on-chip No. 101070287 HORIZON-CL4-2021-DIGITAL-EMERGING-01.

1.3. Spin wave-based computing

Florin Ciubotaru1, Andrii V Chumak2, Azad J Naeemi3 and Sorin D Cotofana4

1 IMEC, Leuven 3001, Belgium

2 Faculty of Physics, University of Vienna, Vienna 1090, Austria

3 School of Electrical & Computer Engineering, Georgia Institute of Technology, Atlanta, GA 30332, United States of America

4 Quantum and Computer Engineering Department, Delft University of Technology, Delft, CD 2628, The Netherlands

Status

Magnonics [20] is an emerging field of solid-state physics in which spin waves (SW), the collective excitations of magnetic order, and their quanta, magnons, are used instead of electrons for information transport and processing [21]. SW characteristics, e.g. frequencies in the GHz to THz range, wavelengths down to the atomic scale, pronounced nonlinear and nonreciprocal phenomena, tunability, and low-energy data transport and processing, offer many avenues towards SW-based nanoelectronics. Applied magnonics is growing rapidly, while SW sensing and SW radio-frequency applications, which are becoming increasingly important in view of 5G technology requirements, are still at an early stage of development. Boolean and unconventional SW computing have reached many milestones in recent years and continue to grow [20, 21]. Moreover, quantum magnonics is attracting increasing attention within the community [21] and potentially offers an additional, entanglement-related degree of freedom for quantum computing. The most important achievements relevant to Boolean computing are the experimental realization of the inline majority gate [22], the directional coupler, and the magnonic half adder [23], as well as the demonstration of basic circuits by means of micromagnetic simulations [24] (see figures 5(a)–(c)). Magnon-based unconventional computing [21] is primarily associated with neuromorphic computing [25, 26], although versatile wave-based computing approaches, including spectrum analysis and pattern recognition with magnonic holographic memory devices [27], can be placed in the same category (see figure 5(d)).
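The phase-encoded majority gate can be illustrated with an idealized linear-superposition model: logic 0 and 1 are encoded as phases 0 and π, three equal-amplitude waves interfere at the output, and the output phase gives the majority. This sketch ignores damping, dispersion and the actual gate geometry (assumptions), and the function names are hypothetical. It also reproduces the distinction between strong (all inputs equal, amplitude 3) and weak (two against one, amplitude 1) majority signals.

```python
import cmath
import math
from itertools import product


def sw_majority(bits):
    """Idealized phase-encoded spin-wave majority gate.

    Bit 0 -> phase 0, bit 1 -> phase pi; equal-amplitude waves add linearly
    at the output (no damping or dispersion, an assumption). Returns the
    output bit, read from the output phase, and the output amplitude.
    """
    total = sum(cmath.exp(1j * math.pi * b) for b in bits)
    bit = 1 if abs(cmath.phase(total)) > math.pi / 2 else 0
    return bit, abs(total)


def truth_table():
    return {bits: sw_majority(bits) for bits in product((0, 1), repeat=3)}


for bits, (out, amp) in truth_table().items():
    print(bits, "->", out, f"(amplitude {amp:.1f})")
```

The input-dependent output amplitude is one reason why directly cascading such gates is difficult.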

Figure 5. (a) Scanning electron micrograph of a sub-micron scaled spin wave majority gate (top) and the polar plot of the transmitted power for different input phases demonstrating strong and weak majority signals (bottom). Reproduced from [21]. CC BY 4.0. (b) 2D Brillouin light scattering spectroscopy maps of the spin-wave intensity recorded in a directional coupler for two excitation frequencies (f = 3.465 GHz and f = 3.58 GHz) (top). Operational principle of a magnonic half-adder demonstrated by micromagnetic simulations. Adapted from [23], with permission from Springer Nature. (c) 2-bit input spin-wave multiplier. © [2021] IEEE. Reprinted, with permission, from [24]. (d) Schematic of a nanomagnet based spin-wave scatterer (top), and a spin-wave intensity pattern (bottom) for neural network applications. Reproduced from [26]. CC BY 4.0. (e) Magnonic demultiplexer device simulated by inverse design micromagnetic simulations. Reproduced from [28]. CC BY 4.0.

The concept of inverse-design magnonics, in which a feedback-based computational algorithm derives a device design from a target functionality [28], has been successfully applied to radio-frequency applications [28] and neural networks [26]. This approach produces a device with a rectangular ferromagnetic functional region patterned with square-shaped voids, as depicted in figure 5(e). To demonstrate the universality of the approach, linear, nonlinear, and nonreciprocal magnonic functionalities were explored, and a magnonic (de)multiplexer (see figure 5(e)), a nonlinear switch, and a circulator were designed. Machine-learning-based inverse design has significant potential for any kind of data processing, including the realization of complex multi-bit Boolean logic gates or neuromorphic networks [26].
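The feedback loop behind inverse design can be sketched with a direct binary search: each pixel of the design region is toggled between material and void, and the change is kept only if it lowers a cost function. In the sketch below, the micromagnetic simulation is replaced by a hypothetical random linear map from the void pattern to output-port intensities, so only the structure of the optimization loop, not the physics, is illustrated. Whether [28] uses exactly this search strategy is not asserted here.

```python
import numpy as np


def simulate(pattern, response):
    """Hypothetical stand-in for a micromagnetic simulation: a fixed linear
    map from the binary void pattern to output-port intensities."""
    return response @ pattern.ravel()


def direct_binary_search(shape, response, target, n_sweeps=5, seed=0):
    """Greedy pixel-flip optimization; returns the pattern and cost history."""
    rng = np.random.default_rng(seed)
    pattern = rng.integers(0, 2, size=shape)  # 1 = material, 0 = void
    cost = np.sum((simulate(pattern, response) - target) ** 2)
    history = [cost]
    for _ in range(n_sweeps):
        improved = False
        for idx in rng.permutation(pattern.size):
            pos = np.unravel_index(idx, shape)
            pattern[pos] ^= 1  # toggle material <-> void
            trial = np.sum((simulate(pattern, response) - target) ** 2)
            if trial < cost:
                cost, improved = trial, True  # keep the flip
            else:
                pattern[pos] ^= 1  # revert the flip
        history.append(cost)
        if not improved:
            break  # local optimum: no single flip helps
    return pattern, history


rng = np.random.default_rng(3)
response = rng.normal(size=(2, 64))  # hypothetical 2-port "device"
pattern, history = direct_binary_search((8, 8), response, np.array([4.0, -4.0]))
print("cost per sweep:", [round(float(c), 3) for c in history])
```

In a real inverse-design flow, each cost evaluation is a full simulation, so the number of flips evaluated dominates the run time.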

Current and future challenges

Various simulations and experiments have clearly demonstrated that SW can serve as information carriers and that their interaction can be used for data processing. However, a fully magnonic computing system remains far out of reach until effective solutions are found for (i) constructing circuits out of magnonic gates and (ii) realizing magnonic memories. Typical circuit construction challenges include achieving fanout and gate cascading, which have recently been addressed in [24]. However, while the approaches proposed in [24] enable magnonic circuits by providing a fanout of up to 4 and direct gate cascading within the magnonic domain, they are expensive in terms of area and delay. More effective solutions are therefore required to unleash the full potential of magnonic computing. Unfortunately, apart from the holographic memory concept introduced in [27], which is not a true magnonic memory because it does not store data in magnons, very little progress has been made towards the conceptual realization of magnonic memories.

Moreover, building magnonic computing chips requires many more components than the simple magnetic waveguides used for SW propagation. Passing input data from CMOS (the charge domain) to the magnonic circuitry requires highly energy-efficient, scaled transducers. Typical SW transducers, e.g. inductive antennas or spin-transfer- or spin-orbit-torque-based magnetic tunnel junctions, are scalable but very inefficient in terms of energy consumption. Their energy-delay characteristics need to be improved by a few orders of magnitude to compete with CMOS computing circuitry. Voltage-driven transducers, e.g. those based on magnetoelectric effects, might reach the required energy efficiency, but such performance has yet to be demonstrated experimentally at the nanoscale. In addition to the transducers, the magnetic conduits should transport information with minimal loss and delay. Recent studies have demonstrated SW propagation lengths of several micrometers in scaled waveguides. However, not all of the studied materials (e.g. Y3Fe5O12) are CMOS compatible. CoFe(B)-based alloys are widely used in MRAM technology and are promising candidates for magnonic conduits. Nevertheless, further material development and optimization, especially for voltage-driven transducers, will be required. In addition, SW amplifiers might be needed to restore the amplitude lost during propagation if the circuit length exceeds the SW mean free path. These amplifiers also need to operate at very low energies (towards the aJ level), which suggests that amplification should also rely on voltage-driven mechanisms, e.g. voltage control of magnetic anisotropy (VCMA) or other magnetoelectric effects [29].
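A rough estimate of how many amplifiers a long magnonic interconnect would need follows from a simple model. The sketch assumes exponential amplitude decay with a characteristic decay length and that each amplifier fully restores the amplitude; the decay length and the minimum tolerable amplitude fraction are hypothetical inputs, with lengths in any consistent unit (e.g. µm).

```python
import math


def sw_amplitude(distance, decay_length, a0=1.0):
    """Spin-wave amplitude after propagating `distance` (exponential decay assumed)."""
    return a0 * math.exp(-distance / decay_length)


def amplifiers_needed(path_length, decay_length, min_fraction):
    """Intermediate amplifiers needed so the amplitude never falls below
    min_fraction of its restored value (each amplifier restores it fully)."""
    # Longest segment over which the amplitude stays above min_fraction.
    max_segment = decay_length * math.log(1.0 / min_fraction)
    return max(0, math.ceil(path_length / max_segment) - 1)


# Hypothetical numbers: 2 um decay length, amplitude must stay above 50 %.
for length in (1.0, 5.0, 10.0):
    print(f"{length:4.1f} um path -> {amplifiers_needed(length, 2.0, 0.5)} amplifier(s)")
```

For example, with a 2 µm decay length and amplitudes required to stay above 50%, a 10 µm path would need 7 intermediate amplifiers, whereas a 1 µm path would need none; every amplifier adds energy and delay, which is why compact layouts matter.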

Magnonic circuit layout design is much more challenging than that of charge-based circuits. SW propagation and interaction are very sensitive to waveguide dimensions and geometry; for example, SW behave differently in straight waveguides and around corners because of reflections. Layout can therefore significantly influence circuit performance and even cause a circuit to malfunction. Moreover, because of SW amplitude decay and dephasing, the circuit size (chip real estate) should be minimized by enabling 2D signal crossing and/or 3D interconnects.

Advances in science and technology to meet challenges

Much progress has been made in material development and in the realization and characterization of nanoscale SW conduits below 100 nm, as well as in understanding the underlying physical mechanisms of SW generation and propagation in both the linear and nonlinear regimes [21]. Most studies focus on SW properties (in 2D or 3D systems) and rely on optical characterization and/or micromagnetic simulations. However, the main challenge for building efficient magnonic computing circuits is the physical realization of an efficient energy-coupling interface for transferring information between the electric and magnetic domains. Research progress on the heterogeneous integration of multiferroic materials, magnetoelectric composites [29] and VCMA stacks for SW generation and detection could enable the demonstration of nanoscale cascaded logic gates in an all-electrical experiment. Furthermore, coupling phonons or photons to SW could bring additional functionalities and enhance or control SW characteristics, e.g. group velocity or amplitude.

State-of-the-art SW-based computing assumes phase encoding of information and is performed with majority gates whose output format is not consistent with their input format. Direct cascading of such gates therefore results in input-data-dependent circuit malfunctions. Although gate cascading solutions have been proposed, they are rather expensive and induce a large gate delay overhead, e.g. the gate delay increases from a few ns to more than 20 ns [24]. To build competitive SW circuits, more effective cascading schemes are required; potential solutions might be found through inverse design [26, 28] and/or by investigating alternative information encodings that result in directly cascadable gates and potentially allow more computation within a single gate. Given that information can be encoded in SW phase, amplitude, frequency, or any combination of these, a plethora of alternative and more effective SW computation paradigms could potentially be developed. Note that moving from charge-based circuits, e.g. CMOS, to SW circuits requires changes to the computer-aided design framework. Traditional Boolean-algebra-based logic synthesis must accommodate a new universal gate set composed of the majority gate and the inverter. Given that magnonic circuits are expected to be hybrid (interfaced with the environment in which they operate) and may include CMOS parts, a mixed micromagnetic-SPICE simulation framework, which does not yet exist, should be developed. SW circuit layout design is governed by completely different principles and has fundamental implications for circuit performance and behavior. A novel approach to producing correct-by-construction SW circuit layouts is therefore essential for the proliferation of the SW computing paradigm.
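To illustrate why the majority gate and the inverter form a universal gate set for logic synthesis, the sketch below builds AND and OR by fixing one majority input to a constant, and a full adder from three majority gates and two inverters. The Boolean functions stand in for SW gates; the decomposition is a standard majority-logic construction and is not claimed to be the circuit of [24].

```python
def maj(a, b, c):
    """3-input majority function (in hardware: an SW majority gate)."""
    return (a & b) | (a & c) | (b & c)


def inv(a):
    """Inverter (in hardware: e.g. a pi phase shift)."""
    return 1 - a


def and2(a, b):
    return maj(a, b, 0)  # a constant 0 input turns MAJ into AND


def or2(a, b):
    return maj(a, b, 1)  # a constant 1 input turns MAJ into OR


def full_adder(a, b, cin):
    """Full adder using three majority gates and two inverters."""
    cout = maj(a, b, cin)
    s = maj(inv(cout), cin, maj(a, b, inv(cin)))
    return s, cout


for a in (0, 1):
    for b in (0, 1):
        for cin in (0, 1):
            print(a, b, cin, "->", full_adder(a, b, cin))
```

The same full adder written with AND/OR/NOT needs considerably more gates, which is why synthesis tools targeting SW circuits benefit from treating majority as a native primitive.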

Concluding remarks

Spin waves have been shown to possess high potential not only for computing but also for other emerging sensing and radio-frequency applications. A deep understanding of the associated physical phenomena, together with the development of materials and device fabrication techniques, will allow the transition from fundamental research to engineered devices in the near future. For computing applications, however, SW devices should be integrated into hybrid magnonic-CMOS architectures, in which the magnonic units are used to solve specific, energy-consuming tasks. Several key components for this are still to be developed, as explained in this roadmap. Regardless of the computing paradigm, an efficient information transfer interface between CMOS and magnonic circuits is the key enabling factor for real technologies. The development of multi-physics and SPICE design tools for device simulation as well as for circuit layout will further pave the way for applications. Last but not least, novel computing architectures such as (spiking) neural networks based on interference, nonlinear effects, or the nonreciprocity of spin waves could be developed for special applications.

Acknowledgments

The work of F Ciubotaru and S D Cotofana was supported by the European Union's Horizon 2020 Research and Innovation Programme through the FET-OPEN project CHIRON under Grant Agreement 801055 as well as by its Horizon Europe research and innovation program within the project SPIDER (Grant Agreement No. 101070417). F Ciubotaru acknowledges the support from imec's industrial affiliate program on Exploratory logic. A Chumak acknowledges the financial support by the European Research Council (ERC) Proof of Concept Grant 101082020 5G-Spin and by the Austrian Science Fund (FWF) via Grant No. I 4917-N (MagFunc).

1.4. Computing with skyrmions

Riccardo Tomasello1, Christos Panagopoulos2 and Mario Carpentieri1

1 Department of Electrical and Information Engineering, Politecnico di Bari, 70125 Bari, Italy

2 Division of Physics and Applied Physics, School of Physical and Mathematical Sciences, Nanyang Technological University, Singapore 639798, Singapore

Status

Magnetic skyrmions are localized whirling spin textures with topological properties and particle-like characteristics [30]. Research has exploded in the last decade, with proposals for new materials and device concepts [30] offering intriguing functionalities ranging from memory to computing applications. Originally observed at low temperatures in B20 compounds, skyrmions can today be found at room temperature in many thin-film materials (ferromagnets, ferrimagnets, and synthetic antiferromagnets (SAFs)) [30]. Their small size and the ease with which they can be manipulated electrically make these topologically protected chiral spin configurations attractive as information carriers in compact and energy-efficient devices. Furthermore, their anticipated insensitivity to defects and potential for low energy consumption [31] have accelerated efforts to understand their formation, stability, and dynamics, and interest is already extending towards unconventional applications. In particular, the topological properties of magnetic skyrmions offer new paradigms for reservoir, stochastic, neuromorphic, and quantum operations [32, 33].

Single skyrmions or a skyrmion fabric [34] are promising for reservoir computing because the nonlinear dynamics of magnetic skyrmions can increase the system's nonlinearity and therefore the efficiency of the reservoir. In stochastic computing, decorrelation of the bitstreams is essential for optimal operation [35]. This can be achieved with a skyrmion-based reshuffle chamber (a missing element in current implementations of stochastic computing), which has been demonstrated by exploiting the diffusion of skyrmions [35] (figure 6(a)).
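The role of decorrelation in stochastic computing can be shown in a few lines. In unipolar stochastic computing, a value p is encoded as a bitstream with a fraction p of ones, and a single AND gate multiplies two independent streams. If a stream is ANDed with itself, the result is p rather than p²; randomly permuting one copy restores (near) independence. In the sketch, a software random shuffle stands in for the skyrmion reshuffle chamber of [35], which achieves a similar effect physically through skyrmion diffusion; the function names and stream length are hypothetical.

```python
import random


def to_bitstream(p, n, rng):
    """Unipolar encoding: each bit is 1 with probability p."""
    return [1 if rng.random() < p else 0 for _ in range(n)]


def sc_multiply(x, y):
    """Stochastic multiplication: bitwise AND, then the fraction of ones."""
    return sum(a & b for a, b in zip(x, y)) / len(x)


def reshuffle(stream, rng):
    """Stand-in for a skyrmion reshuffle chamber: permutes the bits, keeping
    the encoded value (number of ones) but destroying bit-level correlation."""
    out = list(stream)
    rng.shuffle(out)
    return out


rng = random.Random(42)
a = to_bitstream(0.5, 10_000, rng)
print("a * a, correlated:   ", sc_multiply(a, a))                  # ~0.5, wrong
print("a * a, decorrelated: ", sc_multiply(a, reshuffle(a, rng)))  # ~0.25
```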

Figure 6. (a) Example of a skyrmion reshuffler. Reproduced from [35], with permission from Springer Nature. (b) Memristive behavior of skyrmions for synapse applications. Reproduced from [36], with permission from Springer Nature.

Skyrmions have also been proposed for neuromorphic applications. While skyrmion-based neurons have so far only been proposed theoretically [32], a skyrmion-based synapse has already been demonstrated experimentally [36] (figure 6(b)), with the synaptic weight represented by the Hall resistivity.

Skyrmions could also serve as a source of non-Abelian statistics. Imprinting skyrmions on superconductors may trigger the formation of Majorana quasiparticles, granting unrivalled resilience to the decoherence that plagues other quantum computing platforms [37]. Nano-skyrmions are also of interest as potential logical elements of a quantum processor [37]. They develop quantized eigenstates with distinct helicities and out-of-plane magnetizations. In a skyrmion qubit, information is stored in the quantum helicity degree of freedom, and the logical states can be adjusted by electric and magnetic fields, offering a rich operating regime with high anharmonicity.

Current and future challenges

Fundamental challenges for the technological use of magnetic skyrmions include controlling their size, sustaining their positional stability, enhancing electrical readout, achieving deterministic nucleation and annihilation, and reducing or suppressing the skyrmion Hall angle while maintaining high velocity, as well as integrating skyrmion devices with digital circuits while limiting the associated circuit overhead.

Skyrmion position can be controlled by engineering pinning sites with lithography or ion irradiation [30]. Improving electrical readout calls for optimized magnetic tunnel junctions with large tunneling magnetoresistance (TMR-MTJs) [38]. Nucleation/annihilation protocols enable deterministic functionalities [30] but should be optimized to decrease energy consumption. The skyrmion Hall angle can be suppressed in SAFs [31]. However, the skyrmion velocity [39] is still far from theoretical predictions, calling for new and/or optimized materials and device architectures.

For unconventional applications, reservoir computing based on magnetic textures has not yet been realized experimentally. In stochastic computing, the first proof of concept of an algebraic computation based on skyrmions is still missing, and hence so is a full skyrmion-based implementation. Furthermore, the energy efficiency, speed and accuracy of such computation should be evaluated and compared with standard CMOS systems. In neuromorphic computing, some proposals rely on a skyrmion-based spin-transfer-torque (STT) oscillator, which has yet to be demonstrated. Proposals involving memristive skyrmion behavior, such as skyrmion synapses, should instead be based on large-TMR MTJs for improved detection and more compact solutions. Specifically for the results in [36], the estimated network accuracy of 89% could be improved.

Beyond non-interventional creation or observation studies, skyrmions promise to dramatically improve quantum operations. Skyrmion-vortex interaction in device architectures with magnetic skyrmions imprinted on conventional superconductors can assist topological quantum computing through operations carried out on anyons. A novel quantum hybrid architecture composed of Néel skyrmions and niobium offers realistic hope [40]. Moving forward, in a homogeneous stack of a chiral magnet and a superconductor, a skyrmion-vortex pair, and hence a Majorana zero mode, would be intrinsically mobile. This would allow non-perturbative, non-contact braiding operations by moving skyrmion-vortex pairs around the surface.

Quantum skyrmions in frustrated magnets offer a new element for the construction of qubits based on the energy-level quantization of the helicity degree of freedom. The skyrmion state, energy-level spectra, transition frequency, and qubit lifetime are configurable and can be engineered by adjusting external electric and magnetic fields, offering a rich operation regime with high anharmonicity.

Advances in science and technology to meet challenges

Over the next two decades, a concerted experimental effort is needed to bring skyrmions into future devices and realize their technological potential for information processing beyond existing limits. Challenges such as decreasing the energy consumption of skyrmion devices, controlling skyrmion size, and suppressing the skyrmion Hall angle could be addressed by exploring new material architectures. One route is to reduce dimensionality, with emphasis on 2D van der Waals materials. At the same time, 3D bulk materials can be explored, in which improved imaging techniques have revealed static 3D structures such as vortex rings and hopfions [41]. On the dynamical side, however, the community has so far relied on theoretical predictions of current-driven hopfion motion.

Emphasis on frustrated magnetism, intrinsic to triangular or hexagonal lattices with antiferromagnetic spin correlations, can exploit the resulting non-collinear magnetic order, which itself breaks spatial inversion symmetry, for the formation and manipulation of skyrmions only a few nanometers wide. Hybrid systems combining ferro-, ferri-, and antiferromagnets are another focus. Specifically for unconventional skyrmion applications, the experimental realization of reservoir computing needs efficient and precise electrical measurements across multiple contacts, so that the measured resistance can be reliably linked to the distribution of magnetic textures. Stochastic computing demands integration with CMOS technology via stacks compatible with the already integrated and commercialized STT-MRAM technology. Neuromorphic computing calls for enhancing skyrmion detection (figure 7(a)) beyond the 30% TMR threshold for MTJs. This could be achieved by combining state-of-the-art CoFeB-MgO MTJs with conventional skyrmion-hosting magnetic multilayers. Another direction could be combinatorial, bringing together topological magnetism and acoustic waves, a combination already promising for skyrmion-based synaptic behavior [42].

Figure 7. (a) Sketch of the improvements of skyrmion detectivity through the use of a skyrmion-based MTJ. R is the electrical resistance of the MTJ, Dsk is the skyrmion diameter, and Nsk is the number of skyrmions. (b) Skyrmion qubit concept. Reprinted (figure) with permission from [37], Copyright (2021) by the American Physical Society.

For quantum computing operations in skyrmion-superconductor hybrids, several major tasks must be accomplished to achieve device capability. First, it must be ensured that the magnetic interactions can spin-polarize the superconductor and cause a topological phase transition. Second, for Majorana braiding, the magnetic homogeneity of the architecture needs to be better than is commonly achieved with magnetron sputtering. For qubit hardware, the applicability of nano-skyrmions can be further improved by developing cleaner magnetic samples and interfaces in engineered architectures, without trading off qubit anharmonicity and scalability. Notably, demonstrating tunable macroscopic quantum tunneling, coherence, and oscillations in magnetic nano-skyrmions would establish the helicity of topologically protected chiral spin configurations as a quantum variable, much like macroscopic quantum tunneling and energy quantization in Josephson junctions, and would thus be the fundamental physical step towards a practical skyrmion qubit.

Concluding remarks

Skyrmions are fascinating topological magnetization configurations with realistic potential to benefit computing paradigms. Their small size, particle-like behavior, topological properties, manipulability by electrical current, and memristive features promise disruptive advances in reservoir, stochastic, neuromorphic, and quantum computing. Stabilizing skyrmions in large-TMR MTJs will enhance skyrmion detectivity, with unprecedented effects on unconventional operations. Extending the investigation to 3D bulk magnetic systems and 2D materials is expected to lead to major breakthroughs and new functionalities associated with complex topology and multiple degrees of freedom. Remarkable advances in unconventional computing can be driven by combining different physical systems, such as topological magnetism and acoustic waves, which already promise control over skyrmion-based synaptic behavior. Skyrmions interacting with superconductors can lead to chiral superconductivity and Majorana braiding platforms. Nano-skyrmions stabilized in magnetic disks bounded by electrical contacts will allow static fields to control the quantized energy spectra, enabling changes in helicity between energetically favored levels. Skyrmions are expected to become a major building block for the next generation of low-power computing architectures, spanning the classical and quantum regimes.

Acknowledgments

This work was supported by the Projects No. PRIN 2020LWPKH7 and PRIN20222N9A73 funded by the Italian Ministry of University and Research. C Panagopoulos acknowledges support from the National Research Foundation (NRF) Singapore Competitive Research Programme NRF-CRP21-2018-0001, and the Singapore Ministry of Education (MOE) Academic Research Fund Tier 3 Grant MOE2018-T3-1-002.

2.1. Neuromorphic computing with memristive devices

Peng Lin1, Gang Pan1 and J Joshua Yang2

1 College of Computer Science and Technology, Zhejiang University, Hangzhou 310013, People's Republic of China

2 Department of Electrical and Computer Engineering, University of Southern California, Los Angeles, CA 90089, United States of America

Status

Neuromorphic computing is a promising paradigm for artificial intelligence (AI) systems that aims to develop efficient computing architectures with strong physical and functional resemblance to biological brains. Memristors, a group of emerging memory devices, can reversibly change their conductance through a variety of physical switching mechanisms such as phase change, ionic motion, ferroelectricity and ferromagnetic switching [43]. In particular, ionic memristors share some physical similarities with ion transport in nerve cells (e.g. Ca2+, Mg2+, Na+, K+), which equips them with desirable ion dynamics and enables more efficient emulation of a variety of neuronal and synaptic functions for neuromorphic computing [44].

In recent years, memristor arrays of decent size have been used as static synapses in physical implementations of artificial neural networks (ANNs). Such applications are low-hanging fruit for memristors, because neural networks exploit the strengths and avoid the weaknesses of typical memristive devices, as revealed in [45]. This trend is reflected in a significant increase in application-oriented publications since 2018 (figure 8). Each memristor is not only a weight storage unit but can also directly apply its weight to an incoming voltage input through a voltage-conductance multiplication (by Ohm's law, I = GV) [46], thus co-locating memory and processing within the same cell. A memristor array is essentially a physical neural network between two neuron layers; it supports many possible array arrangements and can be extended to a 3D layout to implement complex neural networks [47]. Owing to their low power consumption and highly parallel computing paradigm, memristor-based systems have achieved numerous successes in ANN-related applications and demonstrated computing efficiencies exceeding 100 TOPS/W.
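The in-memory multiplication described above can be illustrated with a minimal sketch of an ideal crossbar, assuming zero wire resistance, perfectly ohmic devices and virtually grounded columns. Ohm's law gives each cell current G·V, and Kirchhoff's current law sums these currents along each column, so the vector of column currents is a vector-matrix product computed in a single step. Signed weights are represented here by a differential pair of conductances (W = G+ - G-), a common mapping that is an assumption of this sketch rather than the scheme of any cited work; the function names are hypothetical.

```python
def crossbar_vmm(conductances, voltages):
    """Column currents of an ideal crossbar: I_j = sum_i G[i][j] * V[i].

    conductances: rows x columns matrix in siemens (one memristor per cross-point)
    voltages: one input voltage per row, in volts
    """
    n_rows = len(conductances)
    if len(voltages) != n_rows:
        raise ValueError("one input voltage per row is required")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law gives the cell current; Kirchhoff's current law sums it on column j.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


def differential_vmm(g_pos, g_neg, voltages):
    """Signed weights W = G+ - G-, read as the difference of two column currents."""
    i_pos = crossbar_vmm(g_pos, voltages)
    i_neg = crossbar_vmm(g_neg, voltages)
    return [p - n for p, n in zip(i_pos, i_neg)]


if __name__ == "__main__":
    g = [[1e-6, 2e-6],
         [3e-6, 4e-6]]          # siemens
    v = [0.1, 0.2]              # volts
    print(crossbar_vmm(g, v))   # approximately [7e-07, 1e-06] amperes
```

In a physical array, wire resistance, device nonlinearity and peripheral circuits (DACs, ADCs or sense amplifiers) all perturb this ideal result.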

Figure 8. Number of publications related to memristor-based neuromorphic computing systems, retrieved from the Web of Science (WOS) database; data source: Clarivate. Insets: ANN demonstration in an array [46]; Reproduced from [46], with permission from Springer Nature. Diffusive memristor [44]; Reproduced from [44], with permission from Springer Nature. Fully hardware CNN [48]; Reproduced from [48], with permission from Springer Nature. SNN demonstration in an array [49]; Reproduced from [49], with permission from Springer Nature. 8-layer 3D computing array [47]; Reproduced from [47], with permission from Springer Nature. Integrated chip with 11-bit memristors [50]. Reproduced from [50], with permission from Springer Nature.

In the meantime, the dynamical neuronal and synaptic properties of memristors are also under extensive study, with the aim of providing more capable and compact building blocks for neuromorphic computing. For ANN applications, linear conductance modulation of memristors using a burst of identical pulses has been reported [43], which shows promise for accurate weight updates and fast on-chip training of ANNs. Meanwhile, functional memristive devices have been developed to implement spiking neural networks (SNNs), a potentially more efficient type of neural network with high biological plausibility. Important neuron models, such as leaky integrate-and-fire (LIF) and Hodgkin-Huxley (HH), and synaptic plasticity behaviors, such as paired-pulse facilitation (PPF) and spike-timing-dependent plasticity (STDP), have been realized by harnessing the richer dynamical behaviors of memristors. These functions were achieved in a very compact form, normally with a couple of memristive devices, instead of the bulky circuits with many transistors needed in pure CMOS implementations. However, the overall scale of these demonstrations is still limited to small arrays, which significantly hampers the capability of neuromorphic computing systems in practical applications.
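For reference, the LIF model mentioned above is, in its simplest form, tau·dV/dt = -(V - V_rest) + R·I: the membrane potential integrates the input, leaks back toward rest, and fires and resets when it crosses a threshold. Memristive neurons are reported to emulate this behavior through their internal dynamics; the sketch below only integrates the abstract model with the forward Euler method, and all parameter values (time constant, membrane resistance, threshold) are illustrative assumptions rather than device data.

```python
def simulate_lif(input_current, dt=1e-3, tau=20e-3, r_m=1e7,
                 v_rest=0.0, v_th=0.02, v_reset=0.0):
    """Forward-Euler integration of tau * dV/dt = -(V - v_rest) + r_m * I.

    input_current: input current (A) for each time step of length dt (s).
    Returns (spike_steps, membrane_trace).
    """
    v = v_rest
    spike_steps = []
    trace = []
    for step, i_in in enumerate(input_current):
        # Leak toward v_rest plus integration of the input current.
        v += (dt / tau) * (-(v - v_rest) + r_m * i_in)
        if v >= v_th:
            # Fire: record the spike time and reset the membrane potential.
            spike_steps.append(step)
            v = v_reset
        trace.append(v)
    return spike_steps, trace


if __name__ == "__main__":
    spikes, _ = simulate_lif([3e-9] * 100)   # supra-threshold: R*I = 30 mV > 20 mV
    print(spikes)                            # [21, 43, 65, 87]
    quiet, _ = simulate_lif([1e-9] * 100)    # sub-threshold: R*I = 10 mV < 20 mV
    print(quiet)                             # []
```

The leak is what separates LIF from a plain integrator: a weak input settles below threshold instead of eventually firing.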

Current and future challenges

Even though building a neuromorphic system requires a synergistic effort from both hardware and software, the device performance of memristors still plays a decisive role in determining the ultimate capability and functionality of the neuromorphic system. Currently, there are a few prominent challenges for memristive devices.

First, it is still challenging to build large-scale memristor arrays without access transistors. At present, the majority of memristor chips are based on the so-called one-transistor-one-memristor (1T1R) cell design, in which a transistor is integrated with each memristor and serves as a current regulator for the cell. The access transistor can (1) suppress leakage (sneak-path) currents through unselected cells and (2) impose a current compliance that enables accurate conductance tuning and mitigates switching variations. However, the downside of an access transistor is that it essentially limits the use of array-wise parallel programming strategies and makes it challenging to design asynchronous systems, such as those based on SNNs. The 2D scalability and 3D stackability of memristor arrays are also limited by the addition of a transistor to each cell.
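Why leakage through unselected cells matters can be seen in the smallest possible case, a 2x2 array read at cell (0, 0) with the other row and column left floating. In a passive array, current can also flow through the three unselected cells in series, and this sneak path appears in parallel with the target cell. The sketch below assumes ideal wires and idealizes a 1T1R array as fully cutting the sneak path; the resistance values and function names are hypothetical.

```python
def parallel(r_a, r_b):
    """Equivalent resistance of two resistors in parallel."""
    return r_a * r_b / (r_a + r_b)


def read_resistance_2x2(r, with_access_transistors):
    """Apparent resistance when reading cell (0, 0) of a 2x2 crossbar.

    r[i][j] is the resistance (ohms) of the cell at row i, column j. Row 0 is
    biased, column 0 is sensed, and the other row and column are left floating.
    """
    target = r[0][0]
    if with_access_transistors:
        # Idealized 1T1R: unselected transistors are off, so no sneak current flows.
        return target
    # Passive array: current also sneaks row 0 -> column 1 -> row 1 -> column 0
    # through three unselected cells in series, in parallel with the target cell.
    sneak = r[0][1] + r[1][1] + r[1][0]
    return parallel(target, sneak)


if __name__ == "__main__":
    hrs, lrs = 1e6, 1e4                       # high/low resistance states (ohms)
    cells = [[hrs, lrs],
             [lrs, lrs]]
    print(read_resistance_2x2(cells, True))   # 1000000.0
    print(read_resistance_2x2(cells, False))  # about 2.9e4: the HRS cell reads like an LRS cell
```

In larger passive arrays the number of parallel sneak paths grows with array size, which is one reason selector devices or access transistors are usually needed.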

Second, linear analog conductance modulation using identical pulses is a key requirement for on-chip training of ANNs. Although it has been demonstrated in small prototype arrays, uniform linear conductance modulation across large-scale arrays remains challenging, because device-to-device variations in switching voltage, conductance range, response time, etc, can all affect the modulation process. In addition, symmetric programming for potentiation and depression is highly desirable, but it is not well supported by most memristive switching mechanisms.
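The nonlinearity and asymmetry problem can be illustrated with a simple saturating update model, assumed here purely for illustration: each identical potentiation pulse closes a fixed fraction of the remaining gap to the maximum conductance, and each depression pulse closes a (different) fraction of the gap to the minimum. Real devices follow their own mechanism-specific kinetics; the parameter values and function names are hypothetical.

```python
def apply_pulses(g_start, n_pulses, potentiate, g_min=1e-6, g_max=1e-4,
                 alpha_p=0.05, alpha_d=0.15):
    """Conductance trace (S) for a train of identical pulses (hypothetical saturating model).

    Each potentiation pulse closes a fraction alpha_p of the gap to g_max;
    each depression pulse closes a fraction alpha_d of the gap to g_min.
    """
    g = g_start
    trace = [g]
    for _ in range(n_pulses):
        if potentiate:
            g += alpha_p * (g_max - g)
        else:
            g -= alpha_d * (g - g_min)
        trace.append(g)
    return trace


def step_sizes(trace):
    """Conductance change produced by each pulse."""
    return [b - a for a, b in zip(trace, trace[1:])]


if __name__ == "__main__":
    up = step_sizes(apply_pulses(1e-6, 50, potentiate=True))
    down = step_sizes(apply_pulses(1e-4, 50, potentiate=False))
    # An ideal synapse would give equal-size steps; here they shrink pulse by pulse.
    print(up[0] / up[-1])        # > 1: nonlinear potentiation
    # Depression steps are larger than potentiation steps: asymmetric programming.
    print(abs(down[0]) / up[0])
```

Because the step size depends on the present conductance, a training algorithm that assumes a fixed weight increment per pulse will accumulate errors, and unequal alpha_p and alpha_d make potentiation and depression fail to cancel.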

Lastly, variability in memristors is also a key limiting factor for the implementation of SNNs, since dynamical functions such as STDP and LIF usually have a lower tolerance for variations. For example, the standard STDP response of a synapse is nonlinearly related to the timing difference between the pre- and post-synaptic spikes. As a result, variations in the time domain (such as an inaccurate firing delay from an LIF neuron) are nonlinearly magnified in the synaptic response, causing large errors during learning. Solutions that improve the scalability of these SNN functions, whether through more precise control of ionic motion or through other compensation strategies, are highly desirable for the development of SNN-based neuromorphic systems.
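The nonlinear magnification can be made concrete with the widely used pair-based exponential STDP window, dw = a_plus·exp(-dt/tau_plus) for dt > 0 and dw = -a_minus·exp(dt/tau_minus) for dt < 0, where dt = t_post - t_pre. This window shape and its amplitudes and time constants are assumptions of the sketch; measured memristive STDP curves may differ. Because the window is steepest near dt = 0, the same 1 ms timing error costs far more weight accuracy for closely spaced spikes and can even flip potentiation into depression.

```python
import math


def stdp_dw(delta_t, a_plus=0.01, a_minus=0.012, tau_plus=20e-3, tau_minus=20e-3):
    """Weight change for one pre/post spike pair; delta_t = t_post - t_pre (s)."""
    if delta_t > 0:
        return a_plus * math.exp(-delta_t / tau_plus)     # pre before post: potentiation
    if delta_t < 0:
        return -a_minus * math.exp(delta_t / tau_minus)   # post before pre: depression
    return 0.0


def jitter_error(delta_t, jitter):
    """Weight error caused by a timing error `jitter` added to the intended delta_t."""
    return abs(stdp_dw(delta_t + jitter) - stdp_dw(delta_t))


if __name__ == "__main__":
    for dt_ms in (40.0, 10.0, 2.0, 0.5):
        # Same 1 ms timing error (post-synaptic spike fires early) at different separations.
        err = jitter_error(dt_ms * 1e-3, -1e-3)
        print(f"delta_t = {dt_ms:4.1f} ms -> |weight error| = {err:.2e}")
```

The printed error grows as the intended spike separation shrinks, and at 0.5 ms the jitter reverses the sign of the update.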

Of course, challenges also exist in other aspects of neuromorphic systems. Developing a global training algorithm for large-scale SNNs is among the foremost of these challenges. Moreover, implementing neuromorphic systems still requires more efficient peripheral circuit designs and more sophisticated system architectures, as well as a better understanding of the working principles of SNNs.

Advances in science and technology to meet challenges

First, we would like to see continued efforts in materials and device engineering to develop new device concepts, achieve breakthroughs in device performance and gain a better understanding of switching mechanisms. This is supported by the rich switching behaviors of different types of memristors, which are dictated by a combination of factors including material composition, fabrication process, device morphology and many others; together these factors provide a high degree of design freedom to tailor a device to specific application requirements. Alternative memristor designs based on non-filamentary switching mechanisms may be developed to achieve improved switching uniformity. For example, a new type of electrochemical memristor has been reported that demonstrated better switching uniformity and excellent analog programmability, though its application in large arrays needs further verification [51].

Meanwhile, we hope to see new fabrication technologies or material synthesis methods for memristors, such as the use of sophisticated tools from commercial foundries. The introduction of ion implantation into the CMOS process dramatically improved the doping profiles of MOSFETs, and we believe a similarly disruptive improvement may be achieved for memristors. For example, in a preliminary study, an epitaxial tool was used to grow a single-crystal SiGe film with nanometer-wide dislocation channels, which acted as predefined ion channels for switching. Owing to the better control over ion transport, improved switching uniformity was achieved [52].

Finally, challenges at the device and hardware level may also be overcome through complementary research in computer science and neuroscience. For example, more robust, hardware-friendly algorithms and computational models could be developed to mitigate the variability of memristors and to exploit unexpected properties discovered during new device exploration. Meanwhile, the parameters of memristive devices and neural networks could be co-optimized through comprehensive modeling and simulation. Lastly, because our understanding of the brain is still in its infancy, new findings in neuroscience could also help establish new training methods and inspire new network architectures.

Concluding remarks

Neuromorphic computing is a disruptive technological solution for future AI, and memristors are among the leading candidates for implementing parallel, analog and in-memory computing, as well as the rich dynamics, of neuromorphic computing systems. At the current stage, large-scale memristor-based neuromorphic systems are mainly based on ANN algorithms, while SNN-based demonstrations lag far behind, primarily due to the lack of appropriate training algorithms and the difficulty of reliably obtaining the desired dynamical functions at large scale.

As more of the technical challenges described in this roadmap are resolved, much more substantial progress in neuromorphic computing is expected. A large-scale memristor system based on a comprehensive SNN design could lead to significant improvements in energy efficiency, performance and functionality over existing AI hardware. In return, SNN hardware could inspire the development of SNN algorithms and even deepen our understanding of biological neural networks, which are inefficient to simulate on conventional computers.

Acknowledgments

P L was partially supported by the National Key R&D Plan of China (Grant No. 2022YFB4500100) and the Major Program of Natural Science Foundation of Zhejiang Province in China (LDQ23F040001); G P was partially supported by the National Science Foundation of China (No. 2023752 and No. 61925603) and the Key Research and Development Program of Zhejiang Province in China (2020C03004).

3.1. Nanomaterials for unconventional computing

Aida Todri-Sanial1, Gabriele Boschetto2 and Kremena Makasheva3

1 Electrical Engineering Department, Eindhoven University of Technology, Eindhoven 5612 AZ, The Netherlands

2 Microelectronics Department, LIRMM, Université de Montpellier, 34095 Montpellier, France

3 Laplace, Laboratory on Plasma and Conversion of Energy, CNRS, UT3, INPT, University of Toulouse, 31062 Toulouse, France


Status

With the emergence of unconventional computing paradigms, it becomes possible to overcome the fundamental limitations of the von Neumann architecture and perform highly complex functions at extremely low power. This calls for materials and devices that can emulate the biological functions of neurons and synapses. Named as a combination of 'memory' and 'resistor', memristors have become the most important electronic component for brain-inspired neuromorphic computing. A memristor can set its resistance to multiple states by memorizing the history of previous electrical inputs, which allows it to mimic the synapses and neurons of biological neural networks. Switching in memristive devices is a reversible and controllable change of resistance induced by different stimuli, such as current, voltage or light, through different physical processes, such as ionic/electronic motion and redox reactions. Material selection therefore plays a key role in conductive path formation and in the modulation of resistive switching behavior, and here we review memristors according to their material properties. Because their resistance states depend on the history of the applied electrical bias, memristors can store information in the form of electrical resistance, and they are typically driven by one of four main mechanisms: electrochemical reactions (namely, redox and ion migration), phase changes (such as thermally activated amorphous-crystalline transitions), tunnel magnetoresistance (i.e. spin-dependent tunneling resistance) or ferroelectricity (namely, tunneling or domain-wall transport). In addition, memristors can process information intrinsically by 'letting physics compute' (i.e. performing complex signal transformations through their physical dynamics), which is beneficial beyond neuromorphic computing, for example in solving NP-hard optimization problems and in hardware security.
To uncover their potential, we summarize here the nanomaterials used for unconventional computing and their different types of switching mechanisms (figure 9).
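The history dependence described above can be illustrated with the linear ion-drift model, a textbook idealization of a redox/ion-migration memristor in which a doped, low-resistance region of normalized width x grows or shrinks with the charge that has flowed through the device. It ignores the nonlinear kinetics, thresholds and stochasticity of real devices and does not describe the phase-change, magnetic or ferroelectric mechanisms; the parameter values and function name below are illustrative assumptions. Two devices that start in the same state but experience opposite bias histories end up in different resistance states, while zero bias leaves the state unchanged (non-volatility).

```python
def simulate_linear_drift(voltages, dt, r_on=100.0, r_off=16e3, d=10e-9,
                          mu_v=1e-14, x0=0.1):
    """Resistance trace (ohms) of a linear ion-drift memristor driven by a voltage waveform.

    x = w / D is the normalized width of the doped (low-resistance) region, and
    M(x) = r_on * x + r_off * (1 - x). The state evolves as
    dx/dt = mu_v * r_on / d**2 * i(t), with x clipped to [0, 1].
    """
    x = x0
    resistances = []
    for v in voltages:
        m = r_on * x + r_off * (1.0 - x)
        i = v / m                                   # Ohm's law at the present state
        x += mu_v * r_on / d**2 * i * dt            # charge flow moves the doped/undoped boundary
        x = min(max(x, 0.0), 1.0)                   # hard bounds at the device edges
        resistances.append(r_on * x + r_off * (1.0 - x))
    return resistances


if __name__ == "__main__":
    dt = 1e-3                                               # 1 ms time step
    after_set = simulate_linear_drift([1.0] * 500, dt)      # positive bias history
    after_reset = simulate_linear_drift([-1.0] * 500, dt)   # negative bias history
    idle = simulate_linear_drift([0.0] * 10, dt)            # no bias: state is retained
    print(after_set[-1], after_reset[-1], idle[-1])
```

Reading both devices with the same small voltage afterwards would yield different currents, which is the sense in which the resistance "remembers" the applied bias history.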

Figure 9. Illustration of different classes of nanomaterials used for unconventional computing, based on their dimensionality.
