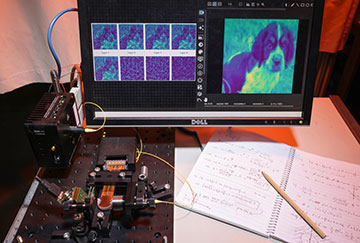

Researchers at EPFL have used a new scattering-based system to classify different types of images, showing that the more they scattered incoming light, the more accurate their classification. [Image: Alain Herzog/Jih-Liang Hsieh]

Scientists in Switzerland have shown how linear optical components can be used to carry out both the linear and nonlinear operations needed in neural networks (Nat. Photonics, doi: 10.1038/s41566-024-01494-z). They say that their new scheme, which relies on multiple light scatterings, might in the future enable faster and more efficient artificial intelligence (AI) compared with what can be done with conventional electronic computers.

The need for efficiency

AI has made significant progress in recent years thanks to more powerful computers, better training algorithms and huge amounts of online data. But the vast number of neural connections needed for AI programs has led to a huge increase in energy consumption when using digital processors. While Google and others have improved efficiencies by developing dedicated chips, these devices still rely on the basic von Neumann architecture that sees data continually shunted back and forth between processor and memory.

Analog optical computers can in principle overcome this problem thanks to the low power needed to move data through a circuit and the greater potential for parallel processing, given that (uncharged) photons do not naturally interact with one another. These devices are particularly well-suited to the linear operations that consume so much time and energy in their digital counterparts―the matrix multiplications needed to calculate the summed, weighted inputs to each neuron. Indeed, several companies have now been set up to commercialize this technology (see “Photonic Computing for Sale,” OPN, January 2023).

More problematic, however, are the nonlinear operations that convert a neuron's combined input into its output. The absence of interaction between photons means that one photon cannot be used to control another, as electrons do in transistors. So scientists developing optical neural networks either combine optical and electronic components, or else contrive interactions by using an intermediary―often a material whose optical properties change in response to light. However, this approach generally calls for high-powered lasers to enhance the light–matter interaction with the material in question.

Exploiting light scattering

In the latest work, Christophe Moser and colleagues at the Ecole Polytechnique Fédérale de Lausanne (EPFL) have shown how this problem could be overcome by exploiting the phenomenon of light scattering. They direct a low-powered laser beam at a spatial light modulator made from liquid crystal, and position a mirror opposite the modulator so that the beam bounces off the modulator and mirror multiple times before being recorded by a camera. Each successive region of the modulator is encoded with a slightly different version of the device's input data.

The scattering of encoded laser light off the encoded modulator is a nonlinear process yielding an output field at the camera that can be described in terms of a series of polynomials―as is the case with the output from a typical neural network. The greater the number of scatterings, the higher the order of polynomials that result.
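The link between the number of scatterings and the polynomial order can be illustrated with a toy model. The sketch below is not the researchers' optical model: it assumes a single scalar input x, real-valued fields, a modulator that multiplies each pixel's field by a term linear in x, and a random linear mixing of pixels as a stand-in for propagation between modulator and mirror. The function names and parameter ranges are hypothetical. It tracks the field only; a camera records intensity, which would double the degree.

```python
import numpy as np
from numpy.polynomial import Polynomial


def output_polynomials(num_bounces, num_pixels=4, seed=0):
    """Toy model: represent each pixel's field as a polynomial in a scalar input x."""
    rng = np.random.default_rng(seed)
    # Uniform input beam: a constant (degree-0) field at every pixel.
    field = [Polynomial([1.0]) for _ in range(num_pixels)]
    for _ in range(num_bounces):
        # Modulator (assumed): pixel i multiplies its field by (bias_i + scale_i * x).
        scale = rng.uniform(0.5, 1.5, num_pixels)
        bias = rng.uniform(0.5, 1.5, num_pixels)
        modulated = [f * Polynomial([b, s]) for f, s, b in zip(field, scale, bias)]
        # Propagation (assumed): a linear mixing of pixels, which cannot raise the degree.
        mix = rng.uniform(0.1, 1.0, (num_pixels, num_pixels))
        field = [
            sum((modulated[i] * float(mix[j, i]) for i in range(num_pixels)),
                Polynomial([0.0]))
            for j in range(num_pixels)
        ]
    return field


def max_degree(num_bounces):
    """Highest polynomial order in x present in the output field."""
    return max(p.degree() for p in output_polynomials(num_bounces))


for bounces in (1, 2, 3, 5):
    print(bounces, "bounces -> polynomial order", max_degree(bounces))
```

In this toy model every step is linear in the field, yet each encounter with the data-dependent modulator raises the order in x by one, so the output order equals the number of bounces.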

The researchers enable pattern recognition by using two different parameters―for scaling and bias―to modify the value of each pixel, as if they were tweaking the weights of a neuron. Likewise, each reflective region of the spatial light modulator corresponds to a different layer in the optical network. By using a computer model to work out the optimum values of the two parameters in each pixel in each layer, they can then expose the device to new images and extract the correct image label from the intensity pattern recorded by the camera.
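As a rough illustration of how per-pixel scaling and bias parameters could enter such a network, here is a minimal forward-pass sketch. It rests on several assumptions that are not from the article: phase-only modulation, a unitary FFT standing in for propagation between reflections, and class scores read out by summing camera intensity over equal detector regions. The function `forward` and its arguments are hypothetical names, and the parameters here are random rather than trained.

```python
import numpy as np


def forward(x, scale, bias, num_classes):
    """Toy forward pass.

    x: (n,) input data, e.g. a flattened image with values in [0, 1].
    scale, bias: (layers, n) parameters, one pair per pixel per layer.
    Returns one score per class, taken from the simulated camera intensity.
    """
    n = x.size
    field = np.ones(n, dtype=complex) / np.sqrt(n)  # unit-power input beam
    for s, b in zip(scale, bias):
        phase = s * x + b                        # same data, rescaled and shifted per layer
        field = field * np.exp(1j * phase)       # assumed phase-only modulation
        field = np.fft.fft(field, norm="ortho")  # assumed unitary stand-in for propagation
    intensity = np.abs(field) ** 2               # a camera records intensity, not field
    regions = np.array_split(intensity, num_classes)  # assumed readout regions
    return np.array([r.sum() for r in regions])


rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, 64)
scale = rng.normal(size=(3, 64))  # untrained, random parameters for illustration
bias = rng.normal(size=(3, 64))
scores = forward(x, scale, bias, num_classes=10)
print("predicted label:", int(np.argmax(scores)))
```

In a trained system, `scale` and `bias` would be optimized on a computer model so that the brightest readout region matches the correct label.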

Putting the scheme to the test

Moser and colleagues tested the scheme, which they call nonlinear processing with only linear optics (nPOLO), using three different image sets featuring fashion items, handwritten numerals and everyday objects such as electronic devices and pets. In each case, they found they could label unknown images more accurately when scattering the light multiple times than with just a single reflection. They also saw the biggest benefit for the most complex data, the images of everyday objects.

Looking at how the accuracy scaled with both the number of layers and the number of pixels in each layer, they found that both parameters boosted performance equally up to a certain parameter count―after which, having more layers proved a (slightly) bigger bonus. They also found that the difference between network prediction and reality fell away with parameter count according to a power law, which, they point out, is also the case with conventional neural networks operated by the company OpenAI. This attribute, they maintain, is “encouraging for the development of future large-scale networks.”
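A power-law relationship of this kind, error ≈ a · N^(−α) for parameter count N, appears as a straight line on a log-log plot, so the exponent can be estimated with a linear fit. The sketch below uses made-up, noise-free numbers purely for illustration; they are not results from the study, and `fit_power_law` is a hypothetical helper.

```python
import numpy as np


def fit_power_law(param_counts, errors):
    """Fit errors ~ a * param_counts**(-alpha) by least squares in log-log space."""
    slope, intercept = np.polyfit(np.log(param_counts), np.log(errors), 1)
    return np.exp(intercept), -slope


# Synthetic data generated from a known power law (a = 2.0, alpha = 0.3).
param_counts = np.array([1e3, 3e3, 1e4, 3e4, 1e5])
errors = 2.0 * param_counts ** -0.3

a, alpha = fit_power_law(param_counts, errors)
print(f"a = {a:.3f}, alpha = {alpha:.3f}")
```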

What's more, they also discovered that experiments carried out using multiple layers were more robust against phase noise in the pixels and misalignment of the modulator and mirror. As to why this should be the case, they suggest that “the model learns multiple paths from the input data to the output plane during training,” meaning that the higher polynomial orders are less likely to lose meaningful information.

Lead authors Mustafa Yildirim and Niyazi Ulas Dinc reckon that the new scheme could make neural networks up to 1,000 times more energy efficient, assuming that scientists can increase the current modulator rate of 50 Hz to a few tens of GHz and make these devices CMOS compatible. Also critical, they say, will be developing efficient interconnects for large data transfer between the optical mechanism and a digital electronic memory.