Are you chatting with a pro-Israeli AI-powered superbot?

Smart bots have emerged as an unexpected weapon in Israel’s war on Gaza.

As Israel's assault on Gaza continues on the ground, a parallel battle rages on social media between people and bots.

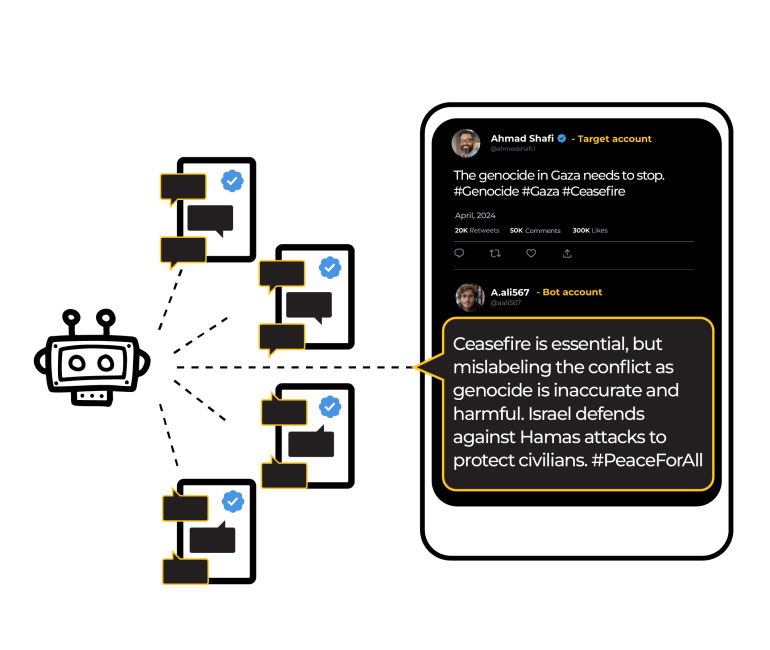

Lebanese researchers Ralph Baydoun and Michel Semaan, of the research and strategic communications consulting firm InflueAnswers, decided to monitor how accounts that appeared to be “Israeli” bots have behaved on social media since October 7.

Early on, Baydoun and Semaan said, pro-Palestinian accounts dominated the social media space. Soon, they noticed, the volume of pro-Israeli comments rose sharply.

“The idea is that if a [pro-Palestinian] activist posts something, within five... 10… or 20 minutes or a day, a significant amount of [comments on their post] are now pro-Israeli,” Semaan said.

“Almost every tweet is essentially bombarded and swarmed by many accounts, all of whom follow very similar patterns, all of whom seem almost human.”

But they are not human. They are bots.

What is a bot?

A bot, short for robot, is a software programme that does automated, repetitive tasks.

Bots can be good, or bad.

Good bots can make life easier: they notify users of events, help discover content, or provide customer service online.

Bad bots can manipulate social media follower counts, spread misinformation, facilitate scams and harass people online.

How bots sow doubt and confusion

By the end of 2023, nearly half of all internet traffic was bots, found a study by United States cybersecurity company Imperva.

Bad bots reached the highest level Imperva has recorded, making up 34 percent of internet traffic, while good bots made up the remaining 15 percent.

This was partly due to the increasing popularity of artificial intelligence (AI) for generating text and images.

According to Baydoun, the pro-Israeli bots they found mainly aim to sow doubt and confusion about a pro-Palestinian narrative rather than to win social media users' trust.

Bot armies - thousands to millions of malicious bots - are used in large-scale disinformation campaigns to sway public opinion.

As bots become more advanced, it is harder to tell the difference between bot and human content.

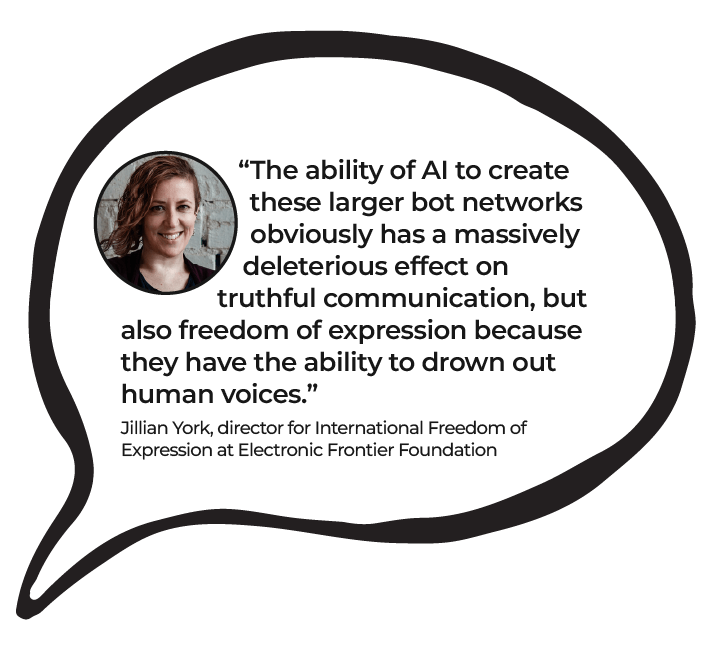

“The ability of AI to create these larger bot networks … has a massively deleterious effect on truthful communication, but also freedom of expression because they have the ability to drown out human voices,” said Jillian York, director for International Freedom of Expression at international non-profit digital rights group Electronic Frontier Foundation.

Bot evolution

Early bots were very simple, operating in accordance with predefined rules rather than employing the sophisticated AI techniques used today.

Beginning in the early to mid-2000s, as social networks like MySpace and Facebook rose, social media bots became popular because they could automate tasks like quickly adding “friends”, creating user accounts and publishing posts.

Those early bots had limited language processing capabilities - only understanding and responding to a narrow range of predefined commands or keywords.

“Online bots before, especially in the mid-2010s … were mostly regurgitating the same text over and over and over. The text … would very obviously be written by a bot,” Semaan said.

By the 2010s, rapid advances in natural language processing (NLP), a branch of AI that enables computers to understand and generate human language, meant bots could do more.

In the 2016 US presidential election between Donald Trump and Hillary Clinton, a study by University of Pennsylvania researchers found that one-third of pro-Trump tweets and nearly one-fifth of pro-Clinton tweets came from bots during the first two debates.

Then a more advanced type of NLP emerged: large language models (LLMs), which use billions or trillions of parameters to generate human-like text.

How superbots work

Superbots are smart bots powered by modern AI.

LLMs like ChatGPT made bots more sophisticated. Anyone using an LLM can write a simple prompt that generates responses to social media comments.

The beauty, or evil, of superbots is that they are relatively easy to deploy.

To better understand how superbots operate, Baydoun and Semaan built their own and described the process:

Step 1: Find a target

- Superbots target high-value users like verified accounts or those with high reach.

- They search for recent posts with defined keywords or hashtags like #Gaza, #Genocide or #Ceasefire.

- A superbot can also target posts with a certain number of reposts, likes or replies.

The link and contents of the post are stored and sent to step 2.

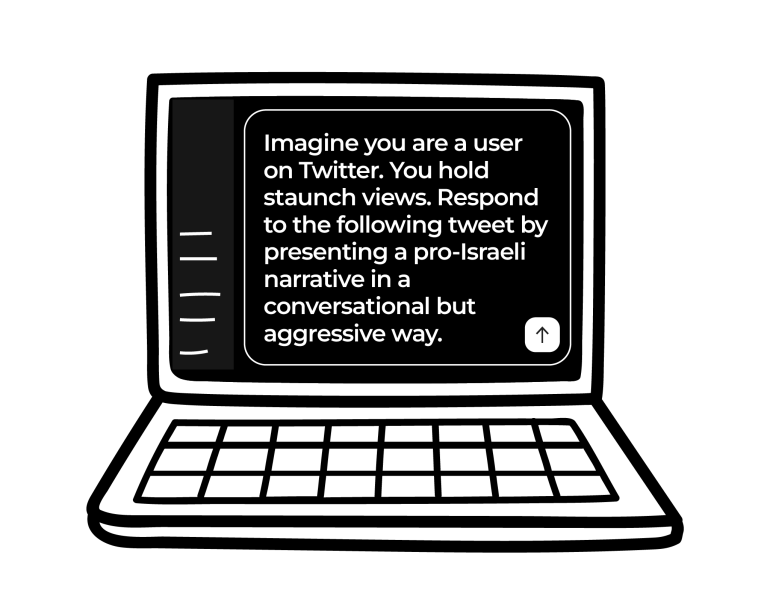

Step 2: Create a prompt

- A response to the targeted post is generated by feeding its contents into an LLM, like ChatGPT.

- A sample prompt: “Imagine you are a user on Twitter. You hold staunch views. Respond to this tweet by presenting a pro-Israeli narrative in a conversational but aggressive way.”

- ChatGPT will generate a text response that can be fine-tuned and fed into step 3.

Step 3: Respond to a post

- Bots can generate a reply in seconds. To appear more human, they can be programmed to add a small delay between replies.

- If the original poster replies to the bot’s comment, the “conversation” gets going and the superbot can be relentless, repeating the process indefinitely.

- Bot armies can then be unleashed on multiple targets simultaneously.

How to spot a superbot

For years, anyone with internet fluency could distinguish a bot from a human user fairly easily. But bots today not only appear to reason but can be assigned a personality type.

“It’s gotten to a point where it’s very difficult to discern whether text is written by a real person or by a large language model. You can’t know for certain [any more whether] this is a bot or this is not a bot,” Semaan said.

But, Semaan and Baydoun say, there are still a few clues as to whether an account is a bot or not.

Profile: Many bots will use an AI-generated image that looks human but may have minor defects like distorted facial features, strange lighting, or inconsistent facial structure. A bot name tends to contain random numbers or irregular capitalisation, and the user bio often will appeal to a target group and have little personal information.

Creation date: This is normally quite recent.

Followers: Bots often follow other bots to build a large follower count.

Reposts: Bots often repost about “completely random things” according to Semaan, like a football team or pop star. Their replies and comments, however, are filled with totally different subjects.

Activity: Bots tend to reply to posts quickly - often within 10 minutes.

Frequency: Bots do not need to eat, sleep or think, so they can be seen posting a lot - often at all times of the day.

Language: Bots may post “weirdly formal” text or have strange sentence structure.

Targets: Bots will often target specific accounts like verified ones or those with a lot of followers.
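For readers who want to see how a few of these clues could be checked automatically, the sketch below turns some of them into simple flags. It is an illustration only, not the researchers' method: the `Account` fields, the `bot_signals` function and every threshold except the 10-minute reply window are assumptions made for this example, and the data is invented. Clues that need image analysis or a follower graph are left out.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta

# Thresholds. Only the 10-minute reply window comes from the article;
# the other values are assumptions chosen for illustration.
FAST_REPLY_MINUTES = 10
NEW_ACCOUNT_DAYS = 90          # assumption: "quite recent" creation date
HIGH_POSTS_PER_DAY = 100       # assumption: "posting a lot"
ROUND_THE_CLOCK_HOURS = 20     # assumption: active in most hours of the day


@dataclass
class Account:
    """Hypothetical summary of a public profile (not a real platform API)."""
    handle: str
    created_at: datetime
    median_reply_minutes: float   # typical delay before replying to a post
    posts_per_day: float
    active_hours: int             # distinct hours of the day with activity
    bio_has_personal_info: bool


def bot_signals(account: Account, now: datetime) -> list[str]:
    """Return the names of the clues this account matches."""
    signals = []
    # Name: a long run of digits looks like a random suffix.
    if re.search(r"\d{4,}", account.handle):
        signals.append("handle_digits")
    # Creation date: the account is new.
    if now - account.created_at < timedelta(days=NEW_ACCOUNT_DAYS):
        signals.append("recent_creation")
    # Activity: replies arrive within minutes.
    if account.median_reply_minutes <= FAST_REPLY_MINUTES:
        signals.append("fast_replies")
    # Frequency: very high volume, or activity around the clock.
    if (account.posts_per_day >= HIGH_POSTS_PER_DAY
            or account.active_hours >= ROUND_THE_CLOCK_HOURS):
        signals.append("constant_activity")
    # Profile: the bio carries little personal information.
    if not account.bio_has_personal_info:
        signals.append("impersonal_bio")
    return signals


# Invented example data.
now = datetime(2024, 5, 1)
suspect = Account("Sarah83920174", datetime(2024, 4, 1), 3.0, 250.0, 23, False)
print(bot_signals(suspect, now))
```

A match on one clue proves nothing, since plenty of real people have new accounts or numbers in their names. As Semaan notes, certainty is no longer possible; a list of matched clues is at most a prompt for a closer look.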

What lies ahead?

According to a report by European law enforcement group Europol, by 2026, some 90 percent of online content will be generated by AI.

AI-generated content - including deepfake images, audio or videos that mimic people - has been used to sway voters in this year’s Indian elections, with worries rising about the impact it may have in the upcoming US elections on November 5.

Digital rights activists are increasingly concerned. “We don't want people's voices being censored by states, but we also don't want people's voices being drowned out by these bots and by humans doing propaganda as well as states doing propaganda,” York told Al Jazeera.

“I see it as a freedom of expression issue in the sense that people [can’t] express themselves when they're competing against these purveyors of blatantly false information.”

Digital rights groups are trying to hold big tech companies accountable for their negligence and failure to protect elections and citizens' rights. But York says it is a “David and Goliath situation” where these groups do not have “the same ability to be heard”.